Shared Memory Clusters: Observations On Capacity And Response Time

Our marketing friends correctly observe that Shared-Memory Clusters (SMCs) can provide scads of processors and buckets full of memory, all while offering the programming benefits, and often the performance efficiency, of large SMPs. Some may add that SMCs don’t have the relatively hard data-sharing boundaries that network-based distributed-memory clusters have. From this they might ask: with a scale-out capability that flexible, and with the entire SMC being a form of SMP from a programming point of view, why would you care about the capacity of a single node?

Why indeed.

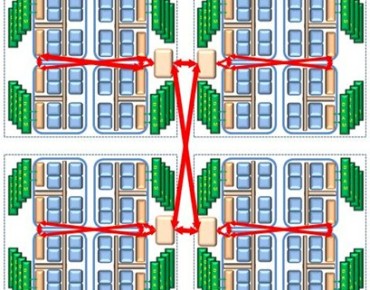

This is fair, up to a point, but sometimes you really do need capacity exactly where you want it to be. As we have seen in other articles of this series, SMCs should not be treated as vanilla SMPs. An increased probability of local access provides more capacity to the entire system and better execution speeds for individual tasks.

Let’s consider a case where you have intentionally created one particular hot node doing most of the processing, using the far memory of multiple nodes as just another level of the storage hierarchy, avoiding the need to instead access disk. You did not really need all of the SMC system’s compute capacity, but you did want the processors you were using to be close to each other. Our marketing friends – not knowing about NUMA like you do – may observe that any processor provides the same capacity and single-threaded performance as any other.

We also likely have a mental picture that any time a processor is being used by a task (a.k.a. a thread), all of the capacity of that processor is being consumed. No other task can be on that processor at the same time, so any other tasks are just waiting. Our mental picture would also show that any time a longer-latency access to far memory occurs instead of a shorter-latency local access, the executing task must stay on that processor for longer to complete its work. Picture this as far accesses consuming more processing capacity than local accesses.

This mental model is only partially correct, and indeed, mostly incorrect. Far accesses do take more time and so do slow the execution speed of individual tasks, but compute capacity is not proportionally consumed. This article is intended to explain that anomaly and help with identifying the trade-offs between distributed systems, large SMPs, and these newer SMCs.

We know that with network-based distributed-memory systems, the actual data movement between nodes is driven by a non-processor DMA (Direct Memory Access) engine. Processors tell that DMA engine to asynchronously read the data from its local node and transport it across a link. On the other side of that link, another DMA engine arranges to write that data into the memory of its own local node. Completion of both the read and write operations is signaled to the system processors via an interrupt generated by the DMA engine(s). Of course, the processors consume a fair amount of processing capacity setting up these DMA operations, but it is the DMA engine that waits on the memory accesses.
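As a loose software analogy only (this is not how a real DMA engine is programmed), the flow just described can be sketched in Python. The worker thread stands in for the DMA engine and the `threading.Event` stands in for the completion interrupt; `dma_copy`, `src`, `dst`, and `completion` are hypothetical names chosen for illustration.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

def dma_copy(src, dst):
    """Stand-in for a DMA engine: moves the bytes so the caller need not wait."""
    dst[:] = src
    return len(src)

src = bytearray(b"data in the local node's memory")
dst = bytearray(len(src))          # memory on the "other side of the link"
completion = threading.Event()     # plays the role of the completion interrupt

with ThreadPoolExecutor(max_workers=1) as engine:
    # The processor pays a setup cost here, then is free to do other work.
    transfer = engine.submit(dma_copy, src, dst)
    transfer.add_done_callback(lambda fut: completion.set())
    other_work = sum(range(10_000))  # work done while the copy is in flight
    completion.wait()                # the "interrupt" reports completion

bytes_moved = transfer.result()
```

The point of the analogy is only the division of labor: the submitting code spends effort on setup and on handling the completion, while something else does the waiting on memory.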

With SMCs we are often expecting the internode data movement to be driven synchronously by processors rather than asynchronous I/O-based data movers. As I will discuss later, occasionally these processors are pushing the data into some target node, which can have less of an impact on capacity. But, for this article, let’s have all of the internode accesses be pulls; pulls from a far node’s memory into the cache of a local processor.

We know that even a cache fill – a pull – from local memory into a cache can take hundreds of processor cycles. We also know that a cache fill from remote memory can take still longer. During these times, you can think of that processor as simply waiting. [It happens that this is not completely correct, but for simplicity in this discussion let’s agree it is true.] A processor doing nothing, nothing except keeping a task dispatched there, can still be perceived as consuming compute capacity. No work is being accomplished aside from waiting on the data of a cache fill. Now consider the wait for a cache fill from the longer-latency far memory; one could claim that the compute capacity lost is considerably larger as a result.

Fortunately, it is not.

Even before this additional wait on far memory, processor designers recognized that any cache miss to memory was a sizable loss of processor capacity; the processor was not executing instructions while other tasks might be waiting to do so. This became even more true as these very same processors became capable of executing multiple instructions per cycle. These designers also recognized that if, during such cache misses, a processor core were to execute a completely independent instruction stream of another task, that core could accomplish work on behalf of that other task, which would otherwise need to wait.

Making sure these cores get work done while waiting is the motivation behind simultaneous multithreading (SMT), which Intel calls Hyper-Threading.

Two or more threads can be dispatched to the same core, and these really do execute their independent instruction streams in parallel. When one pauses to wait on a cache fill, the other thread(s) essentially consume the compute capacity that would have been used by the now-waiting thread. Said differently, the threads still executing instructions effectively speed up during this short time.

So what does this mean for the extra latency normally associated with far storage accesses?

Each far storage access still does take longer; the response time of any thread making a far access is slowed as a result. But the core’s compute capacity during that longer period is nonetheless consumable by another thread. Response time for the one thread doing the far access is certainly impacted, but the compute capacity of that same processor core, and so of that same node, is still consumable. It does not matter if no other thread is dispatched to that core at that moment; in such a case, the capacity simply remains available.
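The distinction between response time and capacity can be made concrete with a deliberately crude model. The sketch below assumes each thread alternates between a fixed burst of computation and one cache fill, and that the core runs another ready thread whenever one stalls, with no cache contention between threads. Real SMT cores issue from several threads at once, so treat this as an idealization. The cycle counts (300, 200, 1000) and the name `core_model` are assumptions made up for illustration, not measurements.

```python
def core_model(threads, compute_cycles, fill_latency_cycles):
    """Idealized core: returns (busy_fraction, cycles_per_unit_of_work).

    Each thread repeats: compute for compute_cycles, then wait on one
    cache fill for fill_latency_cycles. While one thread waits, another
    ready thread may use the core.
    """
    period = compute_cycles + fill_latency_cycles   # one thread running alone
    demand = threads * compute_cycles               # compute wanted per period
    busy_fraction = min(1.0, demand / period)       # share of cycles doing work
    cycles_per_unit = max(period, demand)           # per-thread response time
    return busy_fraction, cycles_per_unit

# Hypothetical cycle counts, chosen only to illustrate the shape of the result.
COMPUTE = 300
LOCAL_FILL = 200
FAR_FILL = 1000

for threads in (1, 2, 4):
    for label, latency in (("local", LOCAL_FILL), ("far", FAR_FILL)):
        busy, response = core_model(threads, COMPUTE, latency)
        print(f"{threads} thread(s), {label}: core busy {busy:.0%}, "
              f"{response} cycles per unit of work")
```

Under these assumed numbers, a single thread making far accesses needs 1,300 cycles per unit of work instead of 500, yet it keeps the core busy only about 23 percent of the time. Four such threads keep the core about 92 percent busy while each still sees the same 1,300-cycle response time, which is the article's point: the far access costs response time far more than it costs capacity.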

As a bit of a warning shot, though, the measurement of CPU utilization on some operating systems does not take this available capacity into account. Some forms of measurement of CPU utilization merely indicate that a core is completely used (and so 100 percent utilized, 0 percent available) any time that even one thread is assigned to a core; having two or more executing, and so one or more executing during a cache miss, is not taken into account. The point is this: SMT-based capacity might well exist even when the CPU utilization says that the processors are 100 percent utilized.
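A small sketch shows how the two views of utilization can diverge. It assumes a hypothetical trace of one thread's state on a core, sampled at equal intervals, where each sample is `"exec"`, `"stall"` (waiting on a cache fill), or `"idle"` (nothing dispatched). The function names and the trace are illustrative only and do not correspond to any particular operating system's counters.

```python
def dispatch_based_utilization(states):
    """Counts the core as fully used whenever any thread is dispatched."""
    return sum(state != "idle" for state in states) / len(states)

def executing_utilization(states):
    """Counts only the samples in which instructions were actually executing."""
    return sum(state == "exec" for state in states) / len(states)

# Hypothetical trace: short bursts of execution separated by long far-memory waits.
trace = ["exec"] * 3 + ["stall"] * 10

reported = dispatch_based_utilization(trace)
executing = executing_utilization(trace)
print(f"reported utilization: {reported:.0%}")
print(f"actually executing:   {executing:.0%}")
print(f"potentially usable by another SMT thread: {reported - executing:.0%}")
```

With this made-up trace the dispatch-based measure reports the core as 100 percent utilized, even though the thread is executing in only 3 of the 13 samples. The remainder is the kind of capacity that another thread on the same core could consume.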

Articles In This Series:

Shared Memory Clusters: Of NUMA And Cache Latencies

After degrees in physics and electrical engineering, a number of pre-PowerPC processor development projects, a short stint in Japan on IBM’s first Japanese personal computer, a tour through the OS/400 and IBM i operating system, compiler, and cluster development, and a rather long stay in Power Systems performance that allowed him to play with architecture and performance at a lot of levels – all told about 35 years and a lot of development processes with IBM – Mark Funk entered academia to teach computer science. [And to write sentences like that, which even make TPM smirk.] He is currently professor of computer science at Winona State University. If there is one thing – no, two things – that this has taught Funk, they are that the best projects and products start with a clear understanding of the concepts involved and the best way to solve performance problems is to teach others what it takes to do it. It is the sufficient understanding of those concepts – and more importantly what you can do with them in today’s products – that he will endeavor to share.