VMware Takes On Hyperconvergence Upstarts With EVO:RAIL

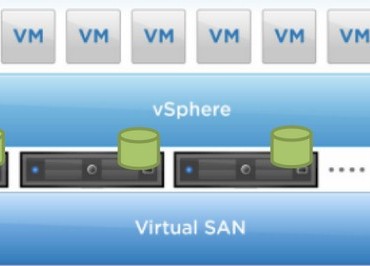

For the past several years, VMware has concentrated on turning server virtualization into clouds, and it left one of its flanks unguarded as it coped with the onslaught of CloudStack and OpenStack and the rise of Microsoft's Hyper-V and Red Hat's KVM as rival virtualization tools. That flank was virtualized and clustered storage, the kind that Nutanix, SimpliVity, Scale Computing, and others have cooked up to provide virtual storage area networks running on the same clusters as virtual machines and obviating the need for actual and usually expensive SANs – like the ones sold by VMware's parent company, EMC, for instance.

VMware saw the threat and reacted to it 18 months ago, Mornay Van Der Walt, vice president of emerging solutions, explains to EnterpriseTech. The resulting product, called EVO:RAIL and announced today at the VMworld extravaganza in San Francisco, is designed to blunt the attack of the rival hyperconvergers, who, oddly enough, usually run their server-storage hybrids atop VMware's own hypervisor.

Rumors have been going around about "Project Marvin" since late last year, and there has been much chatter about whether or not VMware would deliver its own hardware appliance running a hyperconverged software stack that tightly integrates virtual servers and virtual SAN software. VMware does not want to be in the hardware business, so this never seemed likely, but rumors about a "Project Mystic" hardware appliance created with EMC kept surfacing, and there were other rumors about "Project Starburst" appliances coming from other partners as well. As it turns out, they were all talking about the same product, which was simply given different code names for different hardware partners to create a Borg shield. It was Project Marvin all along, and VMware is most certainly not getting into the hardware business. It is, however, working with a slew of hardware partners to create a line of hyperconverged appliances that will run the EVO:RAIL software.

With the launch of Virtual SAN software last fall and its delivery this spring, VMware had most of the pieces to use as a foundation of its own hyperconverged platform. And as EnterpriseTech pointed out in the spring when the Project Marvin rumors were going around, if VMware didn't figure out how to glue its server and storage virtualization stacks together into a harmonious whole, someone else surely would. So 18 months ago, Van Der Walt was given the task of turning the idea of a hyperconverged VMware platform into a reality.

Hyperconvergence is a natural progression of server virtualization, and the EVO:RAIL name reflects this. The EVO is short for evolution, and the RAIL refers to the rails in a server rack that hold an enclosure. (It would have been truly funny if VMware called it vHyper, with the little v it usually has in front of product names and Hyper standing for hyperconverged. But Microsoft, which uses Hyper-V for its hypervisor name, probably would not have been amused. And even though everybody calls the storage software vSAN or VSAN, you will note that VMware always calls it Virtual SAN.) Server, storage, and switch technology has been converging for the past five years, so this is not a new idea. All of the tier one server makers were converging servers and switching with blade architectures, and Cisco Systems arguably pushed the integration a bit further more than five years ago with its Unified Computing System machines and their tight integration with VMware's ESXi hypervisor.

VMware has pretty much owned the market for X86 server virtualization among enterprise customers for the past decade, advancing its products with security and networking abstraction layers and cloud computing extensions to take on other cloud platforms. But it left its virtual storage flank exposed, and another breed of systems software makers has forged so-called hyperconverged systems, which bring virtual servers and virtual storage area networks together to run on the same X86 clusters. These upstarts are now attracting big gobs of venture capital and are on the hockey stick growth path, and they threaten VMware's hegemony in the datacenter.

The EVO:RAIL software stack starts with the ESXi hypervisor, which VMware hasn't mentioned by name in years but which is not only the heart of its vSphere stack but also where nearly all of the features of vSphere actually live. It also includes vCenter Log Insight, a log management and analysis tool that leverages machine-learning algorithms to offer proactive maintenance of virtual server clusters. A new piece of software, called the EVO:RAIL engine, orchestrates and automates the hyperconverged software. Its interface is written in HTML5 and has what Van Der Walt calls "anti-wizards," meaning that there is no Next or Continue or whatever button; you just tell it to do things and then they happen. The stack includes the Linux variant of the vCenter console for ESXi management, and vCenter runs on all of the nodes in the appliance. One key unlocks all of the software in the EVO:RAIL stack, and as nodes are added to a cluster, they are automatically discovered and added to the virtual server and storage pool.

"A kid should be able to deploy the EVO:RAIL and not know anything about virtualization," says Van Der Walt.

The stack also obviously includes the Virtual SAN software, which is buried inside of the ESXi 5.5 hypervisor that was launched last summer. There is no new ESXi version or release at this year's VMworld, which is a break with tradition and which just goes to show that the virtual compute and networking parts have matured and are now changing more slowly. VMware contends that by putting its Virtual SAN software inside of the hypervisor, it gains significant performance advantages over competitors, who have to run their virtual storage inside of guest partitions on the hypervisors they support. Time will tell if this is true or not, and no doubt once the EVO:RAIL stack is out there will be some bakeoffs that will give us some insight.

The Virtual SAN software that was previewed with ESXi 5.5, which came out in September last year, is being refreshed with Update 2 of the hypervisor. Update 1 came out in March of this year and Update 2 is debuting at VMworld with this set of announcements. As far as we know, VMware is not offering further scalability on Virtual SAN, which is technically what is called a distributed object store, and it can span up to 32 server nodes to deliver 4.4 PB of capacity on machines using 4 TB disk drives. That top-end Virtual SAN can push up to 2 million I/O operations per second (IOPS) on 100 percent random read workloads and about 640,000 on a mix of 70 percent random reads and 30 percent random writes on 4 KB file sizes. Such a 32 node cluster is capable of supporting about 3,200 virtual machines, VMware said earlier this year when Virtual SAN became generally available.

For whatever reason, and Van Der Walt did not explain, VMware is capping the EVO:RAIL hyperconverged platforms at 16 nodes instead of 32 nodes, and that means the cluster has the potential to drive 1 million IOPS on a completely random read workload and 320,000 IOPS on the 70/30 mixed workload with the appropriate disks and processors. Such a cluster could, in theory, host 2.2 PB of capacity using 4 TB SATA drives, and with 6 TB drives, you could push that up to 3.3 PB. That is a fair amount of capacity for a pod of 16 servers, and the odds are that most Virtual SAN customers are not going to push the capacity that hard. It is far more likely that they will add flash storage to disk storage in the server nodes and use the flash cache features of Virtual SAN to boost the performance of the distributed object store. Van Der Walt also strongly suggests that customers use 10 Gb/sec Ethernet links between the server nodes in any Virtual SAN setup to get respectable performance between the nodes, although you can link out to the outside world using slower Gigabit Ethernet links provided you have enough of them on the server nodes.
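The 16-node figures above are simply the 32-node Virtual SAN numbers cut in half, with capacity then scaled by drive size. A minimal Python sketch of that arithmetic follows; it assumes capacity and IOPS scale linearly with node count, which is how the article derives its numbers rather than a measured result, and the function and constant names are illustrative only.

```python
# Figures VMware quoted for a full 32-node Virtual SAN cluster (from the article).
FULL_CLUSTER_NODES = 32
FULL_CAPACITY_PB = 4.4             # raw capacity with 4 TB drives
FULL_RANDOM_READ_IOPS = 2_000_000  # 100 percent random reads
FULL_MIXED_IOPS = 640_000          # 70/30 random read/write mix
BASELINE_DRIVE_TB = 4


def scale_cluster(nodes, drive_tb=BASELINE_DRIVE_TB):
    """Scale the 32-node figures to a smaller cluster.

    Assumption: everything scales linearly with node count, and capacity
    also scales linearly with drive size. Real clusters may not behave so neatly.
    """
    node_fraction = nodes / FULL_CLUSTER_NODES
    drive_factor = drive_tb / BASELINE_DRIVE_TB
    return {
        "capacity_pb": FULL_CAPACITY_PB * node_fraction * drive_factor,
        "random_read_iops": FULL_RANDOM_READ_IOPS * node_fraction,
        "mixed_iops": FULL_MIXED_IOPS * node_fraction,
    }


if __name__ == "__main__":
    for drive_tb in (4, 6):
        est = scale_cluster(16, drive_tb)
        print(f"16 nodes, {drive_tb} TB drives: {est['capacity_pb']:.1f} PB, "
              f"{est['random_read_iops']:,.0f} read IOPS, "
              f"{est['mixed_iops']:,.0f} mixed IOPS")
```

Calling `scale_cluster(16)` reproduces the 2.2 PB, 1 million IOPS, and 320,000 IOPS figures, and `scale_cluster(16, 6)` gives the 3.3 PB estimate for 6 TB drives.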

Just because Virtual SAN can be pushed that hard on 16 nodes does not mean VMware is doing this with EVO:RAIL. In fact, the storage is relatively modest, and that is due in part to the relatively skinny server nodes it is using as a basis of the appliance.

The EVO:RAIL hardware is based on a 2U chassis and includes four half-width nodes in each enclosure. This is the only hardware configuration that VMware is allowing its hyperconverged franchise partners to use, so you can forget about running this on Cisco UCS blades or IBM (soon to be Lenovo) Flex System or Dell PowerEdge M or Hewlett-Packard Moonshot or BladeSystem machines. Supermicro's Twin2 servers fit the bill, and so does Dell's PowerEdge C6220. Cisco doesn't do multinode rack servers in its UCS C Series line, and IBM does not provide such boxes in its System x line, either. HP's SL2500 packs four nodes in a 2U chassis, so it can be an EVO:RAIL machine.

The suggested processor in each node is a six-core "Ivy Bridge" Xeon E5-2620 v2, and the node has to support up to 192 GB of main memory. The RAID disk controller in the enclosure has to support pass-through capabilities, which is a requirement of Virtual SAN, and VMware is similarly setting the flash and disk capacity and performance levels. As you can see, the EVO:RAIL appliances use 1.2 TB SAS disks spinning at 10K RPM to store data and a 400 GB solid state drive for use as a read/write cache for Virtual SAN. The boot drive for ESXi can be a 146 GB SAS drive spinning at 10K RPM or a 32 GB Serial ATA Disk on Module (SATADOM).

The scalability of the EVO:RAIL setup is not as impressive as Virtual SAN itself, scaling to about 14.4 TB of raw capacity and 13 TB of usable capacity per node based on the disk drives that VMware is prescribing. That is only 78 TB of capacity for the Virtual SAN spanning 16 nodes, and that is not very much capacity at all. Presumably VMware will not only create a 32-node variant of the EVO:RAIL that doubles the scalability up to 800 virtual machines for generic cloud workloads and 2,000 virtual desktops, but will also find fatter nodes with more disk capacity for storage-intensive workloads. The 1.2 TB drives are not going to cut it, and nodes are going to need a lot more drive bays for storing lots of data.

VMware is not providing specific pricing for the EVO:RAIL setup at the moment, and part of the reason is that it will not be generally available until later this year. (Precisely when is not clear; all VMware says is the second half of 2014, which we have been in for nearly two months already.)

"It will be priced competitively to the players that are in the space today, and we think we will be priced just right to compete in the hyperconverged market," Van Der Walt says, adding that including hardware, software, and support over a 36-month term, the numbers will work out to under 10 cents per hour per virtual machine.

VMware is not setting the pricing on the EVO:RAIL appliances, so it has to be a bit cagey about it. But if you work the math backwards on that, at 10 cents per hour, a 16-node appliance with 400 generic cloud virtual machines would cost just over $1 million. Presumably Van Der Walt did not mean the VDI images, which would push the price up to $2.6 million for the same setup. Even that would be too high for VMware. But that said, it is hard to figure how this even gets to $1 million. The vSphere Enterprise hypervisor bundle costs $2,875 per socket and vSphere Enterprise Plus costs $3,495 per socket. Virtual SAN costs $3,595 per socket. Take the worst case pricing here and you are talking about $7,090 per socket. The 16-node cluster running just these two pieces of software has a license and support cost that is close to $400,000 over three years. The rest is presumably made up in hardware, hardware support, and the EVO:RAIL engine and other VMware components to reach that $1 million figure. There is probably some margin in there for the hardware appliance partners, too.
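For readers who want to rerun the back-of-the-envelope math, here is a short Python sketch. The 10 cents per hour, 36-month term, 400 virtual machines, and per-socket list prices come from the article. The two-socket node count and the 25 percent annual support rate are assumptions made here purely for illustration, as is the 1,000-desktop figure, which is inferred by halving the 2,000 desktops cited for a hypothetical 32-node setup.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap days ignored


def three_year_cost_dollars(vm_count, cents_per_vm_hour=10, years=3):
    """Total cost implied by a per-VM hourly rate over the support term."""
    total_cents = vm_count * cents_per_vm_hour * HOURS_PER_YEAR * years
    return total_cents // 100


def license_and_support_dollars(nodes=16,
                                sockets_per_node=2,          # assumption
                                per_socket_list=3495 + 3595, # Enterprise Plus + Virtual SAN
                                annual_support_pct=25,       # assumption
                                years=3):
    """List-price licenses plus support for the hypervisor and Virtual SAN."""
    license_cost = nodes * sockets_per_node * per_socket_list
    support_cost = license_cost * annual_support_pct * years // 100
    return license_cost + support_cost


if __name__ == "__main__":
    print(f"400 cloud VMs:      ${three_year_cost_dollars(400):,}")
    print(f"1,000 VDI images:   ${three_year_cost_dollars(1000):,}")
    print(f"Licenses + support: ${license_and_support_dollars():,}")
```

Under those assumptions the licenses and support land just under $400,000, which leaves roughly $650,000 of the implied $1 million for hardware, hardware support, the EVO:RAIL engine, and partner margin.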

Looking ahead, VMware will add features to EVO:RAIL to federate pools of resources running in remote or branch offices so that they can be managed from the same console used back in the datacenter. Integration with VMware's Horizon View VDI broker did not happen with this release, but it is coming in the future. So, probably, is integration with the XenDesktop VDI broker from Citrix Systems, which is popularly deployed on top of ESXi, although Van Der Walt did not confirm this speculation on our part. We expect some high capacity and high performance variants of EVO:RAIL, and would not be surprised to see an all-flash variant as well as one with lots and lots of disks.