Scaled Storage Boosts Climate Modeling Efforts

Climate research projects based on big data analysis increasingly rely on big storage to track historical patterns and extrapolate future trends in a research area with countless variables. The open source nature of climate data has made access to huge volumes of archival data a key challenge for storage technology.

Hence, IBM and other storage vendors are frequently working with research institutes and commercial weather forecasters to get a handle on vast quantities of climate data. In the latest instance, IBM said it is working with the German Climate Computing Center to manage the world's largest climate simulation data archive.

The widely used archive currently holds 40 petabytes of climate data and is expected to grow by about 75 petabytes annually over the next five years, researchers said. As supercomputers are used to perform sophisticated climate simulations, the German climate center is using a High Performance Storage System (HPSS) developed by the U.S. Energy Department and IBM.

The system is designed to scale using varying storage devices, tape libraries and processors connected by local-, wide- and storage-area networks, IBM said. Along with a scalable datastore capability, I/O performance can be scaled to hundreds of terabytes a day.

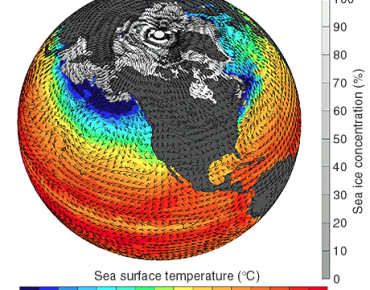

The German climate center's research focuses on complex simulations based on numerical models of the global climate system. Accurate climate simulations require ready access to huge datastores to compute the three-dimensional circulation of the atmosphere and ocean along with interactions with ice and land.

IBM said its storage system is capable of handling more than 500 petabytes of climate data.
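For a rough sense of how the reported figures fit together, the arithmetic can be sketched in a few lines of Python. The numbers are those cited above (40 petabytes today, about 75 petabytes of growth a year, more than 500 petabytes of capacity); the assumption of constant linear growth and the function names are illustrative only and do not come from IBM or the climate center.

```python
# Figures reported in the article, in petabytes.
CURRENT_PB = 40
GROWTH_PB_PER_YEAR = 75
CAPACITY_PB = 500


def projected_size_pb(years, start=CURRENT_PB, growth=GROWTH_PB_PER_YEAR):
    """Archive size after `years`, assuming constant linear growth (an assumption)."""
    return start + growth * years


def years_until_full(capacity=CAPACITY_PB, start=CURRENT_PB, growth=GROWTH_PB_PER_YEAR):
    """Years until the archive reaches `capacity` under the same linear assumption."""
    return (capacity - start) / growth


if __name__ == "__main__":
    for year in range(6):
        print(f"year {year}: {projected_size_pb(year)} PB")
    print(f"years until {CAPACITY_PB} PB: {years_until_full():.1f}")
```

Under that assumption the archive would reach 415 petabytes after five years, which sits below the 500-petabyte figure, and would hit that figure a little more than six years out.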

The upgrade of the German climate center’s Hierarchical Storage Management system includes x86 servers and the Red Hat Linux operating system running IBM's HPSS software. This setup manages simulation data and serves as the input and output interface between the center’s high performance computing systems and the tape storage library where climate data is stored, IBM said.

Also used is a disk cache of five petabytes of storage capacity capable of providing data access speeds of up to 12 gigabytes per second. That throughput will be upgraded to 18 gigabytes per second later this year, IBM said.
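To put those throughput numbers in daily terms, the conversion can be sketched as follows. It is a back-of-the-envelope calculation that assumes decimal units (1 petabyte = 1,000,000 gigabytes) and sustained peak throughput around the clock, neither of which is stated in the source; the function names are illustrative.

```python
SECONDS_PER_DAY = 86_400
GB_PER_PB = 1_000_000  # decimal units, an assumption


def pb_per_day(gb_per_second):
    """Data moved in one day at a sustained rate, in petabytes."""
    return gb_per_second * SECONDS_PER_DAY / GB_PER_PB


def days_to_transfer(petabytes, gb_per_second):
    """Days needed to move `petabytes` at a sustained rate."""
    return petabytes / pb_per_day(gb_per_second)


if __name__ == "__main__":
    for rate in (12, 18):  # current and planned cache throughput, GB/s
        print(f"{rate} GB/s: {pb_per_day(rate):.2f} PB/day, "
              f"{days_to_transfer(5, rate):.1f} days to move 5 PB")
```

At 12 gigabytes per second the cache could in principle move about one petabyte a day, so cycling its full five petabytes would take close to five days; at 18 gigabytes per second that drops to a little over three days.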

The IBM-Energy Department storage system was developed over the last two decades under a program with U.S. weapons labs managed by the Energy Department. Among other applications, the labs relied on supercomputers and large data storage systems to simulate nuclear weapons tests.

Among the simulation, modeling and visualization projects conducted by the German climate center is high-definition modeling of cloud formation and precipitation, which is used to improve the accuracy of weather and climate prediction. Indeed, the U.S. is playing catch-up when it comes to developing more accurate forecasting models. The U.S. Air Force announced recently it was adopting a U.K. forecasting model as a way to improve weather prediction and save operational costs.

IBM, along with Amazon Web Services, Google Cloud Platform, Microsoft and the Open Cloud Consortium, recently signed on to a U.S. project sponsored by the National Oceanic and Atmospheric Administration to develop the computing and storage infrastructure needed to share access to more than 20 terabytes of satellite weather data collected each day. Such efforts could help boost the forecasting accuracy of U.S. weather models. Supercomputing and scaled storage capabilities will go a long way toward improving forecasting as the number of extreme weather events increases.

George Leopold has written about science and technology for more than 30 years, focusing on electronics and aerospace technology. He previously served as executive editor of Electronic Engineering Times. Leopold is the author of "Calculated Risk: The Supersonic Life and Times of Gus Grissom" (Purdue University Press, 2016).