QNIB’s Kniep: Docker Disruption, Containers in HPC, and ISC

As more enterprises tie their datacenters to Linux containers, interest in market juggernaut Docker grows. Indeed, more than 70 percent currently use or are evaluating Docker, according to StackEngine's 2015 "State of Containers" report. Only 7 percent of the virtualization and cloud professionals surveyed had never heard of it, the study found.

It's not all good news, however. Enterprises voice concern over the security model and a lack of operational tools for production, according to the report. While many organizations have adopted Docker or containers, they often restrict them to development or quality assurance. Increasingly, though, enterprises plan to move containers into production and need experts to implement these plans.

That's one reason ISC Cloud & Big Data expects an enthusiastic turnout for the conference's Docker workshop, taking place on Sept. 28 from 2:00 pm to 6:00 pm. The event, held at the Marriott Hotel in Frankfurt, runs from Sept. 28 to Sept. 30, with keynotes from Dr. Jan Vitt of DZ Bank (who recently was interviewed by EnterpriseTech) and Professor Peter Coveney of University College London. In preparation for the upcoming conference and show, ISC Events sat down with Christian Kniep, the HPC architect for QNIB Solutions, who is co-organizer of and a speaker at the Docker workshop.

Following are excerpts from the interview between ISC and Kniep:

ISC: Can you give us a high-level description of what Linux containers do and how they work?

Christian Kniep: Linux containers are groups of processes that are not able to see, let alone interact with, processes and their resources (mounts, network, etc.) outside the scope of the group itself. They communicate with the outside solely via system calls issued against the host kernel. The implementations leverage namespaces inside the Linux kernel, which allow efficient scheduling and demarcation of resources. Early implementations such as Jails (BSD), Zones (Solaris), and OpenVZ ran copies of the host system's user-land (libraries and binaries). The recent wave of Linux containers took this approach a step further by allowing different Linux flavors to run on top of any host system.
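The namespace membership Kniep describes is visible from user space: on Linux, each process exposes one symlink per namespace under /proc/<pid>/ns, and two processes share a namespace when those links point to the same inode. The following is a minimal sketch under that assumption, Linux only; the helper names parse_ns_link, namespaces_of, and shared_namespaces are hypothetical.

```python
import os

def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a link target such as 'net:[4026531840]' into ('net', 4026531840)."""
    kind, _, rest = target.partition(":")
    return kind, int(rest.strip("[]"))

def namespaces_of(pid: str = "self") -> dict[str, int]:
    """Map namespace entry name -> inode number for a process (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return {}  # e.g. another user's process without sufficient privileges
    result = {}
    for name in names:
        try:
            _kind, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        except OSError:
            continue  # entry not readable for this user
        result[name] = inode
    return result

def shared_namespaces(a: dict[str, int], b: dict[str, int]) -> set[str]:
    """Namespace entries in which two processes see the same view."""
    return {k for k in a.keys() & b.keys() if a[k] == b[k]}

if __name__ == "__main__" and os.path.isdir("/proc/self/ns"):
    me = namespaces_of("self")
    init = namespaces_of("1")  # PID 1 of the current PID namespace
    print("shared with PID 1:", sorted(shared_namespaces(me, init)))
```

Run inside a container, a process typically shares fewer namespaces with the host's init than a plain host process does; the exact set of entries depends on the kernel version.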

By using overlaid file systems, Linux containers furthermore provide a layered user-land, which enables packaging and distribution of container images that is more flexible and efficient than ever before.
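The layered user-land can be modeled as a stack of path-to-content maps searched from the top down: the first layer that mentions a path wins, and a deletion marker in an upper layer hides the file below it. This is a simplified, in-memory sketch, not how any particular union file system is implemented; the WHITEOUT marker, the Layer alias, and the example file contents are hypothetical.

```python
from typing import Optional

# Hypothetical marker for "this path was deleted in this layer".
# Real union file systems record deletions with special whiteout entries.
WHITEOUT = object()

Layer = dict[str, object]  # path -> file content (simplified)

def resolve(layers: list[Layer], path: str) -> Optional[object]:
    """Look up `path` in a stack of layers ordered topmost first."""
    for layer in layers:
        if path in layer:
            entry = layer[path]
            # A whiteout in an upper layer hides copies in lower layers.
            return None if entry is WHITEOUT else entry
    return None  # not present in any layer

def merged_view(layers: list[Layer]) -> dict[str, object]:
    """Compute the merged file tree the container would see."""
    view: dict[str, object] = {}
    for layer in reversed(layers):  # apply the bottom layer first
        for path, entry in layer.items():
            if entry is WHITEOUT:
                view.pop(path, None)
            else:
                view[path] = entry
    return view

# Example stack: a base image, an application layer, and a config layer.
base = {"/etc/os-release": "CentOS 7", "/usr/bin/python": "python 2.7"}
app = {"/opt/app/run.sh": "#!/bin/sh ...", "/usr/bin/python": WHITEOUT}
config = {"/etc/app.conf": "threads=16"}
stack = [config, app, base]  # topmost first
```

Because lower layers are never modified, the same base layer can be shared by many images and only has to be stored once.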

ISC: What are their basic advantages compared to hypervisors and what makes the technology especially applicable to HPC?

Kniep: Traditional virtualization emulates a complete hardware interface, and the guest follows the same boot process as a physical machine (BIOS->MBR->Kernel->Init-System->...), so booting takes a fair amount of time. Containers, on the other hand, are simply forked with some special flags and can therefore be started and stopped within a fraction of a second. By using an overlaid file system, images are distributed far more efficiently than in the old-fashioned way of exporting a virtual machine as one big binary blob and pushing it over the wire. If only the uppermost layer changes (e.g., a configuration change or a package installation), only this layer has to be transferred. Another important aspect, certainly within the HPC community, is that containers do not introduce a hypervisor and therefore carry no hypervisor performance penalty. Quite the opposite: since the user-land within the container can be optimized for a specific workload, it can even beat bare-metal performance, as multiple studies have shown in the past.
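The point that only a changed top layer travels over the wire follows from addressing layers by a hash of their content: the sender compares digests and skips any layer the receiver already holds. A minimal sketch of that idea, assuming SHA-256 content addressing over raw layer bytes (real registries hash packaged layer archives, not strings like these); the function names and example layers are hypothetical.

```python
import hashlib

def digest(layer: bytes) -> str:
    """Content address of a layer: identical bytes yield identical digests."""
    return "sha256:" + hashlib.sha256(layer).hexdigest()

def layers_to_push(local_layers: list[bytes], remote_digests: set[str]) -> list[str]:
    """Digests of local layers (bottom to top) that the remote side lacks."""
    return [d for d in map(digest, local_layers) if d not in remote_digests]

# Version 1 of an image has already been pushed to the remote registry.
v1 = [b"base OS files", b"MPI and math libraries", b"app config v1"]
registry = {digest(layer) for layer in v1}

# Version 2 only changes the uppermost (configuration) layer.
v2 = [b"base OS files", b"MPI and math libraries", b"app config v2"]
```

Here layers_to_push(v2, registry) returns a single digest, the one for the new configuration layer, while the two unchanged lower layers are skipped.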

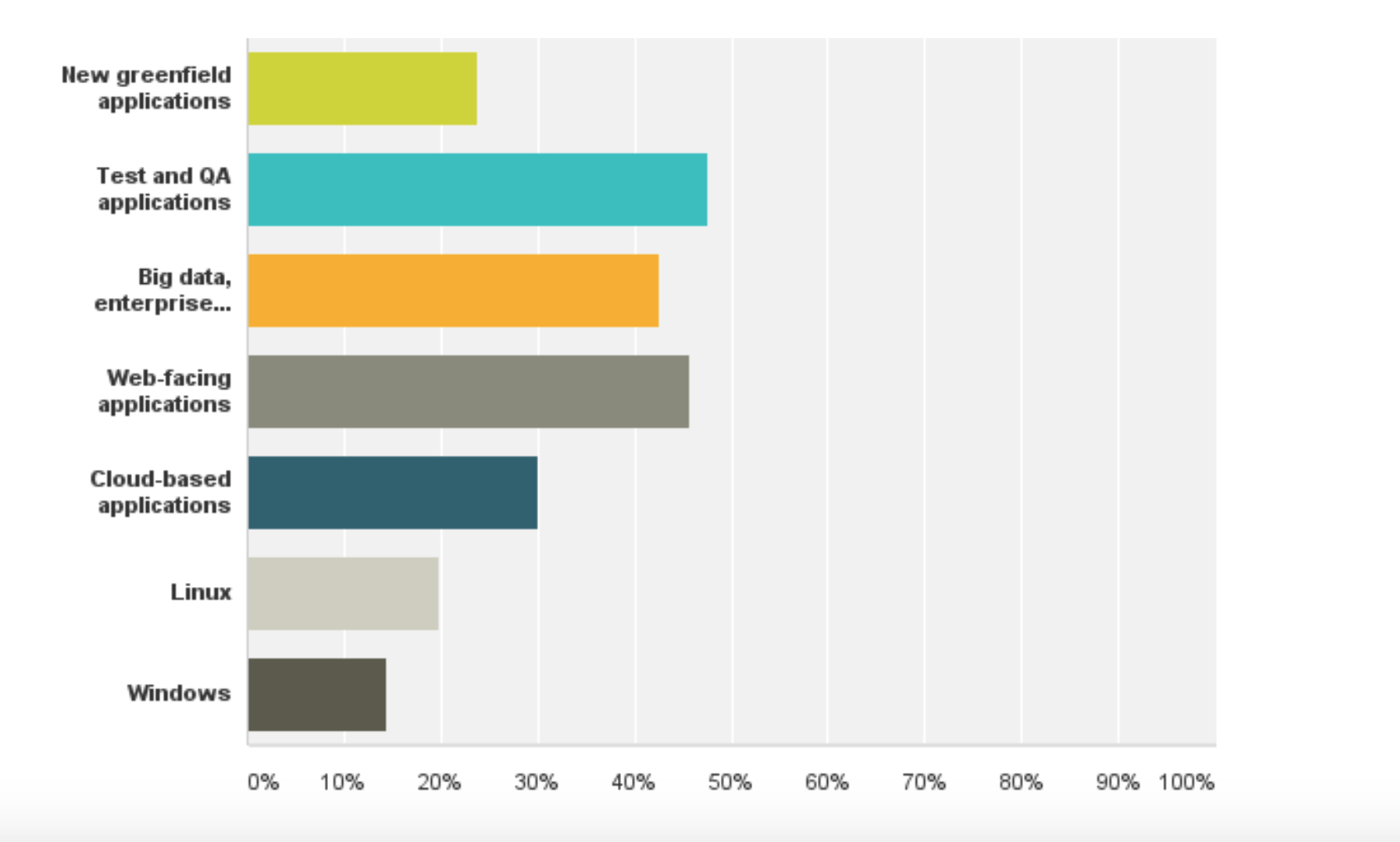

Where are you using or planning to use Docker over the next year? (Check all that apply)

(Source: StackEngine)

ISC: Containers were almost unknown outside the computer research community until last year. Why do you think the technology has generated so much interest so quickly?

Kniep: The momentum Docker in particular has gained is outstanding, and it illustrates how well open, use-case-driven development can thrive. By focusing on the main objectives, incorporating feedback fast, and not hesitating to set aside corner cases and aspects like security in the beginning (while being open about it), the project moved at an unbelievable pace. Docker was driven by the need to squeeze as many encapsulated services as possible onto each (rented) machine, and this is what led to the use-case-driven development we enjoy today.

ISC: Do you think the availability of containers will change the way HPC cloud infrastructure is built and how it is used?

Kniep: The encapsulation of different services or applications will affect both private on-premise clouds and public ones with multi-tenant access. Cloud infrastructure is becoming a workload-independent commodity. Once HPC workloads are encapsulated in Linux containers, the actual place where they are processed becomes irrelevant.

Granted, the Data Gap and other barriers have to be overcome for this to be the case, as the UberCloud project, for example, has pointed out. I expect these barriers to be out-maneuvered one use case at a time. The TCO (and paper trail) of on-premise infrastructure versus on-demand resources is a strong driver of change and will push these use cases into the cloud. Container adoption is already strong in software development and the hyper-scale world, since containers provide incredible iteration speed and scaling options. The compute part will follow.

ISC: Can containers be used outside conventional cloud environments – for example, to provide a virtual software environment for an application running beside native applications running on a regular cluster?

Kniep: They certainly can and will! In particular, providing a reproducible, accountable environment is one of the main drivers behind Linux containers. This new approach will make deploying an HPC environment as easy as using an official container image (e.g., one provided by the ISV) or creating a custom one. Gone are the days when all users and operations staff had to settle for a lowest common denominator of libraries installed on the bare-metal compute nodes.

Operations and compute are becoming independent of each other, which is going to be a huge win in itself. The old promise of traditional virtualization, an environment that spans from a laptop to a full-fledged cluster, is closer than ever before.

ISC: Will Docker be the de facto standard for Linux containers or are there other implementations that could emerge as worthy contenders?

Kniep: Docker is currently the implementation with the most momentum among all contenders. Since it made containers easy to pack, distribute, and run on all Linux distributions, it certainly deserves the spotlight. Prior variants (like LXC) used to be cumbersome to manage. But history has taught the community that competition alongside cooperation is a booster for technology. Docker recently released its core engine and teamed up with its second most popular competitor, CoreOS (among others), to form the Open Container Initiative, which aims to standardize the building blocks of Linux containers. It's hard to predict what the ecosystem will look like even in the short term, but the fundamentals will stick: kernel namespaces to isolate, and cgroups (which I have not even touched on) to restrict resource use. How containers are orchestrated at scale, what other namespaces will emerge (Mellanox recently pushed an RDMA namespace to allow more fine-grained use of InfiniBand hardware), and which implementation will fit the different use cases out there remains to be seen.
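Kniep only mentions cgroups in passing. On a Linux host, the kernel reports a process's cgroup membership in /proc/<pid>/cgroup, one line per hierarchy in the form hierarchy-ID:controller-list:cgroup-path (documented in the cgroups(7) man page), and under cgroup v2 a memory limit is read from a memory.max file that holds either a byte count or the string max. The sketch parses both formats; the function names are hypothetical and it assumes a Linux /proc.

```python
import os
from typing import Optional

def parse_proc_cgroup(text: str) -> list[tuple[int, list[str], str]]:
    """Parse /proc/<pid>/cgroup lines of the form 'hierarchy-ID:controllers:path'."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        # maxsplit=2 keeps any ':' inside the cgroup path intact.
        hierarchy, controllers, path = line.split(":", 2)
        # cgroup v2 uses hierarchy ID 0 and an empty controller list.
        entries.append((int(hierarchy), [c for c in controllers.split(",") if c], path))
    return entries

def parse_memory_max(value: str) -> Optional[int]:
    """Interpret a cgroup v2 memory.max value; 'max' means no limit."""
    value = value.strip()
    return None if value == "max" else int(value)

if __name__ == "__main__" and os.path.exists("/proc/self/cgroup"):
    with open("/proc/self/cgroup") as f:
        for hierarchy, controllers, path in parse_proc_cgroup(f.read()):
            print(hierarchy, controllers or "(v2 unified)", path)
```

A container runtime creates one such cgroup per container and writes limits like memory.max into it; the namespaces keep the processes apart, while the cgroup caps what they may consume.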


Managing editor of Enterprise Technology. I've been covering tech and business for many years, for publications such as InformationWeek, Baseline Magazine, and Florida Today. A native Brit and longtime Yankees fan, I live with my husband, daughter, and two cats on the Space Coast in Florida.