The Case for ‘Inexactness’ in Supercomputing

Scientific computing must become less rigid and less power-hungry as researchers seek new ways to model and understand the real world, a climatologist argues.

In a commentary published this week in the journal Nature, climate researcher Tim Palmer asserted that "we should question whether all scientific computations need to be performed deterministically — that is, always producing the same output given the same input — and with the same high level of precision. I argue that for many applications they do not."

Furthermore, he argued that excessive power demands would slow the emergence of exascale computing.

"The main obstacle to building a commercially viable 'exascale' computer is not the flop rate itself but the ability to achieve this rate without excessive power consumption," Palmer noted, citing preliminary estimates that exascale machines would consume about 100 megawatts, or the output of a small power station. Hence, a key challenge is making exascale computers more energy efficient.

Palmer, a research professor of climate physics and co-director of the Oxford Martin Program on Modeling and Predicting Climate at the University of Oxford, predicted "substantial" demand for new supercomputer designs that combine conventional processors with "low-energy, non-deterministic processors, able to analyze data at variable levels of precision."

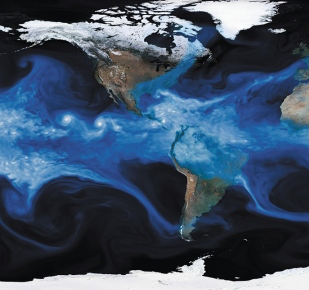

The growing computing requirements of climate modeling are a case in point. Researchers are seeking ever-higher resolution, or detail, in their climate models. Palmer noted that current climate simulations can resolve large weather systems but not individual clouds. That gap illustrates the level of detail researchers are seeking as they struggle to understand the dynamics of climate change.

Resolution is a function of available computing power. Next-generation exaflop supercomputers will be able to resolve large thunderstorms, Palmer noted, but "cloud physics on scales smaller than a grid cell will still have to be approximated, or parameterized, using simplified equations."

Hence, the researcher concluded that power-hungry exascale computers with 64-bit precision may be overkill for climate modelers and other researchers. "It is a waste of computing and energy resources to use this precision to represent smaller-scale circulations approaching the resolution limit of a climate model," Palmer argued.
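
To make the precision argument concrete, here is a minimal sketch in Python. It is not taken from Palmer's commentary: the toy signal, its amplitudes, and the helper name `round_to_half` are all assumptions chosen for illustration. A large-scale wave is kept at 64-bit precision while a much smaller ripple is rounded to 16-bit half precision before the two are combined.

```python
import math
import struct


def round_to_half(x: float) -> float:
    """Round a 64-bit Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# Hypothetical toy field: a large-scale wave plus a small-scale ripple.
n = 256
large = [10.0 * math.sin(2 * math.pi * i / n) for i in range(n)]
small = [0.1 * math.sin(2 * math.pi * 32 * i / n) for i in range(n)]

# Reference: everything in 64-bit precision.
exact = [a + b for a, b in zip(large, small)]
# Mixed: only the small-scale component is stored at 16-bit precision.
mixed = [a + round_to_half(b) for a, b in zip(large, small)]

max_err = max(abs(e - m) for e, m in zip(exact, mixed))
print(f"largest absolute error in the combined field: {max_err:.2e}")
print(f"relative to the large-scale amplitude: {max_err / 10.0:.2e}")
```

In this toy setup the rounding error stays several orders of magnitude below the amplitude of the large-scale wave. Whether a comparable error is tolerable in a real climate model depends on how uncertain the parameterized small-scale physics already is, which is the question the commentary raises.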

"Inexact hybrid computing has the potential to aid modeling of any complex nonlinear multi-scale system."

The drive for exascale computing based on an energy-intensive, "deterministic" approach also may be creating bottlenecks. Palmer suggested that one way around this was to estimate how much "computational inexactness and indeterminism" could be tolerated in new supercomputer designs.

Supercomputer manufacturers driven by commercial pressures "should begin by marketing processors and scientific computing libraries that make efficient use of mixed-precision representations of real-number variables," Palmer concluded. "We must embrace inexactness if that allows a more efficient use of energy and thereby increases the accuracy and reliability of our simulations."
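
One common mixed-precision pattern that such libraries could expose is to store values at low precision while accumulating at high precision. The sketch below is an illustration under stated assumptions, not an API from any vendor library or from the commentary: it emulates 32-bit storage with Python's standard `struct` module, and the names `round_to_single`, `sum_single`, and `sum_mixed` are hypothetical.

```python
import struct


def round_to_single(x: float) -> float:
    """Round a 64-bit Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def sum_single(values):
    """Accumulate in emulated 32-bit precision: round after every addition."""
    total = 0.0
    for v in values:
        total = round_to_single(total + v)
    return total


def sum_mixed(values):
    """Accumulate 32-bit stored values in a 64-bit running total."""
    total = 0.0
    for v in values:
        total += v
    return total


# Hypothetical data: 100,000 copies of 0.1, each stored at 32-bit precision.
n = 100_000
stored = [round_to_single(0.1)] * n
target = n * 0.1

err_single = abs(sum_single(stored) - target)
err_mixed = abs(sum_mixed(stored) - target)
print(f"32-bit storage, 32-bit accumulation: error {err_single:.3e}")
print(f"32-bit storage, 64-bit accumulation: error {err_mixed:.3e}")
```

The data occupy the same amount of memory in both cases; only the running total is kept at higher precision. That is the kind of trade-off a mixed-precision library would let a modeler choose deliberately rather than paying for 64 bits everywhere.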

George Leopold has written about science and technology for more than 30 years, focusing on electronics and aerospace technology. He previously served as executive editor of Electronic Engineering Times. Leopold is the author of "Calculated Risk: The Supersonic Life and Times of Gus Grissom" (Purdue University Press, 2016).