Volatile Energy Sector Eyes ‘Information Fusion’

Given the current state of the global energy sector, including the nation's largest coal mining company filing for bankruptcy protection this week, no operator can afford to drill a dry well. With stakes that high, the oil and gas industry is embracing new HPC techniques that now include information fusion and metadata analytics.

Practitioners of these approaches speaking at the recent Strata+Hadoop World conference defined information fusion as "providing contextual insight on fast, streaming data and big, static data by enabling metadata analytics." As advanced sensor networks are deployed across the industrial Internet of Things (IoT), experts note, Hadoop and Spark are among the primary tools for developing next-generation fusion systems.
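To make that definition concrete, here is a minimal sketch of "contextual insight" in its simplest form: each fast-arriving sensor reading is enriched with slower-changing static attributes of the well it came from. The record fields (`pressure_psi`, `field_name`, `lithology`) and the `fuse` helper are hypothetical illustrations, not part of any vendor's platform.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional

@dataclass(frozen=True)
class WellInfo:
    """Static, slowly changing context for one well (hypothetical fields)."""
    well_id: str
    field_name: str
    lithology: str

@dataclass(frozen=True)
class Reading:
    """One fast-arriving sensor reading (hypothetical fields)."""
    well_id: str
    timestamp: float
    pressure_psi: float

def fuse(stream: Iterable[Reading],
         static: dict[str, WellInfo]) -> Iterator[dict]:
    """Enrich each streaming reading with static context, keyed on well_id."""
    for r in stream:
        info: Optional[WellInfo] = static.get(r.well_id)
        yield {
            "well_id": r.well_id,
            "timestamp": r.timestamp,
            "pressure_psi": r.pressure_psi,
            # None signals a reading with no matching static record
            "field_name": info.field_name if info else None,
            "lithology": info.lithology if info else None,
            "has_context": info is not None,
        }
```

Because `fuse` is a generator, it can consume an unbounded stream while the static table stays in memory; at cluster scale the same lookup would typically become a stream-static join in an engine such as Spark.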

But there's a rub: "Much of seismic analysis involves data formats and algorithms that do not lend themselves to modern parallel architectures," noted Brian Clark, vice president of project management at Objectivity Inc., and Marco Ippolito, a data model architect with Paris-based geophysical services company CGG GeoSoftware.

"By adopting technology to support a parallel seismic format and a parallel access pattern, a Lambda-compliant framework could provide the ability to leverage all available data and perform more analysis in less time, thereby achieving more accurate scientific results," Clark and Ippolito asserted.
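What a "parallel access pattern" buys can be sketched under one simplifying assumption: if every seismic trace is stored as a fixed-length record, any worker can compute a trace's byte offset directly and process it without scanning the file. The layout below (four little-endian 32-bit floats per trace) and all function names are hypothetical; this is not the partners' format.

```python
import struct
from concurrent.futures import ThreadPoolExecutor

SAMPLES_PER_TRACE = 4                       # hypothetical; real traces hold far more samples
TRACE_FMT = f"<{SAMPLES_PER_TRACE}f"        # little-endian 32-bit floats (assumed layout)
TRACE_BYTES = struct.calcsize(TRACE_FMT)    # every trace record has the same size

def pack_traces(traces: list[list[float]]) -> bytes:
    """Store traces back to back as fixed-length records."""
    return b"".join(struct.pack(TRACE_FMT, *t) for t in traces)

def read_trace(buf: bytes, index: int) -> tuple[float, ...]:
    """Random access: trace i starts at byte i * TRACE_BYTES, so no scan is needed."""
    return struct.unpack_from(TRACE_FMT, buf, index * TRACE_BYTES)

def rms(samples: tuple[float, ...]) -> float:
    """Root-mean-square amplitude of one trace."""
    return (sum(s * s for s in samples) / len(samples)) ** 0.5

def parallel_rms(buf: bytes, workers: int = 4) -> list[float]:
    """Hand each trace index to a worker that reads and reduces it independently.

    Threads stand in for cluster nodes here; under CPython's GIL this shows
    the independent access pattern rather than a real speedup.
    """
    n_traces = len(buf) // TRACE_BYTES
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda i: rms(read_trace(buf, i)), range(n_traces)))
```

The design choice that matters is the fixed record size: it turns "find trace i" into arithmetic, which is what lets many readers work on one dataset at once.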

The Lambda-based approach, which pairs a batch layer (built on MapReduce, for example) with a stream-processing layer for newly arriving data, could be used to explore data from well sensors and other industrial IoT devices, they noted.
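The division of labor in a Lambda architecture can be sketched in a few lines. This is a toy, single-process illustration assuming hypothetical per-well production volumes: a batch layer periodically recomputes totals from the full history, a speed layer tracks only what has arrived since, and queries merge the two. In a real deployment the batch job would run on MapReduce or Spark rather than a Python loop.

```python
from collections import defaultdict
from typing import Iterable

def batch_view(history: Iterable[tuple[str, float]]) -> dict[str, float]:
    """Batch layer: recompute per-well totals from the full, immutable history.

    In a Lambda deployment this is the job MapReduce (or Spark) would run periodically.
    """
    totals: dict[str, float] = defaultdict(float)
    for well_id, volume in history:
        totals[well_id] += volume
    return dict(totals)

class SpeedLayer:
    """Speed layer: incrementally track readings that arrived since the last batch run."""

    def __init__(self) -> None:
        self.recent: dict[str, float] = defaultdict(float)

    def ingest(self, well_id: str, volume: float) -> None:
        self.recent[well_id] += volume

    def reset(self) -> None:
        # Called once a fresh batch view has absorbed these readings
        self.recent.clear()

def query(well_id: str, batch: dict[str, float], speed: SpeedLayer) -> float:
    """Serving layer: merge the precomputed batch view with the real-time increment."""
    return batch.get(well_id, 0.0) + speed.recent.get(well_id, 0.0)
```

The batch view stays simple and recomputable, while the speed layer only has to be correct for the short window before the next batch run.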

The parallel seismic use case faces familiar big data challenges: on the business side, growing data volumes and a proliferation of data formats; on the technical side, what Clark and Ippolito called "decimated" datasets resulting from those growing volumes. Other technical challenges for the oil and gas sector include limited bandwidth between data storage and processing, along with "noise" in the expanding big data vendor market.

Those challenges underscore the need for a Lambda-based stream processing architecture that leverages Spark Streaming, for example, to deliver sensor metadata to a big data analytics platform. Moreover, the Lambda architecture would be designed to run on existing hardware clusters to offset the rising costs associated with growing data volumes.

Meanwhile, information fusion represents the third generation of fusion systems, following data fusion and then sensor fusion. The industrial IoT, social media and the proliferation of sensor networks and unstructured data are driving the transition to information fusion.

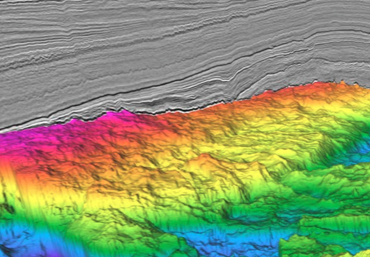

The ingredients of information fusion in the oil and gas sector include seismic data, lithology, geology (including "rock physics") and other metrics used to produce well production forecasts. Metadata such as time, geo-location and data quality, along with errors and anomalies, are extracted to improve those forecasts.
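The metadata categories named above (time, geo-location, data quality, errors and anomalies) can be illustrated with a small summarizer over a batch of sensor samples. The `Sample` schema, the completeness measure and the z-score anomaly rule are all assumptions chosen for illustration; the partners' actual extraction methods are not described.

```python
import statistics
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Sample:
    """One sensor sample; the field layout is a hypothetical example."""
    timestamp: float          # seconds since start of acquisition
    lat: float                # degrees
    lon: float                # degrees
    value: Optional[float]    # None marks a dropped or unreadable sample

def extract_metadata(samples: list[Sample], z_threshold: float = 3.0) -> dict:
    """Summarize a non-empty batch of samples as time, location, quality and anomaly metadata."""
    valid = [s for s in samples if s.value is not None]
    values = [s.value for s in valid]
    anomaly_times: list[float] = []
    if values:
        mean = statistics.fmean(values)
        stdev = statistics.pstdev(values)
        if stdev > 0:
            # Flag samples more than z_threshold population standard deviations from the mean
            anomaly_times = [s.timestamp for s in valid
                             if abs(s.value - mean) / stdev > z_threshold]
    return {
        "time_range": (min(s.timestamp for s in samples),
                       max(s.timestamp for s in samples)),
        "centroid": (statistics.fmean(s.lat for s in samples),
                     statistics.fmean(s.lon for s in samples)),
        "completeness": len(valid) / len(samples),   # share of readable samples
        "error_count": len(samples) - len(valid),
        "anomaly_times": anomaly_times,
    }
```

A simple z-score is used only because it is easy to follow; a production system would likely need rules tuned to each sensor type.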

The partners said their goal is "high-performance distributed database platforms for relationship discovery." The information fusion platform delivering metadata analytics would draw on decades of experience working with data volumes of a petabyte or more, along with Objectivity's Spark-based architecture, called ThingSpan.

Objectivity said its distributed information fusion platform combines Apache Spark and other open source big data technologies with its object data modeling technology to help boost well production.

The partners also aim to leverage the emerging information fusion approach as a way to bring a semblance of certainty to an energy sector that they stress will remain "volatile."


George Leopold has written about science and technology for more than 30 years, focusing on electronics and aerospace technology. He previously served as executive editor of Electronic Engineering Times. Leopold is the author of "Calculated Risk: The Supersonic Life and Times of Gus Grissom" (Purdue University Press, 2016).