No Waiting: ANSYS Adds Cycle Orchestration for Enterprise Cloud HPC

The waiting is the hardest part.

When design engineers need to run complex simulations, too often they find that the HPC resources required for those workloads are already taken. The problem: most on-prem data centers are provisioned for steady-state needs, not peak demand. When demand increases and HPC resources aren’t available, the engineer puts in a request with the job scheduler. Here, to paraphrase the opening of “Casablanca,” the fortunate ones, through money, or influence, or luck, might obtain access to HPC resources. But the others wait in scheduling limbo. And wait, and wait, and wait….

Now ANSYS, the popular CAE software vendor whose users increasingly turn to HPC for complex simulation workloads, has partnered with Cycle Computing and its CycleCloud software to leverage dynamic cloud capacity and auto-scaling. CycleCloud will provide HPC orchestration for ANSYS’s Enterprise Cloud HPC offering, an engineering simulation platform delivered on Amazon Web Services. CycleCloud enables cloud migration of CAE workloads requiring HPC, including storage and data management and access to resources for interactive and batch execution that scales on demand.

According to ANSYS, more customers are turning to the cloud as the locale for the full simulation and design life cycle.

“We have periods when we have a need for many more cores than our data centers can manage,” Judd Kaiser, ANSYS cloud computing program manager, told EnterpriseTech. “Or we’re moving to increasingly variable workloads and we're looking to cloud now as a possible solution. On the other end, we have customers who are growing into HPC, who’d like to take advantage of HPC, but building a data center isn’t their core business, so they want to know how they can use cloud to their advantage.”

Cycle addresses both needs, he said.

“We didn’t have much experience in provisioning cloud resources and managing HPC on cloud infrastructures, and that’s what Cycle brought to the table,” he said. “ANSYS Enterprise Cloud is intended to be a virtual simulation data center; it just happens to be backed on public cloud hardware. It means we can provision for a customer and they can have it up and running next week, serving the needs of dozens of engineers running very large workloads. If that same customer asked us for a recommendation of what we need for a data center, from specs for the system, to ordering the hardware, to rack and stack and installing software and rolling it out to the engineers, that typically takes many months.”

CycleCloud is intended to ensure optimal AWS Spot instance usage and that appropriate resources are used for the right amount of time in the ANSYS Enterprise Cloud. With CycleCloud handling auto-scaling, he explained, “the engineer submits a job to the cluster…and the cluster scales up to meet the demands of the job. So resources are provisioned specifically to serve the needs of that individual job, the job runs almost immediately, and then when it’s complete those resources are decommissioned.”
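The per-job scaling Kaiser describes can be pictured with a minimal sketch. This is not CycleCloud's API or implementation; the `Job` and `ElasticCluster` names and the 16-cores-per-node figure are hypothetical, chosen only to illustrate provisioning on submit and decommissioning on completion.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    cores: int  # cores the job requests


@dataclass
class ElasticCluster:
    # Hypothetical instance size; real node sizes vary by instance type.
    cores_per_node: int = 16
    # Maps job name -> number of nodes currently provisioned for it.
    nodes: dict = field(default_factory=dict)

    def submit(self, job: Job) -> int:
        """Provision just enough nodes for this job and return the count."""
        needed = math.ceil(job.cores / self.cores_per_node)
        self.nodes[job.name] = needed
        return needed

    def complete(self, job: Job) -> int:
        """Decommission the job's nodes and return how many were released."""
        return self.nodes.pop(job.name, 0)

    @property
    def active_nodes(self) -> int:
        """Total nodes currently provisioned across all running jobs."""
        return sum(self.nodes.values())


cluster = ElasticCluster()
job = Job("cfd_run", cores=200)
print(cluster.submit(job))    # nodes provisioned for this one job
print(cluster.active_nodes)   # cluster has grown to fit the job
print(cluster.complete(job))  # nodes released when the job finishes
print(cluster.active_nodes)   # cluster is back to zero nodes
```

In this toy model the cluster holds no capacity between jobs, which is the contrast with a data center provisioned for steady-state load.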

Kaiser said there is already some misunderstanding that the combined ANSYS-Cycle offering targets only burst-to-the-cloud demand situations.

“It’s more than that,” he said. “People imagine burst capabilities…, it sounds great. They think: ‘I have an on-prem job, I’ll submit it to the cloud and when it’s done I’ll bring it back.’ But therein lies the problem: Bringing it back.”

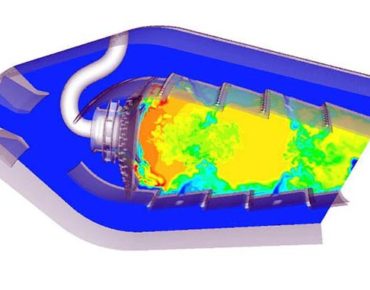

Not only do ANSYS jobs use a significant amount of compute resources, he said, but once that job is complete the resulting data set can be extremely large. “So if the idea is to bring that data set back on prem and finish the simulation process there…, for most of our software that’s done interactively. You get the data, you load it onto a graphical workstation, you slice and dice it…and extract the useful information. That last part is graphical in nature. So if your vision is to launch to the cloud for the HPC and then bring the results back, you’ve got a data transfer problem. Our results files are routinely huge.”
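A rough back-of-the-envelope calculation shows why bringing results back on-prem can be a bottleneck. The file size and link speeds below are hypothetical, not figures from ANSYS, and the function assumes the link is fully available for the transfer.

```python
def transfer_hours(size_gb: float, link_mbps: float, efficiency: float = 1.0) -> float:
    """Hours needed to move size_gb gigabytes over a link_mbps megabit/s link.

    efficiency is the fraction of nominal bandwidth actually achieved (0-1].
    """
    bits = size_gb * 8e9  # decimal gigabytes to bits
    seconds = bits / (link_mbps * 1e6 * efficiency)
    return seconds / 3600


# Hypothetical example: a 500 GB results file.
print(round(transfer_hours(500, 100), 1))   # over a 100 Mbit/s link
print(round(transfer_hours(500, 1000), 1))  # over a 1 Gbit/s link
```

Under these assumptions the slower link needs roughly half a day for a single results file, before any post-processing can begin.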

The answer, he said, is to conduct the entire simulation process in the cloud. “Without moving the data after it’s computed, you spin up a graphical workstation in the cloud and do your post processing with the data in place, still in the cloud. You’re using some sort of thin client locally to interact with the software, but it’s all physically running in the cloud.”