Facebook Datacenters Get ‘Express Backbone’

(Supphachai Salaeman/Shutterstock)

Hyper-scalers are being forced to become more creative in breaking network bottlenecks as they cope with greater bandwidth demand for storage backup of video and other "rich" content along with growing processing requirements across far-flung datacenters running analytics and machine learning applications.

Among the latest efforts to expand datacenter backbones is Facebook's new long-haul network designed to replace its single wide-area network known as "classic backbone" while linking U.S. and European datacenters.

In a blog posted on Monday (May 1), the social media giant (NASDAQ: FB) described a souped-up "Express Backbone" designed over the past year and now running in production. Not only is the EBB a bigger pipe, it also adds new networking capacity as Facebook expands its datacenter footprint. Further, the scalable backbone incorporates new traffic engineering capabilities for routing internal server traffic at scale, the company reported.

Facebook engineers Mikel Jimenez and Henry Kwok also noted a substantial learning curve while building and implementing the new network. "While we uncovered new challenges, such as going through growth pains of keeping the network state consistent and stable, the centralized real-time scheduling has proven to be simple and efficient," they claimed.

Other design challenges included the rollout of new software components such as a controller, traffic estimator and agents along with "debugging inconsistent states across different entities, debugging packet loss and diagnosing bad LSPs," or label-switched paths.

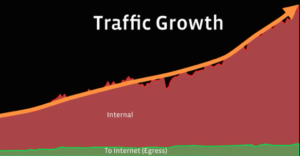

Facebook's earlier backbone, implemented over the last decade, connected multiple datacenters in the U.S. and Europe, carrying user, or what the company calls "egress," traffic along with internal server-to-server traffic. The proliferation of video and photo content forced network engineers to come up with a new network topology that would allow for incremental deployment of new features and code updates, "preferably in fractional units of network capacity to allow for fast iterations," Jimenez and Kwok stressed.

More efficient use of network resources also was a design priority, along with maintaining a "lean network state" by leveraging multiprotocol label switching for segment routing at the network edge.

The number of network prefixes in the Express Backbone was limited to thousands, allowing the designers to use commodity network equipment. Meanwhile, the network topology was divided into four parallel topologies, or "planes," an architecture based on Facebook's current datacenter network fabric.

An explosion of video and other network traffic along with analytics and machine learning applications drove the Facebook networking effort. (Source: Facebook)

Meanwhile, an internal distributed networking platform called Open/R was used in the backbone's final version, "integrating the distributed piece with the central controller," the Facebook engineers explained. Introduced last year, Open/R was initially used as a "shortest path routing system" for Facebook's Terragraph wireless network.

Hence, the new datacenter network afforded engineers the opportunity to scale Open/R to forge high-bandwidth links between datacenters while anticipating infrastructure expansion. In practice, the LSP agents detect network topology changes in real time via Open/R's message bus and then react locally. A central controller also uses Open/R agents to determine the network's state.
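The "detect a change, then react locally" pattern can be illustrated with a minimal Python sketch. It is not Facebook's code and does not use the Open/R API: the `LspAgent` class, its callback, and the link and path names are all hypothetical, and the failover rule (take the first candidate path with no failed link) is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class LspAgent:
    """Hypothetical per-router agent; names are illustrative, not Open/R's."""

    # Maps an LSP name to its candidate paths, in preference order.
    # Each path is a list of link names.
    paths: Dict[str, List[List[str]]]
    down_links: Set[str] = field(default_factory=set)

    def on_link_event(self, link: str, is_up: bool) -> None:
        """Callback a message bus might invoke when a link changes state."""
        if is_up:
            self.down_links.discard(link)
        else:
            self.down_links.add(link)

    def active_path(self, lsp: str) -> Optional[List[str]]:
        """Return the first candidate path that uses no failed link."""
        for path in self.paths[lsp]:
            if not self.down_links.intersection(path):
                return path
        return None


agent = LspAgent(paths={"dc1->dc2": [["a-b", "b-c"], ["a-d", "d-c"]]})
primary = agent.active_path("dc1->dc2")

# A link on the primary path fails; the agent switches without a controller.
agent.on_link_event("b-c", is_up=False)
backup = agent.active_path("dc1->dc2")
```

In this sketch the agent needs no round trip to a central controller to move traffic off a failed link; a controller could later recompute and push a new set of candidate paths.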

Among the new features to be added to the new Express Backbone is the ability to "throttle" network traffic to avoid bottlenecks, or, as the Facebook engineers put it, "so that congestion can be avoided proactively, as opposed to managed reactively."
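The article does not say how Facebook plans to throttle traffic. As a generic illustration of proactive rate limiting at the source, the sketch below uses a token bucket, a common technique chosen here as an assumption rather than a description of the Express Backbone. The `TokenBucket` class and its parameters are hypothetical.

```python
class TokenBucket:
    """Generic token-bucket limiter; not taken from Facebook's design."""

    def __init__(self, rate_bps: float, burst_bits: float) -> None:
        self.rate_bps = rate_bps      # sustained rate, in bits per second
        self.burst_bits = burst_bits  # maximum burst size, in bits
        self.tokens = burst_bits      # bucket starts full
        self.last = 0.0               # time of the previous call, in seconds

    def allow(self, size_bits: float, now: float) -> bool:
        """Refill for the elapsed time, then admit the packet if it fits."""
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.burst_bits,
                          self.tokens + elapsed * self.rate_bps)
        if size_bits <= self.tokens:
            self.tokens -= size_bits
            return True
        return False


bucket = TokenBucket(rate_bps=1000, burst_bits=500)
first = bucket.allow(400, now=0.0)   # fits within the initial burst
second = bucket.allow(400, now=0.1)  # only about 200 bits available: held back
third = bucket.allow(400, now=0.5)   # bucket has refilled: admitted
```

Holding a flow back at the sender before a link saturates is what separates this kind of throttling from reacting to packet loss after congestion has already occurred.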

The new network is among several Facebook projects designed to boost capacity in its sprawling complex of datacenters. For example, last fall the company said it was testing packet-optical switches in datacenters operated by development partner Equinix (NASDAQ: EQIX).

The partners said the open source effort targets new datacenter architectures that would support fifth-generation, or 5G, networking, Internet of Things deployments and other future datacenter use cases.

George Leopold has written about science and technology for more than 30 years, focusing on electronics and aerospace technology. He previously served as executive editor of Electronic Engineering Times. Leopold is the author of "Calculated Risk: The Supersonic Life and Times of Gus Grissom" (Purdue University Press, 2016).