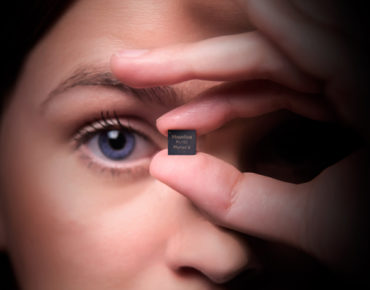

Intel Debuts Myriad X Vision Processing Unit for Neural Net Inferencing

The Movidius™ Myriad™ X VPU delivers artificial intelligence at the edge for drones, robotics, smart cameras, virtual reality/augmented reality solutions and more. Introduced on Aug. 28, 2017, Myriad X is the world’s first SOC shipping with a dedicated Neural Compute Engine to give devices the ability to see, understand and react to their environments in real time.(Credit: Intel Corporation)

Intel today introduced the Movidius Myriad X Vision Processing Unit (VPU) which Intel is calling the first vision processing system-on-a-chip (SoC) with a dedicated neural compute engine to accelerate deep neural network inferencing at the network edge. You may recall Intel acquired Movidius roughly a year ago for its visualization processing expertise. Introduction of the SoC closely follows release of the Movidius Neural Compute Stick in July, a USB-based offering “to make deep learning application development on specialized hardware even more widely available.”

Intel says the VPU’s new neural compute engine is an on-chip hardware block specifically designed to run deep neural networks at high speed and low power. “With the introduction of the Neural Compute Engine, the Myriad X architecture is capable of 1 TOPS – trillion operations per second based on peak floating-point computational throughput of Neural Compute Engine – of compute performance on deep neural network inferences,” says Intel.

Commenting on the introduction Steve Conway of Hyperion Research said, “The Intel VPU is an essential part of the company’s larger strategy for deep learning and other AI methodologies. HPC has moved to the forefront of R&D for AI, and visual processing complements Intel’s HPC strategy. In the coming era of autonomous vehicles and networked traffic, along with millions of drones and IoT sensors, ultrafast visual processing will be indispensable.”

Intel reports the new Movidius SoC VPU is capable of delivering more than 4 TOPS[i] of total performance and that its tiny form factor and on-board processing are ideal for autonomous device solutions. In addition to its neural compute engine, Myriad X combines imaging, visual processing and deep learning inference in real time with:

Intel reports the new Movidius SoC VPU is capable of delivering more than 4 TOPS[i] of total performance and that its tiny form factor and on-board processing are ideal for autonomous device solutions. In addition to its neural compute engine, Myriad X combines imaging, visual processing and deep learning inference in real time with:

- “Programmable 128-bit VLIW Vector Processors: Run multiple imaging and vision application pipelines simultaneously with the flexibility of 16 vector processors optimized for computer vision workloads.

- Increased Configurable MIPI Lanes: Connect up to 8 HD resolution RGB cameras directly to Myriad X with its 16 MIPI lanes included in its rich set of interfaces, to support up to 700 million pixels per second of image signal processing throughput.

- Enhanced Vision Accelerators: Utilize over 20 hardware accelerators to perform tasks such as optical flow and stereo depth without introducing additional compute overhead.

- 2.5 MB of Homogenous On-Chip Memory: The centralized on-chip memory architecture allows for up to 450 GB per second of internal bandwidth, minimizing latency and reducing power consumption by minimizing off-chip data transfer.”

Remi El-Ouazzane, former CEO of Movidius and now vice president and general manager of Movidius, Intel New Technology Group, is quoted in the announcement release: “Enabling devices with humanlike visual intelligence represents the next leap forward in computing. With Myriad X, we are redefining what a VPU means when it comes to delivering as much AI and vision compute power possible, all within the unique energy and thermal constraints of modern untethered devices.”

Certainly neural network technology and product development are moving quickly on both on the training and inferencing fronts. It seems likely there will be a proliferation of AI-related “processing units” spanning chip-to-system level products as the technology takes hold both inside datacenters and on network edges. Google, of course, has introduced the second generation of its Tensor processing unit (TPU), Graphcore has an intelligent processing unit (IPU), and Fujitsu has a deep learning unit (DLU).

El-Ouazzane has written a blog about the new SoC in which he notes, “As we continue to leverage Intel’s unique ability to deliver end-to-end AI solutions from the cloud to the edge, we are bound to deliver a VPU technology roadmap that will continue to dramatically increase edge compute performance without sacrificing power consumption. This next decade will mark the birth of brand-new categories of devices.”

According to Intel, key features of the neural compute stick, aimed at developers, include:

- Supports CNN profiling, prototyping, and tuning workflow

- All data and power provided over a single USB Type A port

- Real-time, on device inference – cloud connectivity not required

- Run multiple devices on the same platform to scale performance

- Quickly deploy existing CNN models or uniquely trained networks

Link to El-Ouazzane’s blog: https://newsroom.intel.com/editorials/introducing-myriad-x-unleashing-ai-at-the-edge/

Link to announcement of Movidius USB stick: https://www.intelnervana.com/introducing-movidius-neural-compute-stick/

[i] Architectural calculation based on the maximum operations-per-second performance over all compute units