Google Is Latest ‘Big Three’ Cloud Provider to Offer V100 GPUs

Google announced today that Nvidia Tesla V100 GPUs are now available in public beta on its Google Cloud Platform (GCP), accessible through its infrastructure-as-a-service offering Compute Engine and on Kubernetes Engine, its managed environment for deploying containerized applications. The cloud giant also announced general availability of Nvidia’s previous-generation P100 parts, in public beta on Google’s platform since September 2017.

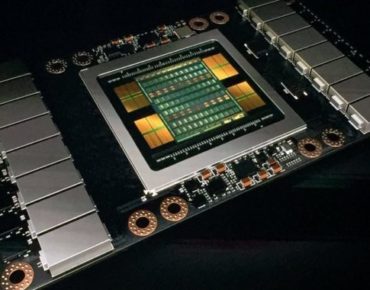

The Tesla V100 is Nvidia’s highest-end datacenter GPU, aimed at high-performance computing and machine learning workloads. The first product based on the Volta architecture, unveiled by Nvidia in May 2017, the Tesla V100 for NVLink offers 125 teraflops of mixed-precision floating point, 15.7 teraflops of single-precision floating point, and 7.8 teraflops of double-precision floating point performance. The NVLink 2.0 interconnect provides up to 300GB/s of GPU-to-GPU bandwidth, nearly 10x the data throughput offered by traditional PCIe, boosting performance on deep learning and HPC workloads by up to 40 percent, according to Nvidia.

A single virtual machine supports up to eight Nvidia Tesla V100 GPUs, 96 vCPUs and 624GB of system memory, enabling 1 petaflops of mixed-precision performance on account of each chip’s 5,120 CUDA cores and 640 Tensor cores. Currently, users can select only one- or eight-GPU configurations; support for two or four attached GPUs is planned.
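The 1-petaflops figure follows directly from the per-GPU peak numbers quoted above. A minimal Python sketch of that arithmetic is shown here; the names `V100_PEAK_TFLOPS` and `aggregate_peak_tflops` are illustrative, not part of any Google or Nvidia API, and the results are theoretical peaks rather than measured throughput.

```python
# Per-GPU peak figures for the Tesla V100 for NVLink, as quoted in the article.
V100_PEAK_TFLOPS = {
    "mixed": 125.0,   # Tensor core mixed precision
    "single": 15.7,   # FP32
    "double": 7.8,    # FP64
}


def aggregate_peak_tflops(num_gpus: int, precision: str = "mixed") -> float:
    """Return the theoretical peak teraflops for num_gpus V100s.

    This simply multiplies the per-GPU peak by the GPU count; it ignores
    interconnect and scaling overheads, so real workloads will see less.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return num_gpus * V100_PEAK_TFLOPS[precision]


if __name__ == "__main__":
    for precision in V100_PEAK_TFLOPS:
        total = aggregate_peak_tflops(8, precision)
        print(f"8 x V100, {precision} precision: {total:.1f} teraflops")
```

Eight GPUs at 125 teraflops each give 1,000 teraflops, or 1 petaflops, of peak mixed-precision performance.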

While Google was the first of the big three public cloud providers to embrace P100s, it was the last to adopt V100s. Amazon Web Services has offered the Volta parts since October 2017. Microsoft Azure followed with a private preview in November 2017. And IBM brought PCIe variant V100s into its cloud in January of this year.

One wonders if Google’s delay was orchestrated to give its proprietary Tensor Processing Units (TPUs) some time to shine. Google revealed the specialized machine learning silicon one year ago and debuted its second-gen TPUs in its cloud in February. [Here’s an interesting comparison of the TPU and V100 on a popular deep learning benchmark.]

The V100 GPUs are available in the following Google Cloud regions: us-west1, us-central1 and europe-west4. The hourly price is $2.48 per GPU for on-demand VMs in U.S. regions, with preemptible VMs listed at half that price. GPU instances are billed by the second, and sustained use discounts of up to 30 percent apply to on-demand GPU machines only.
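To make the pricing concrete, here is a rough Python sketch of a GPU-only cost estimate using the figures above. It rests on assumptions: the function name `estimate_gpu_cost` is hypothetical, the sustained use discount is passed in as a flat fraction (the article gives only the 30 percent ceiling, not how the discount accrues), and charges for vCPUs, memory and storage are not included.

```python
# U.S.-region V100 prices from the article, in dollars per GPU per hour.
ON_DEMAND_PER_GPU_HOUR = 2.48
PREEMPTIBLE_PER_GPU_HOUR = ON_DEMAND_PER_GPU_HOUR / 2  # "half that price"
MAX_SUSTAINED_DISCOUNT = 0.30  # "up to 30 percent", on-demand only


def estimate_gpu_cost(num_gpus: int, seconds: float,
                      preemptible: bool = False,
                      sustained_discount: float = 0.0) -> float:
    """Estimate the GPU portion of a bill in dollars.

    Billing is per second, so the hourly rate is prorated by seconds / 3600.
    sustained_discount is an assumed flat fraction between 0 and 0.30.
    """
    if not 0.0 <= sustained_discount <= MAX_SUSTAINED_DISCOUNT:
        raise ValueError("sustained_discount must be between 0 and 0.30")
    if preemptible and sustained_discount > 0:
        # The article states the discount applies to on-demand machines only.
        raise ValueError("sustained use discounts do not apply to preemptible VMs")
    rate = PREEMPTIBLE_PER_GPU_HOUR if preemptible else ON_DEMAND_PER_GPU_HOUR
    cost = num_gpus * rate * (seconds / 3600.0)
    return cost * (1.0 - sustained_discount)


if __name__ == "__main__":
    day = 24 * 3600
    print(f"On-demand:   ${estimate_gpu_cost(8, day):.2f}")
    print(f"Preemptible: ${estimate_gpu_cost(8, day, preemptible=True):.2f}")
    print(f"Max discount: ${estimate_gpu_cost(8, day, sustained_discount=0.30):.2f}")
```

Under these assumptions, eight V100s for 24 hours come to about $476.16 on demand and $238.08 on preemptible VMs, before any other instance charges.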

Google advised that customers have the option of deploying different GPUs depending on their workload, storage requirements and price considerations. “If you’re seeking a balance between price and performance, the Nvidia Tesla P100 GPU is a good fit,” said Google Product Managers Chris Kleban and Ari Liberman in a blog post announcing the hardware additions. “You can select up to four P100 GPUs, 96 vCPUs and 624GB of memory per virtual machine. Further, the P100 is also now available in europe-west4 (Netherlands) in addition to us-west1, us-central1, us-east1, europe-west1 and asia-east1.”

With over a decade’s experience covering the HPC space, Tiffany Trader is one of the preeminent voices reporting on advanced scale computing today.