Nvidia Unifies AI, HPC Workloads in Datacenters

Nvidia’s latest cloud server platform is intended as a “building block,” in the reference design sense, to support AI training and inference along with HPC workloads such as simulations.

The GPU vendor (NASDAQ: NVDA) introduced its latest server platform, dubbed HGX-2, on Wednesday (May 30) during a company roadshow in Taipei, Taiwan. Nvidia said the cloud server can be throttled up or down to match the precision an HPC calculation requires, from 32-bit single-precision floating point (FP32) up to 64-bit double precision (FP64).

Meanwhile, AI training and inference workloads are supported with FP16, or half precision, along with 8-bit integer (Int8) data. The combination is designed to meet the varying processing requirements of a growing number of enterprise applications that combine AI with HPC, the company noted.
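To make the trade-off between these formats concrete, the following minimal Python sketch stores the same value as FP64, FP32, FP16 and Int8 and compares the rounding error. It runs on a CPU using only the standard library and does not touch Nvidia hardware or software; the helper names `round_trip` and `quantize_int8`, and the symmetric scale of 1/127, are assumptions chosen for illustration, not anything Nvidia describes.

```python
import struct


def round_trip(value, fmt):
    """Pack a Python float into an IEEE 754 format and unpack it again.

    fmt is a struct format string: "<d" (FP64), "<f" (FP32) or "<e" (FP16).
    """
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def quantize_int8(value, scale):
    """Map a float to a signed 8-bit integer with a symmetric scale.

    The scale is a hypothetical choice for this sketch; real inference
    stacks calibrate it per tensor or per channel.
    """
    q = round(value / scale)
    return max(-128, min(127, q))  # clamp to the Int8 range


value = 0.1
fp64 = round_trip(value, "<d")  # Python floats are already FP64
fp32 = round_trip(value, "<f")
fp16 = round_trip(value, "<e")

scale = 1.0 / 127.0             # assumed: inputs lie roughly in [-1, 1]
int8 = quantize_int8(value, scale)
int8_restored = int8 * scale    # dequantized approximation

for name, stored in [("FP64", fp64), ("FP32", fp32),
                     ("FP16", fp16), ("Int8", int8_restored)]:
    print(f"{name}: stored={stored!r} error={abs(stored - value):.3e}")
```

The error grows as the format narrows, which is why simulations tend to stay at FP32 or FP64 while many training and inference workloads tolerate FP16 or Int8.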

The HGX-2 is based on Nvidia’s Tensor Core GPUs that target AI-powered deep learning applications ranging from autonomous driving to natural speech. Nvidia said the second iteration, released a year after the unveiling of its first HGX platform, serves as a building block for manufacturers combining AI and HPC. The company claimed AI training speeds of up to 15,500 images per second based on the ResNet-50 training benchmark.

“What accelerated computing is all about is the full stack: creating a new type of processor that fuses HPC and AI, optimizing across the full stack, developing switches, developing motherboards, developing systems, system software, APIs and libraries,” noted Nvidia CEO Jensen Huang.

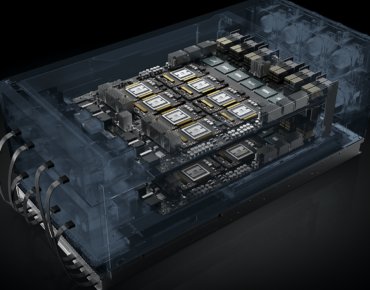

The platform incorporates Nvidia’s NVSwitch interconnect fabric, which is used to link 16 Tesla V100 Volta GPUs. The combined system, capable of delivering 2 petaflops of “AI performance,” serves as the basis of Nvidia’s recently announced DGX-2 deep learning platform.
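As a rough check on those figures, the short Python sketch below divides the quoted 2 petaflops evenly across the 16 GPUs. It assumes the aggregate number is a simple sum of per-GPU peak throughput with no interconnect overhead, which the article does not state; the function name `per_gpu_teraflops` is illustrative.

```python
TOTAL_PETAFLOPS = 2.0  # "AI performance" quoted for the full system
GPU_COUNT = 16         # Tesla V100 GPUs linked by NVSwitch


def per_gpu_teraflops(total_petaflops, gpu_count):
    """Return each GPU's share of the total, in teraflops.

    Assumes the total is an even, overhead-free sum across GPUs.
    """
    return total_petaflops * 1000.0 / gpu_count


share = per_gpu_teraflops(TOTAL_PETAFLOPS, GPU_COUNT)
print(f"Implied share per GPU: {share} teraflops")
```

Under that assumption, each GPU accounts for 125 teraflops of the headline figure.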

Targeting the growing number of AI workloads handled in data centers, Nvidia also said four server makers, Lenovo (HKG: 0992), QCT, Supermicro and Wiwynn, would release systems later this year based on the HGX-2. Meanwhile, the Taiwanese electronics manufacturing giant Foxconn (TPE: 2354) is among a group of equipment makers planning to release cloud datacenter systems based on the new Nvidia server platform.

Those manufacturers joined other server makers and datacenter operators that have deployed the initial HGX architecture, including Amazon Web Services (NASDAQ: AMZN), Facebook (NASDAQ: FB) and Microsoft (NASDAQ: MSFT).

Nvidia asserts its “unified computing platform” addresses the requirements of server manufacturers seeking to align datacenter infrastructure by offering a mix of CPUs, GPUs and interconnections for training, inference and HPC workloads. In a testimonial, Ed Wu, a corporate executive vice president at Foxconn, said the HGX-2 would help it “fulfill the explosive demand [for datacenter processing] from AI” and deep learning.

--Tiffany Trader contributed to this report.

George Leopold has written about science and technology for more than 30 years, focusing on electronics and aerospace technology. He previously served as executive editor of Electronic Engineering Times. Leopold is the author of "Calculated Risk: The Supersonic Life and Times of Gus Grissom" (Purdue University Press, 2016).