Guidelines for Getting Going with AI and Advanced Analytics

These are exciting times for anyone yearning to drive significant business value from the masses of data collected in the cloud. We finally have the processing power, the software frameworks and a public willing to conduct most of its daily transactions on the internet. But there are pitfalls to beware of when using AI to extract full value from data. This article offers executives responsible for implementing (or benefiting from) AI some suggestions for avoiding them.

Let’s start with examples of AI analytics done right [1]:

- Plexure, which provides software to help retailers drive more in-store transactions, helped McDonald's realize a 700 percent increase in targeted offer responses at a European location by connecting customer buying patterns with context data, such as location, time of day and weather.

- T-Mobile increased its conversion rate by 38 percent and revenue per user by 12 percent using machine learning-based micro segmentation of target customers for the next best offer.

- Under Armour increased revenue by 51 percent using IBM Watson cognitive computing.

- Outdoor sportswear provider Peter Glenn increased order size by 30 percent using AgileOne advanced analytics.

- Telenor, a major mobile operator in Scandinavia and Asia, reduced the length of service calls by 30 percent using machine learning insights from network data.

Because we all want results like these, it’s not surprising that analytics is the top-ranked IT investment around the world. Yet most companies (roughly 75 percent [2]) are still in the early planning phase. That's not necessarily bad: AI is a minefield for the unprepared, and those who have incorporated AI admit that problems stem from lack of organizational readiness, not the technology. So my advice after many years around AI technology usage is a spin on the carpenter's old rule: "measure twice and invest once."

You need to plan carefully based on unique, measured parameters for your company, ecosystem, business needs, skill sets and IT infrastructure. There are some guiding questions that will help you create a customized plan for your particular journey — kind of like using GPS to find your unique destination (Figure 1).

Plan Your Journey and the Arrival

In undertaking an analytics and AI journey, you really need to define what the “arrival” should look like. Start with a precise statement of your business goals. Organizations use analytics to gain customer insights, help manage risk, improve operations, inform their strategy, and more. Part of defining your goal is to understand the questions you are trying to answer with data:

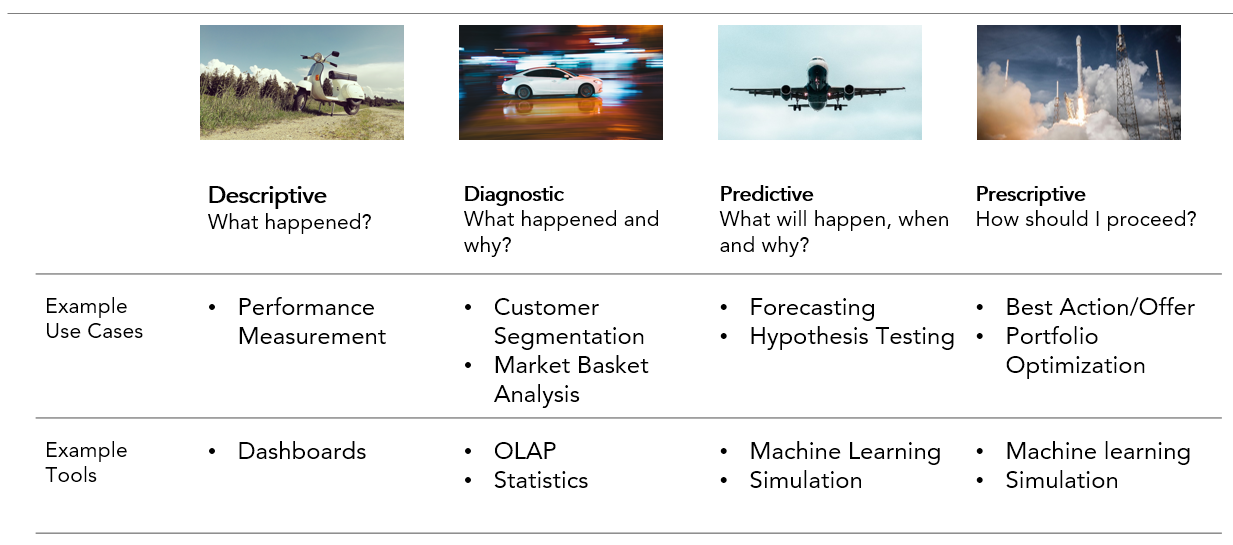

- Descriptive analytics—what has happened?

- Diagnostic analytics—why did it happen?

- Predictive analytics—what will happen and why?

- Prescriptive analytics—how should we proceed?

Figure 2. Applications of AI Analytics and associated technology (reproduced from R&D 100 Conference) [4].

In the figure above, the level of sophistication grows as you move to the right, so companies often start with descriptive analytics and move in stages to prescriptive analytics.

Get Your Pit Crew and Key Stakeholders on Board

You’ll need specialized talent to make your analytics journey successful. But data scientists by themselves will not be able to extract and deliver all the value, so here are the different roles you need on your team:

- Data Scientists transform data into insights using math, statistics, analytical and visualization tools.

- Data Engineers create a high-quality data pipeline from multiple data sources using database and programming skills.

- Subject Matter Experts articulate the business use cases based on their domain, market and business expertise.

- Business Translators combine data savvy with business expertise. They are the key interface between data scientists and the organization’s decision makers, making sure that concepts are mutually understood and that shared business objectives are clear.

Cultural resistance to change is often the number one challenge in implementing new technology. There are other challenges, as well, such as poor understanding of data as an asset, lack of business agility and weak technology leadership. An effective analytics team needs a proactive strategy to overcome organizational inertia:

- Create common terminology (business translators can help here)

- Drive toward a culture of data enablement and literacy

- Reward staff based on a shared goal

- Plan ahead to minimize "ad hoc" changes

- Measure processes to collect objective data — but beware of too many metrics; focus on a specific use case or business goal

There are many ways to integrate new analytics staff into an organization, e.g., embedding them in a business unit or IT department, creating a new department, or creating a cross-organizational virtual team. The best approach is organizationally dependent, so follow the path of least resistance and shortest time to impact. Start with a small project and well-defined goals and see where the "gravitational pull" leads you, i.e., where the best engagements and relationships form. Then replicate this "natural" organizational structure as you scale up.

Use High-Octane Fuel

Your analytics engine will need large amounts of data to keep it running smoothly, but not just any raw data. You need high-quality data gleaned from a variety of sources: structured data from your ERP and CRM systems as well as unstructured data from web pages, mobile devices, social media and, increasingly, the Internet of Things. You need to bring together all of this siloed internal data along with external data, in all of their various formats.

This means your analytics team will need to design a system to prepare all your raw data for training. Data scientists work with subject matter experts to define the logical relationships among data types, identify incomplete records, remove data outliers, fix sampling bias, and make other refinements. This discipline, called "feature engineering" [5], makes use of math, statistics, and data visualization tools to enhance and enrich the data. Your data engineers will apply the transformations defined by data scientists to create a high-throughput data pipeline from all the data sources using their IT, database and programming skills.
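Two of the refinements named above, identifying incomplete records and removing outliers, can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the record layout, the field name `order_value`, and the outlier rule (more than five median absolute deviations from the median) are all assumptions chosen for the example.

```python
from statistics import median


def clean_records(records, field, max_mad_multiple=5.0):
    """Drop incomplete records, then drop outliers in `field`.

    A value counts as an outlier when it lies more than `max_mad_multiple`
    median absolute deviations (MAD) from the median. The threshold is an
    illustrative rule of thumb, not a universal standard.
    """
    # Step 1: keep only records in which every value is present.
    complete = [r for r in records if all(v is not None for v in r.values())]
    if not complete:
        return []

    # Step 2: measure the typical spread of the field around its median.
    values = [r[field] for r in complete]
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return complete  # no spread to measure, so nothing is flagged

    # Step 3: keep records whose value lies close enough to the median.
    return [r for r in complete if abs(r[field] - center) <= max_mad_multiple * mad]


# Hypothetical order data with one incomplete record and one suspicious value.
orders = [
    {"customer": "a", "order_value": 52.0},
    {"customer": "b", "order_value": 48.0},
    {"customer": "c", "order_value": None},    # incomplete record
    {"customer": "d", "order_value": 50.0},
    {"customer": "e", "order_value": 55.0},
    {"customer": "f", "order_value": 9000.0},  # likely data-entry error
]
cleaned = clean_records(orders, "order_value")
print([r["customer"] for r in cleaned])
```

In practice the subject matter experts decide what counts as an outlier; a very large order may be an error in one business and the most valuable customer in another.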

This is a big job: data gathering and data prep can consume more than 60 percent of your analytics efforts. How much data are we talking about? As storage providers will tell you with a smile, the sky’s the limit. Here's a sample of daily data volume from several common sources [6]:

- Average Internet User: 1.5 GB

- Autonomous Vehicle: 4 TB

- Connected Airplane: 5 TB

- Smart Factory: 1 PB

- Cloud Video Provider: 750 PB
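To see what these daily figures imply for storage planning, the short calculation below scales each one to a full year. It is a rough sketch that assumes decimal units (1 PB = 1,000 TB), a constant daily rate, and that every byte is retained, none of which is stated in the source figures.

```python
# Daily volumes from the list above, in terabytes (assuming 1 PB = 1,000 TB).
DAILY_TB = {
    "Average Internet User": 0.0015,
    "Autonomous Vehicle": 4.0,
    "Connected Airplane": 5.0,
    "Smart Factory": 1_000.0,
    "Cloud Video Provider": 750_000.0,
}


def yearly_petabytes(daily_tb, days=365):
    """Scale a constant daily volume in TB to a yearly volume in PB."""
    return daily_tb * days / 1_000


for source, daily_tb in DAILY_TB.items():
    print(f"{source}: {yearly_petabytes(daily_tb):,.4f} PB per year")
```

Under these assumptions a single autonomous vehicle accounts for about 1.46 PB a year and a smart factory for 365 PB, which is why retention policy matters as much as collection.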

Recommendations for AI Analytics Success

- Slow down to speed up your analytics journey.

- Have a crisp business goal as your North Star for data and infrastructure decisions.

- Focus on integrating your analytics team with the rest of the organization.

- Plan to spend the majority of your time on data and infrastructure preparation.

- Start with pilots and leverage existing infrastructure for fastest time to results.

- Compute, storage and network resources are interdependent; co-design them as a system.

- Measure success and calibrate your infrastructure needs before investing big.

Yes, the data floodgates are open wide! So I warn you: unless you have an unlimited IT budget, resist the temptation to hoard data! Within a large organization it’s tempting to focus only on your own sphere of data and analytics to maintain control and get faster results. But there are hidden bumps down the road. It’s expensive to turn low-quality data into useful data. Data scientists are expensive and in short supply, so finding ways to share both data and the cost of preparation is smart.

More importantly, you need common methods and governance mechanisms to make sure your company-wide data lakes don’t get thrown together into an out-of-control data swamp. This takes cross-company planning and discipline to define how data will be gathered, prepared, shared, integrated and maintained.

Issues to address:

- Common definitions and relationships

- Company policies related to firewalls and protecting sensitive data

- Broad training on proper data handling, making it part of the corporate culture

- How to deal with regulatory frameworks, such as GDPR and HIPAA

Tune Your Engine

With such a huge volume of data, it’s imperative that you also plan your IT infrastructure to handle the coming workload. With user demands growing at 40 percent per year [7] and IT budgets growing at a meager 3 percent [8], this is a huge efficiency challenge. Data analytics impacts all three areas of IT resources: storing data (storage), moving it where it’s needed (networking) and extracting insights (compute power). Fortunately, there are new technologies that address these needs.
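The size of that efficiency challenge can be estimated with simple compounding. The sketch below assumes both growth rates stay constant and compound annually, which the source figures do not guarantee; the function name is made up for this example.

```python
def required_efficiency_gain(years, demand_growth=0.40, budget_growth=0.03):
    """Factor by which capacity per dollar must improve for the budget to keep up.

    Assumes constant, annually compounding growth rates (an illustrative
    simplification of the 40 percent and 3 percent figures in the text).
    """
    return ((1 + demand_growth) / (1 + budget_growth)) ** years


for years in (1, 3, 5):
    print(f"After {years} year(s): {required_efficiency_gain(years):.2f}x more per dollar")
```

Under these assumptions, infrastructure has to deliver roughly 4.6 times more per dollar after five years just to keep pace with demand.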

Hierarchical storage can provide high capacity and high performance at lower cost by integrating SSD and HDD technologies into an efficient system. Compared with HDDs, SSDs provide 100X the performance, half the power consumption and better reliability, but at a higher cost. The key is to combine SSDs and HDDs in the right proportion to meet your requirements at the lowest cost. In the same vein, fast persistent memory can provide a less expensive alternative to DRAM to meet your peak processing demands.
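Finding "the right proportion" can be illustrated with a deliberately simple sizing model. It assumes a pool of fixed total capacity, performance that adds up linearly per terabyte, and SSDs that cost more per terabyte than HDDs, so the cheapest mix is the one with the least SSD capacity that still meets the performance target. The prices and performance units are hypothetical; only the 100X ratio comes from the text.

```python
def min_ssd_tb(capacity_tb, required_perf, hdd_perf_per_tb, ssd_perf_per_tb):
    """Smallest SSD capacity (TB) that lets a fixed-size pool meet required_perf."""
    all_hdd_perf = capacity_tb * hdd_perf_per_tb
    if required_perf <= all_hdd_perf:
        return 0.0  # HDDs alone already meet the target
    # Each TB moved from HDD to SSD adds (ssd - hdd) units of performance.
    ssd_tb = (required_perf - all_hdd_perf) / (ssd_perf_per_tb - hdd_perf_per_tb)
    if ssd_tb > capacity_tb:
        raise ValueError("target exceeds what an all-SSD pool of this size delivers")
    return ssd_tb


def pool_cost(capacity_tb, ssd_tb, hdd_cost_per_tb, ssd_cost_per_tb):
    """Total cost of a pool with ssd_tb of SSD and the remainder on HDD."""
    return ssd_tb * ssd_cost_per_tb + (capacity_tb - ssd_tb) * hdd_cost_per_tb


# Hypothetical requirement: 1,000 TB total and 10,900 performance units,
# with HDD = 1 unit per TB and SSD = 100 units per TB (the 100X ratio).
ssd_tb = min_ssd_tb(1_000, 10_900, hdd_perf_per_tb=1, ssd_perf_per_tb=100)
mixed = pool_cost(1_000, ssd_tb, hdd_cost_per_tb=25, ssd_cost_per_tb=100)
all_ssd = pool_cost(1_000, 1_000, hdd_cost_per_tb=25, ssd_cost_per_tb=100)
print(f"SSD share: {ssd_tb:.0f} TB, mixed cost: {mixed:,.0f}, all-SSD cost: {all_ssd:,.0f}")
```

A real design would also account for caching behavior, data placement and power, but even this toy model shows why measuring the actual performance requirement comes before buying hardware.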

Advances in storage performance (SSDs) are shifting IT bottlenecks to the network. While the throughput of the fastest SSDs has improved by 2500X, typical network speed has increased by only 100X. This can cause underutilization of high-performance storage.

Network issues to investigate as you plan your analytics infrastructure:

- Pay attention to both latency and bandwidth, especially for streaming analytics use cases.

- Design to support peak bursts, typical for big data workloads that have "scatter-gather-merge" data access patterns.

- Pay attention to network "jitter" (latency variation); attaining deterministic latency is sometimes more important than improving average latency.

- Storage control software can mask storage hardware latency gains, so measure first to identify the real bottleneck before investing in network upgrades.
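The distinction between average latency and jitter in the list above is easy to check against measured samples. The sketch below uses the population standard deviation as the jitter measure and a nearest-rank 99th percentile; both are common choices but are assumptions here, and the sample values are invented.

```python
import math
from statistics import mean, pstdev


def latency_summary(samples_ms):
    """Summarize latency samples: mean, jitter (std deviation) and p99."""
    ordered = sorted(samples_ms)
    # Nearest-rank percentile: the smallest sample covering 99% of observations.
    rank = max(1, math.ceil(0.99 * len(ordered)))
    return {
        "mean_ms": mean(ordered),
        "jitter_ms": pstdev(ordered),
        "p99_ms": ordered[rank - 1],
    }


# Two hypothetical links with the same average latency.
steady = latency_summary([10, 11, 9, 10, 10, 11, 9, 10])
bursty = latency_summary([5, 5, 5, 5, 5, 5, 5, 45])
print("steady:", steady)
print("bursty:", bursty)
```

Both links average 10 ms, yet the second one occasionally stalls for 45 ms. A report that shows only the mean would rate them as equal, which is why the tail and the variation deserve their own measurements.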

Finally, make sure you explore disaggregated and composable IT infrastructure. This involves breaking traditional servers into blocks of pure storage and compute resources that can be dynamically composed in the proportions needed for each workload, avoiding overprovisioning. This approach increases IT agility as demands change while reducing overall costs.
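The overprovisioning that composable infrastructure avoids can be made concrete with a small calculation. It models traditional servers as fixed bundles of cores and storage, so a workload that is heavy in one resource strands the other. The server size and workload numbers are hypothetical.

```python
import math


def bundled_servers(cores_needed, storage_tb_needed, cores_per_server, tb_per_server):
    """Resources stranded when compute and storage only come bundled together."""
    # The scarcer resource dictates how many whole servers must be bought.
    servers = max(
        math.ceil(cores_needed / cores_per_server),
        math.ceil(storage_tb_needed / tb_per_server),
    )
    return {
        "servers": servers,
        "idle_cores": servers * cores_per_server - cores_needed,
        "idle_storage_tb": servers * tb_per_server - storage_tb_needed,
    }


# A storage-heavy workload: 64 cores and 2,000 TB on 32-core, 100 TB servers.
print(bundled_servers(64, 2_000, cores_per_server=32, tb_per_server=100))
```

In this example storage forces the purchase of 20 servers, leaving 576 of 640 cores idle. With disaggregated pools, the same workload would draw only the cores it needs and leave the rest available to other workloads.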

You now have a choice of general purpose (CPU) and specialized processors, such as FPGAs, ASICs, and GPUs. The right solution depends on peak performance requirements, operational costs of managing homogeneous vs. heterogeneous infrastructures, and the cost of new capital assets. As you start your AI journey, run pilots and evaluate performance needs. This will help you achieve the fastest time to results, while preventing overinvestment and lock-in before you’ve got your feet firmly planted.

My final recommendation is to measure every step of the way to know if you’re getting to the right destination. Start small, fail fast (i.e., learn fast), keep iterating, prove success, and then by all means GO BIG!

References:

[1] "Market Trends: Maximizing Value From Analytics, Artificial Intelligence and Automation in CSP Contact Centers," Gartner, August 2018.

[2] "Market Guide for ML Compute Infrastructure," Gartner, Sept. 2018.

[3] Source: Intel Corporation.

[4] "Best Practices in Analytics: Integrating Analytical Capabilities and Process Flows," Bill Hostmann, Gartner, March 27, 2012.

[5] "Understanding Feature Engineering (Part 1) - Continuous Numeric Data," Dipanjan (DJ) Sarkar, Towards Data Science, Jan. 2018.

[6] "Data Center First: Intel’s Vision For A Data-Driven World," Rich Miller, Data Center Frontier, February 13, 2017. Compiled by Intel from various sources.

[7] "The Internet of Things (IoT) units installed base by category from 2014 to 2020 (in billions)," Statista.com, 2018.

[8] "Gartner Worldwide IT Spending Forecast," Gartner, Q1 2018.

Figen Ülgen, PhD, is general manager of the Rack Scale Design Group within the Datacenter Division at Intel Corporation, and a co-founder of the award-winning OpenHPC Community under the Linux Foundation.