New Chips from AWS: Arm-based General Purpose and ML Inference

The “x86 Big Bang,” in which market dominance of the venerable Intel CPU has exploded into fragments of processor options suited to varying workloads, has now encompassed CPUs offered by the leading public cloud services provider, AWS, which today announced it has further invested in its Graviton Arm-based chip for general purpose, scale-out workloads, including containerized microservices, web servers and data/log processing.

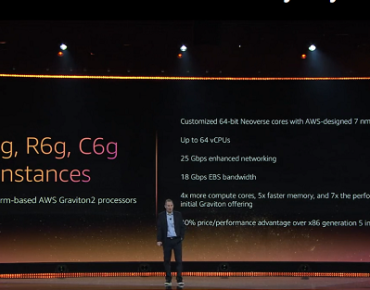

Announced today by Andy Jassy, AWS CEO, at his organization’s sprawling re:Invent conference in Las Vegas, the new Graviton2 second-generation 7nm AWS cloud processor chip is based on 64-bit Arm Neoverse cores and, according to Amazon, delivers up to 40 percent higher throughput from comparable x86-based instances at 20 percent lower cost. The company said Graviton2 has 2x faster floating-point processing per core for scientific and high-performance workloads, and it can support up to 64 virtual CPUs, 25 Gbps of networking and 18 Gbps of EBS bandwidth.

The Graviton chips are the creation of engineers at Israeli-based Annapurna Labs, acquired by Amazon nearly five years ago. Graviton, which Jassy said was the first Arm-based processor in a public cloud at the time of its launch a year ago, is intended to take on a wide variety of workloads typically handled by x86 processors. The new processor grew from AWS’s decision that “if we wanted to push the price performance envelope, it meant that we had to do some innovating ourselves,” Jassy said. “And so we took this to the Annapurna team and we set them loose on a couple of chips that we wanted to build that we thought could provide meaningful differentiation in terms of performance and things that really mattered.”

Nothing that Graviton2 can deliver up to 7x the performance of Graviton A1 (first gen) instances, AWS Chief Evangelist Jeff Barr said in a blog today that additional memory channels and double-sized per-core caches accelerate memory access by up to 5x. He said AWS plans to use Graviton2 to run Amazon EMR, Elastic Load Balancing, Amazon ElastiCache and other AWS services.

Barr said AWS is working on three types of Graviton2-powered EC2 instances (the d suffix indicates NVMe local storage):

- General Purpose (M6g and M6gd) – 1-64 vCPUs and up to 256 GiB of memory.

- Compute-Optimized (C6g and C6gd) – 1-64 vCPUs and up to 128 GiB of memory.

- Memory-Optimized (R6g and R6gd) – 1-64 vCPUs and up to 512 GiB of memory.

He said the instances will have up to 25 Gbps of network bandwidth, 18 Gbps of EBS-Optimized bandwidth and will also be available in bare metal form.

Jassy also announced the launch of another Annapurna-built chip that he said takes on the most expensive, if less discussed, aspect of machine learning implementations: inferencing. The company announced Inf1 instances in four sizes (see image) powered by AWS Inferentia processors. The largest instance offers 16 chips, providing more than 2 petaOPS of inferencing throughput for TensorFlow, PyTorch and MxNet inferencing workloads. “When compared to the G4 instances, the Inf1 instances offer up to 3x the inferencing throughput, and up to 40 percent lower cost per inference,” Barr said.

“If you do a lot of machine learning at scale and in production,” Jassy said at re:Invent, “…you know that the majority of your costs are in the predictions, are in the inference. And just think about an example – I'll take Alexa as an example. We train that model a couple times a week. It's a big old model, but think about how many devices we have everywhere that are making inferences and predictions, every minute, that 80 to 90 percent of the cost is actually in the predictions. And so this is why we want to try and work on this problem. You know, everybody's talking about training, but nobody is actually working on optimizing the largest cost … with machine learning.”

“If you do a lot of machine learning at scale and in production,” Jassy said at re:Invent, “…you know that the majority of your costs are in the predictions, are in the inference. And just think about an example – I'll take Alexa as an example. We train that model a couple times a week. It's a big old model, but think about how many devices we have everywhere that are making inferences and predictions, every minute, that 80 to 90 percent of the cost is actually in the predictions. And so this is why we want to try and work on this problem. You know, everybody's talking about training, but nobody is actually working on optimizing the largest cost … with machine learning.”