Kubernetes Gets a GPU Boost

An infrastructure vendor focused on AI and machine learning workloads has added GPU support to its Kubernetes-based appliance, which is designed to run those emerging applications in containers. The added GPU horsepower is also meant to help scale in-house workloads to the cloud.

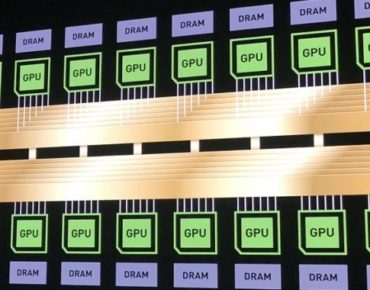

Diamanti, a five-year-old startup that recently closed a $35 million funding round, unveiled this week what it claims is the first enterprise platform with GPU support via Nvidia’s (NASDAQ: NVDA) interconnect technology for running application containers orchestrated on Kubernetes clusters. The container infrastructure vendor recently released its Spektra platform, which adds GPU capacity in cloud clusters as a way to scale on-premises workloads to public clouds.

The combination is also intended to accelerate model development and training, the San Jose-based company said Monday (Dec. 9).

The platform is positioned as a way to add GPU acceleration for hybrid infrastructure that is increasingly shifting to Kubernetes to handle AI and machine learning workloads, according to Tom Barton, Diamanti’s CEO.

The GPU-Kubernetes platform supports Nvidia’s NVLink interconnect technology along with the Kubeflow machine learning framework for Kubernetes. That combination provides greater availability for machine learning pipelines and Jupyter notebooks used to share live code.

Market analysts note that Kubernetes continues to gain momentum among data scientists as a way to orchestrate distributed architectures used to run multiple machine learning frameworks and libraries at scale in production settings. That option allows for quick scaling up or down for large simulations, they note.

Diamanti’s platform is touted as allowing AI applications running as container workloads to shift across on-premises infrastructure and public clouds. The Spektra x86 platform moves applications and data between Kubernetes clusters as needed, creating a GPU-backed Kubernetes service.

The company said early access customers for its platform include financial services and energy companies.

The addition of GPU support is seen as a way of boosting Kubernetes capabilities for handling the growing volume of AI and other high-end computing workloads. That approach is part of a steady push toward deploying machine learning models on GPU-enabled Kubernetes clusters.
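For readers unfamiliar with how a containerized workload asks for a GPU, the sketch below builds a generic Kubernetes Pod manifest that requests one. It is an illustration only, not Diamanti's or Spektra's interface: it assumes a cluster running Nvidia's device plugin, which advertises GPUs under the `nvidia.com/gpu` resource name, and the function name, pod name and image are hypothetical.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Pod manifest whose single container requests `gpus` GPUs.

    Assumes the cluster exposes GPUs as the extended resource
    `nvidia.com/gpu` (the name used by Nvidia's Kubernetes device plugin).
    """
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,  # hypothetical training image
                    # GPUs are requested as whole units under `limits`
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image, for illustration only
    manifest = gpu_pod_manifest("train-job", "example.com/trainer:latest")
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and submitted with `kubectl apply -f`; the scheduler then places the pod only on a node with a free GPU.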

“Enterprises deploying AI workloads at scale are using a combination of on-premises datacenters and the cloud, bringing the AI models to where the data is being collected,” Nvidia noted in a recent blog post describing deployments of its EGX platform via enterprise Kubernetes offerings.

Diamanti’s approach also underscores how Kubernetes is entering the enterprise IT mainstream as a de facto infrastructure service layer. That infrastructure also increasingly relies on GPU accelerators to handle model training and inference.

“Regardless of where the physical infrastructure is located or who provides it, applications are adopting cloud native principles and are deployed using Kubernetes as the common layer of abstraction,” Diamanti noted in a blog post last month unveiling its Spektra platform.

The platform extends bare-metal container technology to add consistency across Kubernetes clusters operating between private and public clouds, the company said.

George Leopold has written about science and technology for more than 30 years, focusing on electronics and aerospace technology. He previously served as executive editor of Electronic Engineering Times. Leopold is the author of "Calculated Risk: The Supersonic Life and Times of Gus Grissom" (Purdue University Press, 2016).