Deep Neural Networks are Easily Fooled – Here’s How Explainable AI Can Help

Many deep neural networks (DNNs) make their predictions from inside an incomprehensible “black box,” which leads domain experts to question the reasoning behind the model's decisions. Which features did the model consider, how heavily was each one weighted, and how did these factors affect the final prediction?

This is exactly the problem Explainable AI (XAI) tries to solve. XAI focuses on making machine learning models understandable to humans. Explainability may not matter much when you are classifying images of cats and dogs, but as ML models are applied to bigger and more consequential problems, XAI becomes critical. When a model decides whether to approve a person for a bank loan, it is imperative that the decision is not biased by irrelevant or inappropriate factors such as race.

Deep neural networks are widely used in various industrial applications such as self-driving cars, machine comprehension, weather forecasting and finance. However, black box DNNs are not transparent and can be easily fooled.

Recently, researchers at McAfee were able to trick a Tesla into accelerating to 85 MPH by simply using a piece of tape to elongate a horizontal line on the “3” of a 35 MPH speed limit sign. In what other ways can DNNs be fooled?

One-pixel attack: Researchers changed the value of a single pixel in an image and fed it to deep neural networks trained on the CIFAR-10 dataset. Even this tiny change easily deceived the DNNs, as seen in figure 1 (Jiawei Su, 2019).

Figure 1: True class labels are written in black; the DNN's predicted class labels and confidence scores are shown below in blue.

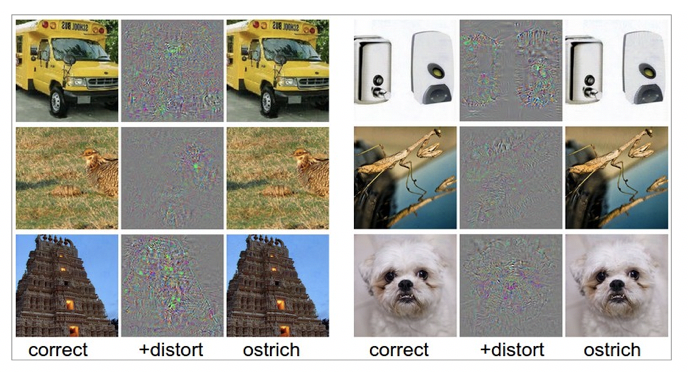
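To make the one-pixel idea concrete, here is a minimal Python sketch. It assumes a hypothetical toy linear classifier on a 4x4 grayscale image rather than a real CNN, and it simply brute-forces every pixel position over a few candidate values; the original attack used differential evolution against real networks, which this sketch does not reproduce. The weights, the image and the function names are made up for illustration.

```python
import itertools

WIDTH = HEIGHT = 4  # hypothetical tiny grayscale image

def score(image, weights):
    """Logit of the toy linear model; positive means class 1."""
    return sum(w * p for w, p in zip(weights, image))

def predict(image, weights):
    return 1 if score(image, weights) > 0 else 0

def one_pixel_attack(image, weights, candidate_values=(0.0, 0.5, 1.0)):
    """Return (index, value, new_image) for the first single-pixel change
    that flips the prediction, or None if no such change exists.
    Brute force is only feasible because the image is tiny."""
    original = predict(image, weights)
    for index, value in itertools.product(range(len(image)), candidate_values):
        perturbed = list(image)
        perturbed[index] = value          # change exactly one pixel
        if predict(perturbed, weights) != original:
            return index, value, perturbed
    return None

# Hypothetical weights: the model is unusually sensitive to pixel 5.
weights = [0.1] * (WIDTH * HEIGHT)
weights[5] = -2.0
image = [0.5] * (WIDTH * HEIGHT)
image[5] = 0.0

print(predict(image, weights))                        # 1
index, value, adversarial = one_pixel_attack(image, weights)
print(index, value, predict(adversarial, weights))    # 5 0.5 0
```

The attack succeeds here because the toy model leans heavily on a single pixel; real one-pixel attacks search for such sensitive positions inside much larger networks.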

Image distortions: Some DNNs work extremely well, surpassing human performance, especially on visual classification tasks. However, researchers have shown that it is possible to change the output of state-of-the-art convolutional neural networks (a class of deep neural networks commonly applied to image analysis) by adding an image distortion that is imperceptible to the human eye (Fergus, 2014).

Figure 2: Real image (left), a tiny distortion (middle), distorted image (right), and incorrect prediction (bottom).

As shown in figure 2, a very small distortion led the DNN, AlexNet, to classify every distorted image as an ostrich.
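The study above found its distortions with an optimization procedure. The sketch below swaps in a simpler, later technique, the fast gradient sign method (FGSM) of Goodfellow et al., applied to a hypothetical linear model with 1,000 input pixels. It illustrates why tiny distortions can work: nudging every pixel by only 0.03 in the least favorable direction shifts the model's score by 0.03 times the sum of the absolute weights, and that sum grows with the number of pixels. The model, weights and image are assumptions, not the setup from the study.

```python
import math

def logit(x, w):
    """Class-1 score of a toy linear model; positive means class 1."""
    return sum(wi * xi for wi, xi in zip(w, x))

def fgsm(x, w, epsilon):
    """Shift every pixel by epsilon in the direction that lowers the
    class-1 logit, clipped to the valid [0, 1] range. For a linear model
    the gradient of the logit w.r.t. x is w, so sign(gradient) = sign(w)."""
    return [min(1.0, max(0.0, xi - epsilon * math.copysign(1.0, wi)))
            for xi, wi in zip(x, w)]

# Hypothetical 1,000-pixel "image" and weights, chosen so the clean
# image is classified as class 1 with a comfortable margin.
n = 1000
w = [1.0 if i % 2 == 0 else -1.0 for i in range(n)]
x = [0.52 if wi > 0 else 0.48 for wi in w]

x_adv = fgsm(x, w, epsilon=0.03)
max_change = max(abs(a - b) for a, b in zip(x, x_adv))
print(round(logit(x, w), 6), round(logit(x_adv, w), 6), round(max_change, 6))
# 20.0 -10.0 0.03
```

No pixel moves by more than 0.03, yet the 1,000 small changes add up to a 30-point drop in the score, enough to flip the prediction.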

In another example, scientists took high-performance CNNs trained on the ImageNet and MNIST datasets and fed them noise-like images that humans cannot recognize. Surprisingly, the CNNs labeled them as specific objects or digits with 99 percent confidence (Anh Nguyen, 2015).
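Images like these were found with search procedures such as evolutionary algorithms. As a much simpler stand-in, the sketch below starts from random noise and greedily pushes pixels to black or white whenever that raises a hypothetical softmax-linear classifier's confidence in one class. The classifier, its random weights and the image size are assumptions; the point is only that confidence can be driven very high by an input that still looks like noise to a person.

```python
import math
import random

N_PIXELS, N_CLASSES = 256, 3   # hypothetical 16x16 grayscale input, 3 classes

def softmax(logits):
    top = max(logits)                      # subtract max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def confidence(image, weights, target):
    """Softmax probability the toy linear model assigns to `target`."""
    logits = [sum(w * p for w, p in zip(row, image)) for row in weights]
    return softmax(logits)[target]

def hill_climb(image, weights, target, sweeps=3):
    """Greedy search: try setting each pixel to black or white and keep the
    change only if it raises the target-class confidence."""
    image = list(image)
    best = confidence(image, weights, target)
    for _ in range(sweeps):
        for i in range(len(image)):
            for value in (0.0, 1.0):
                old = image[i]
                image[i] = value
                score = confidence(image, weights, target)
                if score > best:
                    best = score
                else:
                    image[i] = old         # undo changes that do not help
    return image, best

rng = random.Random(0)
weights = [[rng.uniform(-1, 1) for _ in range(N_PIXELS)] for _ in range(N_CLASSES)]
noise = [rng.random() for _ in range(N_PIXELS)]

print("confidence on raw noise:", round(confidence(noise, weights, target=0), 3))
fooling_image, conf = hill_climb(noise, weights, target=0)
print("confidence after search:", round(conf, 4))
```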

Learning inappropriate rules: AI models trained on movie reviews, for example, may correctly learn that words such as “horrible,” “bad,” “awful” and “boring” are likely to be negative. However, a model may learn inappropriate rules as well, such as treating any review that mentions “Daniel Day-Lewis” as positive, simply because the reviews mentioning him in the training data were overwhelmingly positive.
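The sketch below shows how such a rule can arise. It trains a plain logistic regression, by batch gradient descent, on a tiny made-up set of reviews; the data and helper names are hypothetical. Because the tokens "daniel" and "day-lewis" only ever occur in positive reviews, gradient descent gives them positive weights, exactly as it does for a genuine sentiment word like "brilliant".

```python
import math

# Hypothetical toy training set: (review, label), 1 = positive.
REVIEWS = [
    ("daniel day-lewis was brilliant", 1),
    ("a brilliant film", 1),
    ("daniel day-lewis carries a slow plot", 1),
    ("horrible and boring", 0),
    ("awful acting bad plot", 0),
    ("a boring slow film", 0),
]

def tokenize(text):
    return text.split()

vocab = sorted({tok for text, _ in REVIEWS for tok in tokenize(text)})

def featurize(text):
    """Binary bag-of-words vector over the vocabulary."""
    tokens = set(tokenize(text))
    return [1.0 if tok in tokens else 0.0 for tok in vocab]

def train_logistic_regression(data, epochs=500, lr=0.5):
    """Batch gradient descent on the logistic (cross-entropy) loss."""
    w = [0.0] * len(vocab)
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(vocab)
        grad_b = 0.0
        for text, y in data:
            x = featurize(text)
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            for j, xj in enumerate(x):
                grad_w[j] += (p - y) * xj
            grad_b += p - y
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return dict(zip(vocab, w)), b

weights, bias = train_logistic_regression(REVIEWS)
for token in ("day-lewis", "brilliant", "boring", "horrible"):
    print(token, round(weights[token], 2))
```

Nothing in the training objective distinguishes an actor's name from an opinion word; an explanation method that surfaces the largest weights is what makes the shortcut visible.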

Artifacts in data collection: Artifacts of data collection can introduce unwanted correlations that classifiers pick up during training, and these correlations can be very difficult to spot just by looking at the selected images. For example, researchers used logistic regression to train a binary classifier on 20 images of husky dogs and wolves. All wolf images had snow in the background, but the husky images did not. When the classifier was tested on 60 new images, every image with snow or a light background was labeled a wolf, regardless of the animal's shape, size, color, texture or pose (Marco Tulio Ribeiro, 2016).
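A toy version of the husky-versus-wolf setup reproduces the same failure. Each hypothetical photo is reduced to two made-up features: whether snow is in the background, and an `animal_shape` score that, in this data, is spread identically across both classes. This is a sketch under those assumptions, not the features or data used in the study.

```python
import math

# Hypothetical toy data: (snow_in_background, animal_shape), label 1 = wolf.
# Every wolf photo has snow, no husky photo does, and the made-up
# animal_shape feature is spread identically across both classes.
TRAIN = [
    ((1.0, -1.0), 1), ((1.0, 1.0), 1),   # wolves, always on snow
    ((0.0, -1.0), 0), ((0.0, 1.0), 0),   # huskies, never on snow
]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=1000, lr=1.0):
    """Batch gradient descent on the logistic loss."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for x, y in data:
            err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
            gw = [g + err * xi for g, xi in zip(gw, x)]
            gb += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, gw)]
        b -= lr * gb / len(data)
    return w, b

def is_wolf(x, w, b):
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b) > 0.5

w, b = train(TRAIN)
print("weights (snow, shape):", [round(v, 3) for v in w])
husky_on_snow = (1.0, 1.0)   # a husky photographed in snow
print("husky on snow -> wolf?", is_wolf(husky_on_snow, w, b))  # True
```

Because snow alone separates the training set, the learned shape weight stays at zero and any animal photographed on snow is called a wolf.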

Bias: Bias may be one of the highest-profile issues with DNN systems. Amazon's AI recruiting tool, which was biased against women, immediately comes to mind. An example of racially biased AI is criminal prediction software that mistakenly labeled black individuals as high-threat even when they were, in fact, low-threat.
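One way to surface this kind of bias is to compare error rates across groups instead of relying on a single overall score. The sketch below uses hypothetical records of the form (group, flagged as high risk, actually reoffended) and computes, per group, the accuracy and the false positive rate: the share of people who did not reoffend but were still flagged. The group names and counts are invented for illustration.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Share of correct predictions within each group."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, predicted_high, actual in records:
        total[group] += 1
        correct[group] += predicted_high == actual
    return {g: correct[g] / total[g] for g in total}

def false_positive_rates(records):
    """Share of people who did NOT reoffend but were flagged high risk."""
    flagged, negatives = defaultdict(int), defaultdict(int)
    for group, predicted_high, actual in records:
        if not actual:
            negatives[group] += 1
            if predicted_high:
                flagged[group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}

# Hypothetical records: (group, predicted_high_risk, actually_reoffended)
records = (
    [("A", True, True)] * 4 + [("A", True, False)] * 2 + [("A", False, False)] * 2
    + [("B", True, True)] * 2 + [("B", False, True)] * 2 + [("B", False, False)] * 4
)

print(accuracy_by_group(records))     # {'A': 0.75, 'B': 0.75}
print(false_positive_rates(records))  # {'A': 0.5, 'B': 0.0}
```

In this made-up data both groups get 75 percent accuracy, yet half of group A's low-risk members are flagged compared with none in group B, a disparity that a single accuracy figure would hide.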

With so many examples of DNN systems being easily fooled, whether through biased training data or manipulated inputs, it's clear that the intelligence of AI systems cannot be measured by accuracy or F1 score alone. It's time to make the “black box” more transparent.

Why Do We Need Explainable AI?

“AI is the new electricity” – Andrew Ng

Deep neural networks are becoming as essential as electricity, reaching or even exceeding human performance in a variety of complex tasks. In spite of their general success, there are many highly sensitive fields, such as healthcare, finance, security and transportation, where we cannot blindly trust AI models.

The goals of explainable AI systems are:

- Generating high-performance, explainable models.

- Creating easily understandable and trustworthy explanations.

There are three crucial building blocks for developing explainable AI systems:

- Explainable models – The first challenge in building explainable AI systems is developing new or modified machine learning algorithms capable of maintaining high performance while generating understandable explanations, a balance that is quite difficult to strike.

- Explanation interface – Many state-of-the-art human-computer interaction techniques are available for presenting powerful explanations. Data visualization, natural language understanding and generation, and conversational systems can all be used for the interface.

- Psychological model of explanation – Because human decision-making is often unconscious, psychological theories can help developers as well as evaluators. If users can rate the clarity of generated explanations, the model can be continuously trained on the unconscious factors that lead to human decisions. Of course, bias will need to be monitored to avoid skewing the system.

Balancing performance with explainability

When it comes to XAI models, there is a tradeoff between performance and explainability. Logistic and linear regression, naive Bayes and decision trees are more explainable because their decision-making rules are easily interpretable by humans. Deep learning algorithms, however, are complex and hard to interpret: some CNNs have more than 100 million tunable parameters, which makes them challenging to interpret even for researchers. As a result, high-performing models tend to be less explainable, and highly explainable models tend to perform worse. This can be seen in figure 8.
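To put "more than 100 million parameters" in perspective, the arithmetic below counts the weights and biases of VGG-16, assuming its standard configuration: thirteen 3x3 convolutional layers followed by three fully connected layers, a 224x224 RGB input and 1,000 output classes. Each convolution has k*k*c_in*c_out weights plus c_out biases, and each dense layer has n_in*n_out weights plus n_out biases.

```python
# (in_channels, out_channels) for the 13 3x3 conv layers of VGG-16
CONV_LAYERS = [(3, 64), (64, 64),
               (64, 128), (128, 128),
               (128, 256), (256, 256), (256, 256),
               (256, 512), (512, 512), (512, 512),
               (512, 512), (512, 512), (512, 512)]

# (in_features, out_features) for the 3 fully connected layers;
# 7 * 7 * 512 = 25,088 is the flattened final feature map.
FC_LAYERS = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]

def conv_params(c_in, c_out, k=3):
    return k * k * c_in * c_out + c_out   # weights + biases

def dense_params(n_in, n_out):
    return n_in * n_out + n_out           # weights + biases

conv_total = sum(conv_params(i, o) for i, o in CONV_LAYERS)
fc_total = sum(dense_params(i, o) for i, o in FC_LAYERS)
print(conv_total, fc_total, conv_total + fc_total)
# 14714688 123642856 138357544
```

About 89 percent of the roughly 138 million parameters sit in the fully connected layers, and none of them corresponds to a rule a person could read off directly, unlike the handful of coefficients in a small linear model.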

Explainability can be a mediator between AI and society. It is also an effective tool for identifying issues in DNN models, such as artifacts or biases in the training data. Even though explainable AI is complex, it will be a critical area for focused research in the future. Explanations can be generated using various model-agnostic methods, which treat the trained model as a black box and probe it only through its inputs and outputs.
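As an illustration of a model-agnostic method, here is a minimal sketch in the spirit of LIME (Marco Tulio Ribeiro, 2016): switch interpretable parts of one input on and off, query the black box, and fit a distance-weighted linear model whose coefficients serve as the explanation. The superpixel names, the black-box function and the kernel width are hypothetical, and because there are only four features the sketch enumerates every on/off combination instead of sampling randomly as LIME does.

```python
import itertools
import math

# Hypothetical interpretable components ("superpixels") of one wolf photo.
FEATURES = ["snow", "ears", "muzzle", "tail"]

def black_box(mask):
    """Hypothetical wolf classifier: P(wolf) given which superpixels are kept
    (1) or greyed out (0). It secretly relies mostly on the snow."""
    snow, ears, _muzzle, _tail = mask
    return 0.1 + 0.8 * snow + 0.05 * ears

def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def lime_explain(model, feature_names, kernel_width=0.75):
    """Fit a locally weighted linear surrogate around the original input
    (all features on) and return one coefficient per feature."""
    d = len(feature_names)
    rows, targets, weights = [], [], []
    for mask in itertools.product((0, 1), repeat=d):
        distance = (d - sum(mask)) / d                 # share of features removed
        weights.append(math.exp(-distance ** 2 / kernel_width ** 2))
        rows.append([1.0] + [float(v) for v in mask])  # intercept + features
        targets.append(model(mask))
    k = d + 1
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * t for w, r, t in zip(weights, rows, targets))
            for i in range(k)]
    beta = solve(xtwx, xtwy)
    return dict(zip(feature_names, beta[1:]))

explanation = lime_explain(black_box, FEATURES)
print({name: round(coef, 3) for name, coef in explanation.items()})
# snow ~0.8, ears ~0.05, muzzle and tail ~0
```

Here the surrogate reveals that the hypothetical wolf detector is driven almost entirely by the snow superpixel, the kind of red flag the husky-versus-wolf study surfaced. For real work, a maintained implementation such as the open-source `lime` Python package is a better starting point than this sketch.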

As AI becomes increasingly important in highly sensitive areas such as healthcare, we need to hold its decision-making accountable to human scrutiny. Though some experts doubt the promises of XAI, today’s “black box” has the potential to become Pandora’s Box if left unchecked.

Luckily, we are beginning to develop the tools and expertise to avoid that reality and instead create “glass boxes” of transparency.

--Shraddha Mane is a machine learning engineer at Persistent Systems.