Nvidia Makes Its Enterprise AI Software Generally Available to Users

After months in early release, Nvidia today announced the general availability of Nvidia AI Enterprise, a new software offering designed to bring AI capabilities to the masses via VMware's vSphere. The announcement also includes precertification of AI Enterprise on a handful of industry-standard x86 servers (equipped with GPUs, of course), as well as a partnership with Domino Data Lab for MLOps.

“AI is real and it has real value,” said Manuvir Das, Nvidia’s head of enterprise computing. Das knows it’s real because Nvidia has helped thousands of customers deploy AI in their operations.

However, AI has also proven to be difficult to implement, he said. “And the reason is because, on the one hand, it’s an end-to-end problem, from the acquisition of data to the training to produce models and then deploy the models to production,” Das said.

“But it’s also a top-to-bottom, full-stack problem, because you have to think about the hardware, the software, the frameworks to make it easy to deploy AI, as well as an ecosystem of ISVs who can take AI and put it in the hands of the customer,” he continued.

That, in a nutshell, is why Nvidia developed AI Enterprise. Now available as a 1.0 release, AI Enterprise is a suite of tools, technologies, and frameworks designed to make it easier for companies to put AI capabilities into the hands of customers.

AI Enterprise itself has three main elements, according to Das.

First, it includes the RAPIDS software development kit (SDK), which provides accelerated data science on GPUs. Second, Nvidia has enabled popular machine learning frameworks like TensorFlow and PyTorch to run atop GPUs. Third, AI Enterprise includes the Triton inference server, which is designed to deploy models in production.

“All of that is packaged together for the first time into enterprise-grade, fully supported, certified, generally available to all customers world-wide,” Das said.

Nvidia started working with VMware about a year ago, Das said. Running AI Enterprise atop VMware vSphere, which Das dubbed “the de facto operating system” of the enterprise data center, lowers the bar for enterprise customers to begin adopting AI.

“Almost every IT administrator in the world is familiar with VMware vSphere and how to deploy it,” Das said. “What this basically does is it creates for the enterprise customers a platform that they can just consume, instead of having to build.”

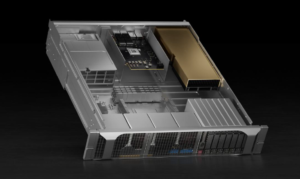

Prospective customers have a slew of certified servers to choose from for running AI Enterprise and vSphere. GPU-accelerated 1U and 2U systems from Dell, HPE, Lenovo, and many other OEMs have been certified to run the software. The idea is to bring AI-accelerated computing to the same servers that customers use to run their line-of-business (LOB) applications, such as human resources or ERP applications, Das said. This lowers the barrier to enterprise AI even further, he said.

Nvidia AI Enterprise is pre-certified to run on vSphere running atop industry-standard servers from Dell, HPE, and more

“The same servers that have been racked and stacked into private clouds and data centers today can now be utilized for AI, with a small amount of GPU added to the server: affordable, accessible, incremental cost,” Das said. The servers are certified to run a variety of Nvidia GPUs, including the A100, A30, A40, and A10.

Nvidia also unveiled a partnership with Domino Data Lab, which develops software designed to streamline the data science workflow. According to Das, Domino Data Lab fills a need for automating various machine learning operations, or MLOps.

The way AI is typically done, Das said, is that researchers in R&D continually tweak their models. They retrain them daily and deploy them frequently in the hopes of improving their predictive ability.

“Not quite good enough for enterprise IT,” Das said. “Domino Data Lab has an end-to-end system that solves that problem. It [provides] for reproducibility of the same training against the same data set. It has various capabilities for governance, so that IT can understand where models come from, what models should be deployed where.”

Customers can deploy AI Enterprise atop VMware vSphere, and get the benefits described above. They also now have the option to add Domino Data Lab into the mix, to gain better visibility into the end-to-end workflow, he said.

This article first appeared on sister website Datanami.


Alex Woodie has written about IT as a technology journalist for more than a decade. He brings extensive experience from the IBM midrange marketplace, including topics such as servers, ERP applications, programming, databases, security, high availability, storage, business intelligence, cloud, and mobile enablement. He resides in the San Diego area.