An Early Look at How the Metaverse Will Feed AI Models

The metaverse has many definitions: a parallel universe with digital versions of ourselves and the world, a 3D web to succeed today's two-dimensional web, and a graphical interface for predictive analytics and collaborative product design.

The visual world has many moving parts that unite many data types, interfaces, and AI models. The 3D volumetric interface packs in many data types, including elements with spatial and time dependencies. Those elements are important in capturing and analyzing past trends, which in turn are key to projections for the future.

Visual simulation has been used in significant projects like DeepMind’s AlphaFold AI research project, which can predict 3D structures of over 200 million known proteins. Protein folding is fundamental to drug discovery, and AlphaFold was used in the research for Covid-19 treatment. In the world of high-performance computing, the metaverse has provisions for researchers to collaborate in virtual simulations.

One of the metaverse’s biggest champions, Nvidia, has hyped the concept through a product called Omniverse, which is a set of AI, software and visual technologies for research and scientific modeling. But Nvidia may be distancing itself from the term “metaverse.” After an earful of “metaverse” in press releases and marketing material over the last few years, Nvidia didn’t use the term in a press briefing ahead of the Supercomputing 2022 show in November.

The graphics chip company has been vague about Omniverse, but clarified some of its inner workings this week. The platform uses a complex set of technologies to collect, collate, translate and correlate data, which is ultimately gathered into data sets. Artificial intelligence models are applied to those data sets and then provide the visual models to scientific applications, which could include understanding planetary trends or discovering drugs.

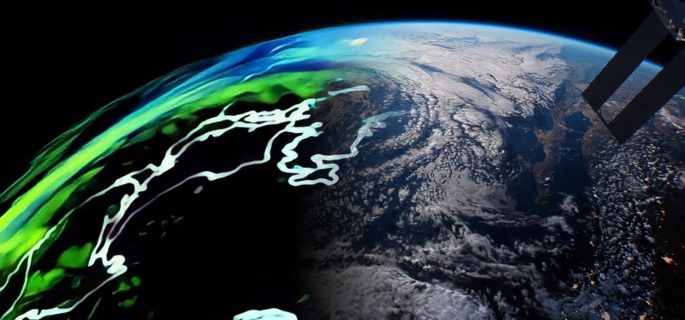

The U.S. National Oceanic and Atmospheric Administration will use Omniverse and technology from Lockheed Martin to visualize data of climate and weather trends, which will then be made available to researchers for forecasting and other studies. The partnership, announced at Supercomputing 2022, provides a glimpse into how data distribution, collaboration and artificial intelligence would work in metaverse-style computing.

Lockheed Martin’s OR3D platform collects information important to visualizing weather and climate data, including data from satellites, oceans, prior atmospheric trends, and sensors. That data, which is in specific OR3D file formats, will be fed into “connectors,” which will transform the data into file types based on the USD (Universal Scene Description) format.

The USD file format has operators that combine data such as positioning, orientation, colors, materials and layers in a 3D file. The conversion to USD file format is important as it makes the visual file shareable so multiple users can collaborate, which is an important consideration in virtual worlds. The USD file is also a translator that breaks down the different types of data in OR3D files into raw input for AI models.
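To make the idea of a connector concrete, here is a minimal sketch that turns a source record into USD's human-readable text form (`.usda`), combining position, orientation and color on one object. It is only an illustration: the `Feature` record, the `to_usda` helper and the "StormCell" example are hypothetical, the OR3D formats are not described in this article, and a real connector would use the USD libraries rather than assemble text by hand.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    """Hypothetical source record; not an actual OR3D structure."""
    name: str
    position: tuple      # x, y, z
    rotation_xyz: tuple  # degrees around x, y, z
    color: tuple         # r, g, b in the range 0..1
    radius: float


def _triple(values):
    # Render a 3-tuple in the parenthesized form used by .usda text.
    return "(" + ", ".join(f"{v:g}" for v in values) + ")"


def to_usda(features, root="World"):
    """Write the features as sphere prims under one root transform."""
    lines = ["#usda 1.0", "", f'def Xform "{root}"', "{"]
    for feat in features:
        lines += [
            f'    def Sphere "{feat.name}"',
            "    {",
            f"        double radius = {feat.radius:g}",
            f"        double3 xformOp:translate = {_triple(feat.position)}",
            f"        float3 xformOp:rotateXYZ = {_triple(feat.rotation_xyz)}",
            '        uniform token[] xformOpOrder = '
            '["xformOp:translate", "xformOp:rotateXYZ"]',
            f"        color3f[] primvars:displayColor = [{_triple(feat.color)}]",
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"


layer = to_usda([Feature("StormCell", (10, 0, 5), (0, 45, 0), (0.1, 0.4, 0.9), 2.0)])
print(layer)
```

Because the result is an ordinary text layer, it can be shared and composed with layers written by other tools, which is the collaboration property the format is valued for.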

The data types could include temporal and spatial elements in the 3D images, which are especially important in visualizing climate and weather data. For example, past weather trends need to be captured by the second or minute and mapped based on time dependencies.
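One way to picture data with both spatial and time dependencies is a grid in which each cell holds its own time series. The sketch below assumes that layout and uses linear interpolation to estimate a value between two recorded times. The cell keys, the temperature values and the `value_at` helper are invented for illustration and do not come from NOAA, Lockheed Martin or Nvidia.

```python
from bisect import bisect_left

# Hypothetical layout: (latitude, longitude) cell -> time-sorted samples,
# each sample being (seconds since start, temperature in degrees C).
grid = {
    (40.0, -105.0): [(0, 10.0), (60, 16.0), (120, 15.0)],
    (40.0, -104.0): [(0, 11.0), (60, 12.0), (120, 14.0)],
}


def value_at(samples, t):
    """Linearly interpolate a time-sorted series at time t (clamped at the ends)."""
    times = [time for time, _ in samples]
    if t <= times[0]:
        return samples[0][1]
    if t >= times[-1]:
        return samples[-1][1]
    i = bisect_left(times, t)            # first sample at or after t
    t0, v0 = samples[i - 1]
    t1, v1 = samples[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Snapshot of every cell at t = 30 seconds.
snapshot = {cell: value_at(series, 30) for cell, series in grid.items()}
print(snapshot)
```

Keeping the time axis explicit per cell means a snapshot at any moment can be rebuilt later, even when sensors report at different rates.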

An Nvidia tool called Nucleus, which is the main engine for Omniverse, converts the OR3D files to USD and handles the runtime, physical simulation and mapping of data from other file formats.

The datasets for AI could include weather data updated in real time, which can then be streamed into AI models. Nvidia’s multi-step process to feed raw image data into USD is complex but scalable, and it can account for multiple data types. It is viewed as more viable than connectors called APIs, which are specific to applications and do not scale across different data types in a single complex model.
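The article does not describe how the streaming itself works, but a common generic pattern for feeding live readings to a model is a sliding window over the incoming stream. The sketch below shows that pattern only; the `sliding_windows` helper and the sample readings are assumptions, not part of Omniverse.

```python
from collections import deque


def sliding_windows(stream, size):
    """Yield the most recent `size` readings each time a new one arrives."""
    window = deque(maxlen=size)   # old readings fall off automatically
    for reading in stream:
        window.append(reading)
        if len(window) == size:
            yield tuple(window)


# Stand-in for a live feed; a real source would be an open-ended iterator.
readings = iter([12.1, 12.4, 12.9, 13.5, 13.2])
windows = list(sliding_windows(readings, 3))
for w in windows:
    print(w)
```

Each yielded tuple is a fixed-size input that a forecasting model could consume as soon as new data arrives, without waiting for a complete data set.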

The USD file format has the upside of handling different types of data collected from satellites and sensors in real time, which helps build more accurate AI models. USD files can also be shared, which makes the data extensible to other applications.

Header image: Depiction of digital twin of global weather and climate conditions for National Oceanic and Atmospheric Administration. Credit: Nvidia.