OpenAI’s New GPT-3.5 Chatbot Can Rhyme like Snoop Dogg

While the world waits for GPT-4, the anticipated successor to the generative language model GPT-3, OpenAI has released GPT-3.5 in the form of a new AI chatbot, ChatGPT, which drew more than a million users in less than a week after launch.

ChatGPT is a fine-tuned version of GPT-3.5, an update that the company had not previously announced. The chatbot debuted in a public demonstration last week that showed its capabilities for generating text in a dialog format, which the company says enables it to answer follow-up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests. The model is a sibling of InstructGPT, a fine-tuned GPT-3 model trained to follow an instruction prompt and provide a detailed response.

Use cases for ChatGPT include digital content creation, writing and debugging code, and answering customer service queries. OpenAI calls GPT-3.5 a series of models trained on a blend of text and code from before Q4 2021. Rather than releasing a fully trained GPT-3.5, the company has created this series of models for distinct tasks. ChatGPT is based on text-davinci-003, which the company says is an improvement on GPT-3’s text-davinci-002.

The model was trained with Reinforcement Learning from Human Feedback (RLHF), following the same methods as InstructGPT but with differences in how the data was collected: “We trained an initial model using supervised fine-tuning: human AI trainers provided conversations in which they played both sides—the user and an AI assistant. We gave the trainers access to model-written suggestions to help them compose their responses,” OpenAI said in a blog post. “To create a reward model for reinforcement learning, we needed to collect comparison data, which consisted of two or more model responses ranked by quality.” To collect this data, the researchers took conversations between AI trainers and the chatbot, randomly selected a model-written message, sampled several alternative completions, and had the AI trainers rank them by quality.
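For readers curious how ranked responses can turn into a training signal, here is a minimal sketch of a pairwise ranking loss of the kind described in the InstructGPT research. The article and blog post do not spell out ChatGPT's exact loss, so treat this as an illustration under that assumption. The function name and the sample scores are hypothetical; in practice the scores would come from a neural reward model rather than being typed in by hand.

```python
import math
from itertools import combinations


def pairwise_ranking_loss(rewards_best_first):
    """Average of -log(sigmoid(r_better - r_worse)) over all ranked pairs.

    rewards_best_first: scalar scores a (hypothetical) reward model assigned
    to the candidate responses, listed in the order the human trainer ranked
    them, best first.
    """
    # combinations() preserves input order, so each pair is (better, worse).
    pairs = list(combinations(rewards_best_first, 2))
    if not pairs:
        raise ValueError("need at least two ranked responses")

    total = 0.0
    for better, worse in pairs:
        x = worse - better
        # Numerically stable softplus(x) = log(1 + e^x),
        # which equals -log(sigmoid(better - worse)).
        total += max(x, 0.0) + math.log1p(math.exp(-abs(x)))
    return total / len(pairs)


# Scores that agree with the human ranking give a lower loss
# than scores that contradict it.
agrees = pairwise_ranking_loss([2.0, 1.0, 0.0])
contradicts = pairwise_ranking_loss([0.0, 1.0, 2.0])
print(agrees < contradicts)
```

Minimizing a loss like this pushes the reward model to score a response higher whenever trainers ranked it higher. The reinforcement learning step then tunes the chatbot to produce responses the reward model scores well.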

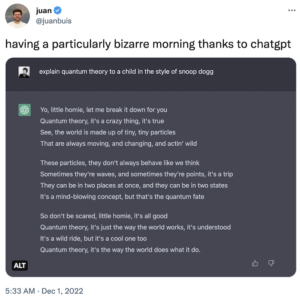

ChatGPT appears to have a grasp of word and sentence structure, and it uses rhyme and syllable counts to create songs and poems. Examples of its writing prowess have left the internet abuzz. For those who have recently been enjoying Snoop Dogg’s children’s show, “Doggyland,” and its charming way of breaking down complex topics, one Twitter user prompted ChatGPT to explain quantum theory to a child in Snoop’s signature style:

(Source: Twitter)

While it’s not as catchy as the rapper’s “Affirmation Song” (seriously, if you have not started your day with that song yet, give it a try), this generated song shows how creatively the model blends general information with a particular style of songwriting.

While some may worry about out-of-work writers or the end of essay writing in schools, there is still work to be done before an AI steals anyone’s job or lesson plans. Along with details of the new model, OpenAI also listed its limitations. The researchers noted that the model sometimes gives answers that sound plausible but are incorrect or nonsensical. ChatGPT is also sensitive to slight changes in input phrasing: it may claim ignorance when asked a question one way, then answer correctly when the question is subtly rephrased. The model is too wordy at times and overuses some phrases, which OpenAI says can result from biases in the training data, where trainers prefer longer and more comprehensive answers. When a question is ambiguous, the model sometimes guesses at the user’s intent, and the researchers say that ideally it should ask clarifying questions instead.

This shows how the Reinforcement Learning from Human Feedback training was conducted. (Source: OpenAI)

Perhaps the largest limitation is that, even though OpenAI has trained the model to refuse inappropriate requests, ChatGPT may still respond to harmful instructions or exhibit biased behavior, its creators say. OpenAI’s Moderation API helps warn about or block certain content deemed unsafe, but the company admits it may produce false negatives or false positives until enough user feedback is collected to improve it.

To address these limitations, the company says it plans to make regular model updates while collecting user feedback on problematic model outputs, with a particular focus on harmful outputs that “could occur in real-world, non-adversarial conditions, as well as feedback that helps us uncover and understand novel risks and possible mitigations.” To that end, the company also announced it is holding a ChatGPT Feedback Contest with a prize of $500 in API credit up for grabs.