Google’s Next TPU Looms Large as Company Wagers Future on AI

Google is betting its future on artificial intelligence, and it is swinging for the fences to prove it can keep pace with rival Microsoft, which has unexpectedly emerged as a frontrunner.

Google CEO Sundar Pichai managed to create excitement around the company's AI technologies at the recent IO conference. But many questions went unanswered about the hardware that will drive Google's next-generation AI, and with it, Google's future.

Pichai's keynote at Google IO was carefully curated, and the presentations showed how Google plans to embed AI in its products to make them smarter and easier to use. For example, Google Docs can use AI to help users draft documents, such as cover letters, and save time.

But Google did not match Microsoft on one thing: talking up the hardware behind its AI future.

The hardware and infrastructure driving AI are becoming an important part of the conversation, but Google shared no details about its next TPU or the other hardware training its upcoming generative AI models.

Pichai spent a few minutes talking about the company's upcoming foundation models, including Gemini, which will power its products, including search.

"Gemini was created from the ground up to be multimodal, highly efficient and ... built to enable future innovations. While still early, we are already seeing impressive multimodal capabilities not seen in prior models," Pichai said during the keynote.

Gemini is still in training, Pichai said. But company representatives declined to comment on whether the company's next-generation AI chip, called TPU v5, was in operation and being used to train Gemini.

Like PaLM and PaLM-2, Gemini will come in various sizes and can be adapted for different applications. But training Gemini could take time.

Google's DeepMind unit has developed technologies targeting specific verticals, including models for nuclear fusion and protein folding, and is pursuing artificial general intelligence, a hypothetical all-purpose digital brain that can answer any question. It is not clear whether Gemini is meant to compete with GPT-4 or with a future GPT-5.

Google's silence on hardware contrasts with Microsoft, which has been talking up the cost per transaction and response times of its Azure supercomputer, built on Nvidia GPUs.

Gemini is expected to succeed PaLM-2, which was also announced at Google IO. PaLM-2 is an upgrade over the original PaLM model, which powered Bard, Google's first AI chatbot and part of its experimental search offerings. The PaLM-2-based Bard is in beta and has been opened to users in 180 countries.

The PaLM-2 research paper, released this month, describes fine-tuning the model to make it less toxic and more accurate at reasoning, answering questions, and coding. According to the paper, the model was trained on TPU v4 hardware.

How quickly a company can release AI technologies, and how quickly those technologies respond to user queries, depends on its hardware. Microsoft is moving quickly to Nvidia's H100 GPUs after deploying OpenAI's GPT-4 in its search and productivity applications.

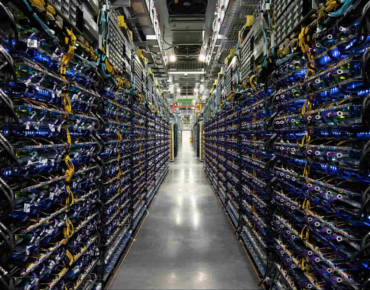

Google has turned to its homegrown Tensor Processing Units (TPUs) to train its generative AI models. Google’s most recent AI chip, TPU v4, was officially released in 2021, though the company had been testing it for a few years before that.

Google has built TPU v4 supercomputers, each with 4,096 of its AI chips. Google claims these are the first supercomputers with a circuit-switched optical interconnect, and the company has deployed hundreds of TPU v4 supercomputers in its cloud service.

At the recent ISC trade show in Hamburg, keynote speaker Dan Reed offered a striking estimate: training GPT-4 may have taken a year or more of sustained exascale-class computing.

"Think about any computational model we would run that we could justify devoting a calendar year to running that...you can make money doing that. That is what is driving it. It is also driving what is happening in the innovation space around the underlying technology," said Reed, a presidential professor of computer science at the University of Utah.

Innovation is concentrated in the hands of the few companies with the best AI hardware, including Google, Amazon, and Microsoft, Reed said.
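To put Reed's estimate in perspective, a year of exascale-class computing works out to an enormous operation count. The back-of-envelope Python sketch below assumes "exascale-class" means a sustained 10^18 floating-point operations per second over a 365-day year; both the rate and the duration are illustrative assumptions, not figures Reed gave.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds in a 365-day year
EXAFLOP_RATE = 1e18  # assumed sustained rate: 10^18 floating-point operations per second


def total_operations(ops_per_second, seconds):
    """Total operations performed at a constant rate for a given duration."""
    return ops_per_second * seconds


year_of_exascale = total_operations(EXAFLOP_RATE, SECONDS_PER_YEAR)
print(f"{year_of_exascale:.2e} operations")  # 3.15e+25 operations
```

Real training runs do not sustain peak throughput, so the hardware needed in practice would be larger than this idealized figure suggests.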

It is not yet clear what hardware was used to train GPT-4, but based on the timing, the model was likely trained on Microsoft's Azure supercomputer with Nvidia's A100 and H100 GPUs.

Google's AI applications are mostly tuned to run on TPUs. But Google diversified its AI hardware by announcing the A3 supercomputer, which will host up to 26,000 Nvidia H100 GPUs. The offering targets companies that already use Nvidia GPUs in their AI infrastructure, where Nvidia's CUDA software stack dominates AI workloads.

Google did not share specifications for Gemini, but the model is likely being trained on TPU v4, said Dylan Patel, chief analyst at SemiAnalysis, a boutique consulting firm.

It could take under a year to train the model, Patel said.

"They didn't give parameters or tokens, but based on some extrapolation and speculation, likely a couple of months," Patel said.

TPU v5 will ramp up this year, Patel said.

SemiAnalysis has also pointed to the trend in how many chips Google can link in a single TPU pod. "They increased this number to 1,024 with TPUv3 and to 4,096 with TPUv4. We would assume that the current generation TPUv5 can scale up to 16,384 chips without going through inefficient ethernet based on the trendline," the firm's analysts wrote in a newsletter post.
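The 16,384-chip figure follows from a simple reading of that trendline: pod size quadrupled from TPUv3 to TPUv4. A minimal Python sketch of the extrapolation, assuming the most recent generation-over-generation ratio simply repeats; the function name is hypothetical, and this is not necessarily how SemiAnalysis arrived at its estimate.

```python
def extrapolate_pod_size(pod_sizes, generations_ahead=1):
    """Project pod size forward, assuming the latest generation-over-generation ratio holds.

    Uses integer division, so it assumes the ratio between the last two sizes is exact.
    """
    growth = pod_sizes[-1] // pod_sizes[-2]  # 4096 // 1024 == 4
    projected = pod_sizes[-1]
    for _ in range(generations_ahead):
        projected *= growth
    return projected


# Chips per pod cited by SemiAnalysis: TPUv3, then TPUv4
pod_sizes = [1024, 4096]
print(extrapolate_pod_size(pod_sizes))  # 16384
```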

There have been hints of TPU v5 in the wild, but the research behind its design has been mired in controversy.

Researchers at Google informally announced the existence of TPU-v5 on Twitter in June 2021 and published a paper in the journal Nature on how AI was used to design the chip.

Google claimed that AI agents can be trained to floor-plan chips, which involves placing blocks or modules in the best locations on a chip. The agent improves with experience and can place modules it has not seen before.
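The placement problem itself is easier to picture with a toy example. The Python sketch below is not Google's method: the Nature paper trains a reinforcement-learning agent, whereas this swaps in a simple greedy heuristic that places blocks one at a time on a grid, choosing the free cell that minimizes half-perimeter wirelength (HPWL), a common proxy for how much wiring a layout needs. The block names, connections, and grid size are hypothetical.

```python
from itertools import product


def hpwl(nets, pos):
    """Half-perimeter wirelength: sum over nets of the bounding-box half-perimeter
    of the blocks already placed. Nets with fewer than two placed blocks add 0."""
    total = 0
    for net in nets:
        placed = [pos[b] for b in net if b in pos]
        if len(placed) < 2:
            continue
        xs = [x for x, _ in placed]
        ys = [y for _, y in placed]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def greedy_place(blocks, nets, grid_w, grid_h):
    """Place blocks in order, each on the free grid cell giving the lowest total HPWL."""
    pos = {}
    free = set(product(range(grid_w), range(grid_h)))
    for block in blocks:
        # sorted() makes tie-breaking deterministic: min() keeps the first best cell
        best = min(sorted(free), key=lambda cell: hpwl(nets, {**pos, block: cell}))
        pos[block] = best
        free.remove(best)
    return pos


# Hypothetical example: three blocks wired in a chain a-b-c on a 3x3 grid
nets = [("a", "b"), ("b", "c")]
placement = greedy_place(["a", "b", "c"], nets, grid_w=3, grid_h=3)
print(placement, hpwl(nets, placement))  # {'a': (0, 0), 'b': (0, 1), 'c': (0, 2)} 2
```

A greedy placer like this never revisits earlier decisions and does not learn across chips; the learned approach Google described aims to improve placements with experience and generalize to new blocks.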

"Our methods generate placements that are superior or comparable to human expert chip designers in under 6 hours, whereas the highest-performing alternatives require human experts in the loop and take several weeks for each of the dozens of blocks in a modern chip," Google said in the paper.

But academic researchers were skeptical of Google's claims. Google came under fire for keeping its research closed rather than opening it to public scrutiny, and the company ultimately posted only limited information on GitHub.

Andrew B. Kahng, a researcher at the University of California, San Diego, tried to reverse-engineer Google's chip-design techniques and found that in some cases, human chip designers and automated tools could be faster than Google's AI-only technique. He presented a paper on his findings at the International Symposium on Physical Design in March.

The AI product announcements at the IO conference fit Google's overall message of responsible AI, a major theme of Pichai's keynote. But the safe-AI message was quickly forgotten once executives on stage showed off new Google Pixel smartphones and tablets.

Still, executives said they had more at stake in getting AI right, especially with AI under scrutiny from users and governments. The important thing was to get AI right rather than hurry it out, which is what Microsoft did.

For example, a program called Universal Translator can dub the voice of a person speaking in a video into another language and adjust the lip movements so the person appears to speak that language natively. But the technology could also be used to create deepfakes, so Google is preventing misuse by sharing it only with a limited number of vetted partners.

The company also showed multimodal AI capabilities, an emerging technology. Most AI tools specialize in one thing: ChatGPT excels at text-to-text responses, while DALL-E specializes in text-to-image. Multimodal AI unifies chat, video, speech, and images in a single model, and Gemini will merge these capabilities into one universal model.

Google IO's second keynote of the day, aimed at developers, covered technologies such as WebGPU, a browser API that can accelerate AI on desktops. Because WebGPU is built into the browser, it can tap local hardware resources to accelerate AI on PCs and smartphones. It is considered a successor to WebGL, which is used for graphics.