Nvidia Releasing Open-Source Optimized TensorRT-LLM Runtime, with Commercial Foundational AI Models to Follow Later This Year


Nvidia's large language models will become generally available later this year, the company confirmed.

Organizations rely widely on Nvidia's graphics processors to build and run AI applications. The company has also created proprietary pre-trained models similar to OpenAI's GPT-4 and Google's PaLM 2.

Customers can take their own corpus of data, use it to customize Nvidia's pre-trained large language models, and build their own AI applications. The foundational models cover text, speech, images, and other forms of data.

Nvidia has three foundational models. The most publicized is NeMo, which includes Megatron, with which customers can build ChatGPT-style chatbots. NeMo also includes TTS (text-to-speech), which converts text into human-sounding speech.

The second model, BioNeMo, is a large language model targeted at the biotech industry. Nvidia's third AI model is Picasso, which can manipulate images and videos. Customers will be able to access Nvidia's foundational models through software and service products from the company and its partners.

"We'll be offering our foundational model services a little later this year," said Dave Salvator, a product marketing director at Nvidia, during a conference call. An Nvidia spokeswoman did not give availability dates for specific models.

The NeMo and BioNeMo services are currently available to customers in early access through the AI Enterprise software and will likely be the first to become commercially available. Picasso is further from release, and services built around that model may take longer to arrive.

"We are currently working with select customers, and others interested can sign up to get notified for when the service opens up more broadly," an Nvidia spokeswoman said.

The models will run best on Nvidia's GPUs, which are in short supply. The company is working to meet demand, Nvidia CFO Colette Kress said at the Citi Global Technology Conference this week.

The GPU shortage creates a barrier to adoption, but customers can access Nvidia's software and services through the company's DGX Cloud or through Amazon Web Services, Google Cloud, Microsoft Azure, or Oracle Cloud, which have H100 installations.

Nvidia's foundational models are important ingredients in the company's concept of an "AI factory," in which customers do not have to worry about coding or hardware. An AI factory can take in raw data and churn it through GPUs and LLMs. The output is actionable data for companies.

The LLMs will be part of the AI Enterprise software suite, which includes frameworks, foundation models, and other AI technologies. The technology stack also includes tools such as TAO, a no-code AI programming environment, and NeMo Guardrails, which can analyze and redirect model output to make responses more reliable.
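To illustrate what "analyzing and redirecting output" can mean in practice, here is a minimal plain-Python sketch, assuming a rail is simply a pattern that, when it matches a model's draft response, replaces that response with a safe fallback. It does not use NeMo Guardrails' actual API or configuration format; `OutputRail`, `apply_output_rails`, and the example rules are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class OutputRail:
    """Hypothetical output check: if `pattern` matches, the response is replaced by `fallback`."""
    name: str
    pattern: re.Pattern
    fallback: str


def apply_output_rails(response: str, rails: list) -> str:
    # Check the model's draft response against each rail in order; the first match redirects it.
    for rail in rails:
        if rail.pattern.search(response):
            return rail.fallback
    # No rail fired, so the original response passes through unchanged.
    return response


# Hypothetical rules: block anything that looks like a card number, and keep the bot on topic.
RAILS = [
    OutputRail(
        name="no_card_numbers",
        pattern=re.compile(r"\b(?:\d[ -]?){13,16}\b"),
        fallback="I can't share payment card details.",
    ),
    OutputRail(
        name="stay_on_topic",
        pattern=re.compile(r"\b(election|candidate)\b", re.IGNORECASE),
        fallback="I can only help with questions about our products.",
    ),
]

print(apply_output_rails("Your card is 4111 1111 1111 1111.", RAILS))
# prints: I can't share payment card details.
```

Regular expressions keep the sketch self-contained; a production guardrail could instead use richer checks, such as a second model judging whether a response is on topic.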

Nvidia is relying on its partners to sell AI models such as NeMo and to help companies deploy them on its accelerated computing platform.

Nvidia's partners include software companies Snowflake and VMware and AI service provider Hugging Face. Nvidia has also partnered with consulting company Deloitte for larger deployments. Nvidia has already announced it will bring its NeMo LLM to Snowflake Data Cloud, where many large organizations store their data. Snowflake Data Cloud users will be able to generate AI-driven insights and create AI applications by connecting their data to NeMo and Nvidia's GPUs.

The partnership with VMware brings the AI Enterprise software to VMware Private Cloud. VMware's vSphere and Cloud Foundation platforms provide administrative and management tools for AI deployments in virtual machines across Nvidia's hardware in the cloud. The deployments can also extend to non-Nvidia CPUs.

Nvidia is about 80% a software company, and its software platform is the operating system for AI, said Manuvir Das, vice president for enterprise computing at the company, during Goldman Sachs' Communacopia+Technology conference.

Last year, people were still wondering how AI would help, but this year, "customers come to see us now as they already know what the use case is," Das said. The barrier to entry for AI remains high, and much of the challenge lies in developing foundational models such as NeMo, GPT-4, or Meta's Llama 2.

"You have to find all the data, the right data, you have to curate it. You have to go through this whole training process before you get a usable model," Das said.

But after millions of dollars invested in development and training, the models are now becoming available to customers.

"Now they're ready to use. You start from there, you finetune with your own data, and you use the model," Das said.
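The "start from there, finetune with your own data" workflow Das describes can be shown in miniature. The sketch below is a toy, assuming a one-feature linear model stands in for an LLM: it begins from hypothetical "pre-trained" parameters and continues gradient-descent training on a small, hypothetical customer dataset. It is not how NeMo fine-tunes models; real LLM fine-tuning typically updates billions of parameters (or a small set of added adapter parameters) with a deep-learning framework, but the principle of resuming training from pre-trained weights is the same.

```python
def predict(w, b, x):
    """Toy one-feature linear model standing in for a large pre-trained model."""
    return w * x + b


def mse(w, b, data):
    """Mean squared error of the model on (x, y) pairs."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)


def finetune(w, b, data, lr=0.05, epochs=500):
    """Continue training from the given (pre-trained) parameters with plain gradient descent."""
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = predict(w, b, x) - y
            grad_w += 2 * err * x / n  # d(MSE)/dw
            grad_b += 2 * err / n      # d(MSE)/db
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical "pre-trained" parameters, assumed to have been learned elsewhere on generic data.
pretrained_w, pretrained_b = 2.0, 0.0
# Hypothetical customer data following a slightly different relationship (y = 2.5x + 1).
custom_data = [(x, 2.5 * x + 1.0) for x in range(5)]

tuned_w, tuned_b = finetune(pretrained_w, pretrained_b, custom_data)
print(f"error before fine-tuning: {mse(pretrained_w, pretrained_b, custom_data):.3f}")
print(f"error after fine-tuning:  {mse(tuned_w, tuned_b, custom_data):.6f}")
```

Starting from the pre-trained values rather than from scratch is the point of the exercise: the model only has to learn how the customer's data differs from what it already knows.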

Nvidia has projected a $150 billion market opportunity for the AI Enterprise software stack, half the size of the $300 billion hardware opportunity, which includes GPUs and systems. The company's CEO, Jensen Huang, has previously described AI computing as a radical shift from the old style of computing that relied on CPUs.

Open-Source TensorRT-LLM

Nvidia separately announced TensorRT-LLM, which improves the inference performance of foundational models on its GPUs. The runtime can extract the best inference performance from a wide range of models, such as BLOOM, Falcon, and Meta's latest Llama models.

A heavy-duty H100 is considered the best GPU for training models but may be overkill for inference when its power draw is weighed against its performance. Nvidia offers the lower-power L40S and L4 GPUs for inference but is also making the H100 viable for inference when those GPUs are not busy.

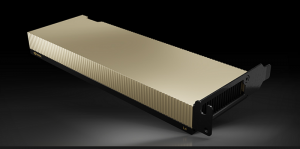

Nvidia's low-power L4 GPU

TensorRT-LLM is specially optimized for low-level inference work on the H100, reducing idle time and keeping the GPU close to 100% occupied, said Ian Buck, vice president of hyperscale and HPC at Nvidia.

Buck said that the combination of Hopper and TensorRT-LLM software improved inference performance eightfold compared with the A100 GPU.

"As people develop new large language models, these kernels can be reused to continue to optimize and improve performance and build new models. As the community implements new techniques, we will continue to place them … into this open-source repository," Buck continued.

TensorRT-LLM includes a new kind of GPU scheduler called in-flight batching, which allows work to enter and exit the GPU independently of other tasks.

"In the past, batching came in as work requests. The batch was scheduled onto a GPU or processor, and then when that entire batch was completed … the next batch would come in. Unfortunately, in high variability workloads, that would be the longest workload ... and we often see GPUs and other things be underutilized," Buck said.

With TensorRT-LLM and in-flight batching, work can enter and leave the batch independently and asynchronously, keeping the GPU 100% occupied.

"This all happens automatically inside the TensorRT-LLM runtime system, and it dramatically improves H100 efficiency," Buck said.
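The difference between batch-at-a-time scheduling and in-flight batching that Buck describes can be reproduced with a toy simulation. The sketch below is an illustration under simplifying assumptions, not TensorRT-LLM's scheduler: each request needs a known number of decoding steps, every step advances all active requests by one token, and the GPU has a fixed number of batch slots. It ignores memory limits such as KV-cache capacity and the cost of processing prompts; the function names and the workload are hypothetical.

```python
from collections import deque


def static_batching(lengths, batch_size):
    """Return GPU steps when each fixed batch runs until its longest request finishes."""
    steps = 0
    for start in range(0, len(lengths), batch_size):
        batch = lengths[start:start + batch_size]
        # The whole batch holds the GPU until its slowest request is done.
        steps += max(batch)
    return steps


def inflight_batching(lengths, batch_size):
    """Return GPU steps when a finished request's slot is refilled immediately."""
    waiting = deque(lengths)
    active = []  # remaining decoding steps for each occupied slot
    steps = 0
    while waiting or active:
        # Admit waiting requests into any free slots before the next step.
        while waiting and len(active) < batch_size:
            active.append(waiting.popleft())
        # One step advances every active request; requests on their last step leave.
        steps += 1
        active = [remaining - 1 for remaining in active if remaining > 1]
    return steps


def utilization(lengths, batch_size, steps):
    """Fraction of slot-steps that did useful work."""
    return sum(lengths) / (steps * batch_size)


# Hypothetical high-variability workload: a few long requests mixed with short ones.
requests = [10, 2, 2, 2, 10, 2, 2, 2]
SLOTS = 4

for name, schedule in [("static", static_batching), ("in-flight", inflight_batching)]:
    steps = schedule(requests, SLOTS)
    print(f"{name}: {steps} steps, {utilization(requests, SLOTS, steps):.0%} slot utilization")
# static: 20 steps, 40% slot utilization
# in-flight: 12 steps, 67% slot utilization
```

In this toy run, short requests no longer wait behind a long one for the next batch, so the same work finishes in fewer steps. Real gains depend on the workload and on the constraints the sketch leaves out.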

The runtime is in early access now and will likely be released next month.