MLPerf Training 4.0 – Nvidia Still King; Power and LLM Fine Tuning Added

There are really two stories packaged in the most recent MLPerf Training 4.0 results, released today. The first, of course, is the results. Nvidia (currently king of accelerated computing) wins again, sweeping all nine “events” (workflows) as it were. Its lead remains formidable. Story number two, perhaps more important, is MLPerf itself. It has matured through years to become a far more useful broad tool to evaluate competing ML-centric systems and operating environments.

Bottom line, as a handicapping tool for GPUs and their like, MLPerf is perhaps less than first intended because there are few contenders, but quite useful as a tool for prospective buyers to evaluate systems and for developers to assess rival systems. MLPerf has increasingly becoming a standard item on their checklists. (We’ll skip the v. slight confusion from mixing MLPerf’s parent entity’s name, MLCommons, with MLPerf, the name of the portfolio of benchmark suites).

Bottom line, as a handicapping tool for GPUs and their like, MLPerf is perhaps less than first intended because there are few contenders, but quite useful as a tool for prospective buyers to evaluate systems and for developers to assess rival systems. MLPerf has increasingly becoming a standard item on their checklists. (We’ll skip the v. slight confusion from mixing MLPerf’s parent entity’s name, MLCommons, with MLPerf, the name of the portfolio of benchmark suites).

The latest MLPerf Training exercise adds two new benchmarks — LoRA fine-tuning of LLama 2 70B and GNN (graph neural network) — and power metrics (optional) were also added to training. There were more than 205 performance results from 17 submitting organizations: ASUSTeK, Dell, Fujitsu, Giga Computing, Google, HPE, Intel (Habana Labs), Juniper Networks, Lenovo, NVIDIA, NVIDIA + CoreWeave, Oracle, Quanta Cloud Technology, Red Hat + Supermicro, Supermicro, Sustainable Metal Cloud (SMC), and tiny corp.

While Nvidia GPUs again dominated, Intel’s Habana Gaudi2 accelerator, Google TPU v-5P, and AMD’s Radeon RX 7900 XTX GPU (first-time participant) all had strong showings.

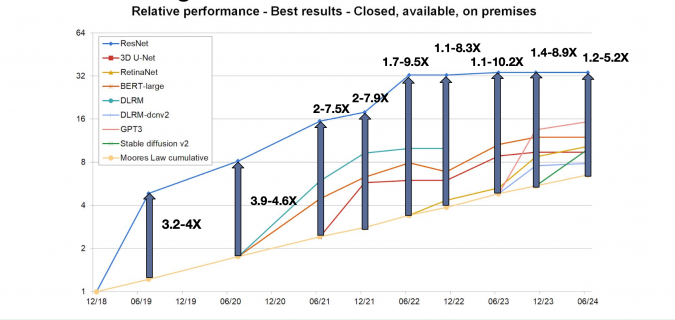

As shown above, MLCommons has steadily grown its portfolio of benchmarks. Training, introduced in 2018, was the first and is generally regarded as the most computationally intense. David Kanter emphasized the improving performance of training submission, crediting the MLPerf exercises with helping drive gains.

“[MLPerf], by bringing the whole community together, focuses us on what’s important. And this is a slide that shows the benefits. [It] is an illustration of Moore’s law — that is the yellow line at the bottom. On the what x axis is time, and on the y axis is relative performance. This is normalized performance for the best results on each benchmark in MLPerf, and how it improves over time,” said Kanter at a media/analyst pre-briefing.

“What you can see (slide below) is that in many cases we’re delivering five or 10x better performance than Moore’s law. That means that not only are we taking advantage of better silicon, but we’re getting better architectures, better algorithms, better scaling, all of these things come together to give us dramatically better performance over time. If you look back in the rear view mirror, since we started, it’s about 50x better, slightly more, but even if you look at relative to the last cycle, some of our benchmarks got nearly 2x better performance, in particular Stable Diffusion. So that’s pretty impressive in six months.”

Adding graph neural network and fine tuning to the training exercise were natural steps. Ritika Borkar and Hiwot Kassa, MLPerf Training working group co-chairs, walked through the new workflows.

Borkar said, “There’s a large class of data in the world which can be represented in the form of graphs — a collection of nodes and edges connecting the different nodes. For example, social networks, molecules, database, and log pages, and GNN, or graph neural networks, is the class of networks that are used to encapsulate information from such graph structured data, and as a result, you see GNNs in a wide range of commercial applications, such as recommender systems or ads fraud detection or drug discovery or doing graph answering or knowledge graphs.

“An example noted here is Alibaba Taobao recommendation system, which is based on a GNN network; it uses user behavior graph to which is of the magnitude of billions of vertices and edges. So as you can imagine, when you have to when a system has to work with graphs of this large magnitude, there are interesting performance characteristics that that are demanded from the system. And on that spirit, we wanted to include this kind of challenge in the developer benchmark suit.”

With regard to fine tuning LLMs, Kassa said, “We can divide the state of training LLM, at a high level, into two states. One is free training, where LLMs are trained on large unlabeled data for general purpose language understanding. This can take days to months to train and is computationally intensive. [The] GPT-3 benchmark and MLPerf training shows this phase, so we have that part already covered. The next phase is fine tuning, [which] is where we’re taking pre trained model and we’re training it with specific task, specific labeled data sets to enhance its accuracy on specific tasks, like, text summarization on specific topics. In fine tuning, we’re using less compute or memory resource [and] we have less cost for training. It’s becoming widely accessible and used in large range of AI users, and adding it to MLPerf is important and timely right now.

“When selecting fine tuning techniques we considered a number of techniques, and we selected parameter efficient fine tuning, which is a fine tuning technique that trains or tunes only a subset of the overall model parameters (PEFT); this significantly reduced training time and computational efficiency compared to the traditional fine tuning techniques that tunes all parameters. And from the PEFT method, we selected LoRA (low-rank adaption) that enables training of dense layers through rank decomposition matrix while maintaining the pre-trained weights frozen. This technique significantly reduced hardware requirement, memory usage, storage while still being performant compared to a fully fine tuned models,” she said.

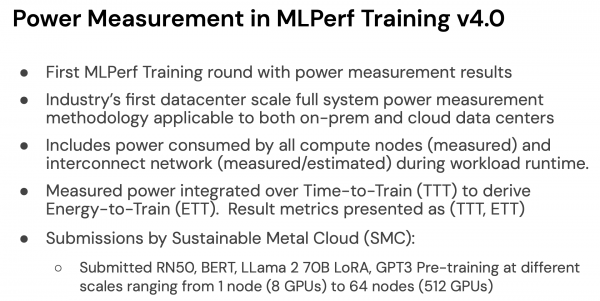

Inclusion of the power metric in training was also new and now widely used yet, but given current concerns around energy use by AI technology and datacenters generally, its importance seems likely to grow.

As always digging out meaningful results from MLPerf training a painstaking effort in that system configurations vary widely and as does performance across the different workflows. Actually, developing an easier way to accomplish this might be a useful addition to MLPerf presentation arsenal, though perhaps unlikely as MLCommons is unlikely to want to spotlight better performers and antagonize lesser performers. Still, it’s perhaps a worthwhile goal. Here’s a link to the Training 4.0 results.

Per usual practice, MLCommons invites participants to submit brief statements intended to spotlight features that improve performance on the MLPerf benchmarks. So do this well while others are no more than marketing info. Those statements are appended to this article and worth scanning.

Nvidia was again the dominant winner in terms of accelerator performance. This is an old refrain. Intel (Habana, Gaudi2), AMD, and Google all had entries. Intel’s Gaudi3 is expected to available in the fall and the company said it plans to enter it in the fall MLPerf Inference benchmark.

Here are brief excerpts from three submitted statements:

Intel — “Training and fine-tuning results show competitive Intel Gaudi accelerator performance at both ends of the training and fine-tuning spectrum. The v4.0 benchmark features time-to-train (TTT) of a representative 1% slice of the GPT-3 model, a valuable measurement for assessing training performance on a very large 175B parameter model. Intel submitted results for GPT-3 training for time-to-train on 1024 Intel Gaudi accelerators, the largest cluster result to be submitted by Intel to date, with TTT of 66.9 minutes, demonstrating strong Gaudi 2 scaling performance on ultra-large LLMs.

“The benchmark also features a new training measurement: fine-tuning a Llama 2 model with 70B parameters. Fine-tuning LLMs is a common task for many customers and AI practitioners, making it a highlight relevant benchmark for everyday applications. Intel’s submission achieved a time-to-train of 78.1 minutes using 8 Intel Gaudi 2 accelerators. The submission leverages Zero-3 from DeepSpeed for optimizing memory efficiency and scaling during large model training, as well as Flash-Attention-2 to accelerate attention mechanisms.”

Juniper Networks — “For MLPerf Training v4.0, Juniper submitted benchmarks for BERT, DLRM, and Llama2-70B with LoRA fine tuning on a Juniper AI Cluster consisting of Nvidia A100 and H100 GPUs using Juniper’s AI Optimized Ethernet fabric as the accelerator interconnect. For BERT, we optimized pre-training tasks using a Wikipedia dataset, evaluating performance with MLM accuracy. Our DLRM submission utilized the Criteo dataset and HugeCTR for efficient handling of sparse and dense features, with AUC as the evaluation metric, achieving exceptional performance. The Llama2-70B model was fine-tuned using LoRA techniques with DeepSpeed and Hugging Face Accelerate, optimizing gradient accumulation for balanced training speed and accuracy.

“Most submissions were made on a multi-node setup, with PyTorch, DeepSpeed, and HugeCTR optimizations. Crucially, we optimized inter-node communication with RoCE v2, ensuring low-latency, high-bandwidth data transfers, which are critical for efficient, distributed training workloads.”

Google — “Cloud TPU v5p exhibits near-linear scaling performance (approximately 99.9% scaling efficiency) on the GPT-3 175b model pre-training task, ranging from 512 to 6144 chips. Previously, in the MLPerf Training v3.1 submission for the same task, we demonstrated horizontal scaling capabilities of TPU v5e across 16 pods (4096 chips) connected over a data center network (across multiple ICI domains). In this submission, we are showcasing the scaling to 6144 TPU v5p chips podslice (within a single ICI domain). For a comparable compute scale (1536 TPU v5p chips versus 4096 TPU v5e chips), this submission also shows an approximate 31% improvement in efficiency (measured as model flops utilization).

“This submission also showcases Google/MaxText, Google Cloud’s reference implementation for large language models. The training was done using Int8 mixed precision, leveraging Accurate Quantized Training. Near-linear scaling efficiency demonstrated across 512, 1024, 1536, and 6144 TPU v5p slices is an outcome of optimizations from codesign across the hardware, runtime & compiler (XLA), and framework (JAX). We hope that this work will reinforce the message of efficiency which translates to performance per dollar for large-scale training workloads.”

Back to Nvidia, last but hardly least. David Salvator, director of accelerated computing products, led a separate briefing on Nvidia’s latest MLPerf showing and perhaps a little chest-thumping is justified.

“So there are nine workloads in MLPerf and we’ve set new records on five of those nine workloads, which you see sort of across the top row (slide below). There a couple of these are actually brand new workloads, the graph, neural network, as well as the LLM fine tuning workloads, are net new workloads to this version of MLPerf. But in addition, we are constantly optimizing and tuning our software, and we are actually publishing our containerized software to the community on about a monthly cadence,” said Salvator.

“In addition to the two new models where we set new records, we’ve even improved our performance on three of the existing models, which you see on kind of the right hand side. We also have standing records on the additional four workloads, so we basically hold records across all nine workloads of MLPerf training. And this is just an absolute tip of the hat and [to] our engineering teams to continually improve performance and get more performance from our existing architectures. These were all achieved on the hopper architecture right.”

“About a year ago, right, we did a submission at about 3500 GPUs. That was with the software we had at the time. This is sort of a historical compare. If you fast forward to today, on a most recent submission of 11,616 GPUs, which is the biggest at scale submission we’ve ever done. What you see is that we’ve just about tripled the results, plus a little. Here’s what’s interesting about that. If you actually do the math on 1116, divided by 3584 you’ll see that it’s about 3.2x so what that means is we are getting essentially linear scaling right now. A lot of times with workloads, as you go to much larger scales, if you can get 65%-to-70% scaling efficiency, you’re pretty happy if you get 80% scaling efficiency. What we’ve been able to do through a combination of more hardware but also a lot of software tuning to get linear scaling on this workload. It’s very rare for this to happen,” said Salvator.

Salvator has also posted a blog on the latest results. It’s best to dig into the specific results to ferret useful insight for your particular purposes.

Link to MLPerf Training 4.0 results, https://mlcommons.org/benchmarks/training/

MLPerf 4.0 Submitted Statement by Vendors

The submitting organizations provided the following descriptions as a supplement to help the public understand their MLPerf Training v4.0 submissions and results. The statements do not reflect the opinions or views of MLCommons.

Asus

ASUS, a global leader in high-performance computing solutions, proudly announces its collaboration with MLPerf, the industry-standard benchmark for machine learning performance, to demonstrate the exceptional capabilities of its ESC-N8A and ESC8000A-E12 servers in the MLPerf Training v4.0 benchmarks.

The collaboration highlights ASUS’s commitment to advancing AI and machine learning technologies. The ESC-N8A and ESC8000A-E12 servers, equipped with cutting-edge hardware, have showcased remarkable performance and efficiency in the rigorous MLPerf Training v4.0 evaluations.

This collaboration with MLPerf reinforces ASUS’s role as a pioneer in AI and machine learning innovation. By continually pushing the boundaries of what is possible, ASUS aims to empower researchers, data scientists, and enterprises with the tools they need to drive technological advancements and achieve breakthrough results.

Partnering with MLPerf allows us to validate our servers’ capabilities in the most demanding AI benchmarks. The outstanding results achieved by the ESC-N8A and ESC8000A-E12 servers in MLPerf Training v4.0 highlight our commitment to delivering high-performance, scalable, and efficient solutions for AI workloads

Dell Technologies

Dell Technologies continues to accelerate the AI revolution by creating the industry’s first AI Factory with NVIDIA. At the heart of this factory is the continued commitment to advancing AI workloads. MLPerf submissions serve as a testament to Dell’s commitment to helping customers make informed decisions. In the MLPerf v4.0 Training Benchmark submissions, Dell PowerEdge servers showed excellent performance.

Dell submitted two new models, including Llama 2 and Graph Neural Networks. The Dell PowerEdge XE9680 server with 8 NVIDIA H100 Tensor Core GPUs continued to deliver Dell’s best performance results.

The Dell PowerEdge XE8640 server with four NVIDIA H100 GPUs and its direct liquid-cooled (DLC) sibling, the Dell PowerEdge XE9640, also performed very well. The XE8640 and XE9640 servers are ideal for applications requiring a balanced ratio of fourth-generation Intel Xeon Scalable CPUs to SXM or OAM GPU cores. The PowerEdge XE9640 was purpose-built for high-efficiency DLC, reducing the four-GPU server profile to a dense 2RU form factor, yielding maximum GPU core density per rack.

The Dell PowerEdge R760xa server was also tested, using four L40S GPUs and ranking high in performance for training these models. The L40S GPUs are PCIe-based and power efficient. The R760xa is a mainstream 2RU server with optimized power and airflow for PCIe GPU density.

Generate higher quality, faster time-to-value predictions, and outputs while accelerating decision-making with powerful solutions from Dell Technologies. Come and take a test drive in one of our worldwide Customer Solution or collaborate with us using one of our innovation labs to tap into one of our Centers of Excellence.

Fujitsu

Fujitsu offers a fantastic blend of systems, solutions, and expertise to guarantee maximum productivity, efficiency, and flexibility delivering confidence and reliability. Since 2020, we have been actively participating in and submitting to inference and training rounds for both data center and edge divisions.

In this round, we submitted benchmark results with two systems. The first is PRIMERGY CDI, equipped with 16 L40S GPUs in external PCIe-BOXes, and the second is PRIMERGY GX2560M7, equipped with four H100 SXM GPUs inside the server. The PRIMERGY CDI can accommodate up to 20 GPUs in three external PCI-BOXes as a single node server and can share the resources among multiple nodes. Additionally, the system configuration can be adjusted according to the size of training and inference workloads. Measurement results are displayed in the figure below. In image-related benchmarks, PRIMERGY CDI dominated, while PRIMERGY GX2560K7 excelled in language-related benchmarks.

Our purpose is to make the world more sustainable by building trust in society through innovation. With a rich heritage of driving innovation and expertise, we are dedicated to contributing to the growth of society and our valued customers. Therefore, we will continue to meet the demands of our customers and strive to provide attractive server systems through the activities of MLCommons.

Giga Computing

The MLPerf Training benchmark submitter – Giga Computing – is a GIGABYTE subsidiary that made up GIGABYTE’s enterprise division that designs, manufactures, and sells GIGABYTE server products.

The GIGABYTE brand has been recognized as an industry leader in HPC & AI servers and has a wealth of experience in developing hardware for all data center needs, while working alongside technology partners: NVIDIA, AMD, Ampere Computing, Intel, and Qualcomm.

In 2020, GIGABYTE joined MLCommons and submitted its first system. And with this round of the latest benchmarks, MLPerf Training v4.0 (closed division), the submitted GIGABYTE G593 Series platform has shown its versatility in supporting both AMD EPYC and Intel Xeon processors. The performance is in the pudding, and these benchmarks (v3.1 and v4.0) exemplify the impressive performance that is possible in the G593 series. Additionally, greater compute density and rack density are also a part of the G593 design that has been thermally optimized in a 5U form factor.

- ● GIGABYTE G593-SD1: dense accelerated computing in a 5U server o 2x Intel Xeon 8480+ CPUs

o 8x NVIDIA SXM H100 GPUs

o Optimized for baseboard GPUs - ● Benchmark frameworks: Mxnet, PyTorch, dgl, hugectr

To learn more about our solutions, visit: https://www.gigabyte.com/Enterprise

Giga Computing’s website is still being rolled out: https://www.gigacomputing.com/

Google Cloud

In the MLPerf Training version 4.0 training submission, we are pleased to present Google Cloud TPU v5p, our most scalable TPU in production.

Cloud TPU v5p exhibits near-linear scaling performance (approximately 99.9% scaling efficiency) on the GPT-3 175b model pre-training task, ranging from 512 to 6144 chips. Previously, in the MLPerf Training v3.1 submission for the same task, we demonstrated horizontal scaling capabilities of TPU v5e across 16 pods (4096 chips) connected over a data center network (across multiple ICI domains). In this submission, we are showcasing the scaling to 6144 TPU v5p chips podslice (within a single ICI domain). For a comparable compute scale (1536 TPU v5p chips versus 4096 TPU v5e chips), this submission also shows an approximate 31% improvement in efficiency (measured as model flops utilization).

This submission also showcases Google/MaxText, Google Cloud’s reference implementation for large language models. The training was done using Int8 mixed precision, leveraging Accurate Quantized Training. Near-linear scaling efficiency demonstrated across 512, 1024, 1536, and 6144 TPU v5p slices is an outcome of optimizations from codesign across the hardware, runtime & compiler (XLA), and framework (JAX). We hope that this work will reinforce the message of efficiency which translates to performance per dollar for large-scale training workloads.

Hewlett Packard Enterprise

Hewlett Packard Enterprise (HPE) demonstrated strong inference performance in MLPerf Inference v4.0 along with strong AI model training and fine-tuning performance in MLPerf Training v4.0. Configurations this round featured an HPE Cray XD670 server with 8x NVIDIA H100 SXM 80GB Tensor Core GPUs and HPE ClusterStor parallel storage system as backend storage. HPE Cray systems combined with HPE ClusterStor are the perfect choice to power data-intensive workloads like AI model training and fine-tuning.

HPE’s results this round included single- and double-node configurations for on-premise deployments. HPE participated across three categories of AI model training: large language model (LLM) fine-tuning, natural language processing (NLP) training, and computer vision training. In all submitted categories and AI models, HPE Cray XD670 with NVIDIA H100 GPUs achieved the company’s fastest time-to-train performance to date for MLPerf on single- and double-node configurations. HPE also demonstrated exceptional performance compared to previous training submissions, which used NVIDIA A100 Tensor Core GPUs.

Based on our benchmark results, organizations can be confident in achieving strong performance when they deploy HPE Cray XD670 to power AI training and tuning workloads.

Intel (Habana Labs)

Intel is pleased to participate in the MLCommons latest benchmark, Training v4.0, submitting time-to-train results for GPT-3 training and Llama-70B fine-tuning with its Intel Gaudi 2 AI accelerators.

Training and fine-tuning results show competitive Intel Gaudi accelerator performance at both ends of the training and fine-tuning spectrum. The v4.0 benchmark features time-to-train (TTT) of a representative 1% slice of the GPT-3 model, a valuable measurement for assessing training performance on a very large 175B parameter model. Intel submitted results for GPT-3 training for time-to-train on 1024 Intel Gaudi accelerators, the largest cluster result to be submitted by Intel to date, with TTT of 66.9 minutes, demonstrating strong Gaudi 2 scaling performance on ultra-large LLMs.

The benchmark also features a new training measurement: fine-tuning a Llama 2 model with 70B parameters. Fine-tuning LLMs is a common task for many customers and AI practitioners, making it a highlight relevant benchmark for everyday applications. Intel’s submission achieved a time-to-train of 78.1 minutes using 8 Intel Gaudi 2 accelerators. The submission leverages Zero-3 from DeepSpeed for optimizing memory efficiency and scaling during large model training, as well as Flash-Attention-2 to accelerate attention mechanisms.

The benchmark task force – led by the engineering teams from Intel’s Habana Labs and Hugging Face, who also serve as the benchmark owners – are responsible for the reference code and benchmark rules.

The Intel team looks forward to submitting MLPerf results based on the Intel Gaudi 3 AI accelerator in the upcoming inference benchmark. Announced in April, solutions based on Intel Gaudi 3 accelerators will be generally available from OEMs in fall 2024.

Juniper Networks

Juniper is thrilled to collaborate with MLCommons to accelerate AI innovation and make data center infrastructure simpler, faster and more economical to deploy. Training AI models is a massive, parallel processing problem dependent on robust networking solutions. AI workloads have unique characteristics and present new requirements for the network, but solving tough challenges such as these is what Juniper has been doing for over 25 years.

For MLPerf Training v4.0, Juniper submitted benchmarks for BERT, DLRM, and Llama2-70B with LoRA fine tuning on a Juniper AI Cluster consisting of Nvidia A100 and H100 GPUs using Juniper’s AI Optimized Ethernet fabric as the accelerator interconnect. For BERT, we optimized pre-training tasks using a Wikipedia dataset, evaluating performance with MLM accuracy. Our DLRM submission utilized the Criteo dataset and HugeCTR for efficient handling of sparse and dense features, with AUC as the evaluation metric, achieving exceptional performance. The Llama2-70B model was fine-tuned using LoRA techniques with DeepSpeed and Hugging Face Accelerate, optimizing gradient accumulation for balanced training speed and accuracy.

Most submissions were made on a multi-node setup, with PyTorch, DeepSpeed, and HugeCTR optimizations. Crucially, we optimized inter-node communication with RoCE v2, ensuring low-latency, high-bandwidth data transfers, which are critical for efficient, distributed training workloads.

Juniper is committed to an operations-first approach to help customers manage the entire data center lifecycle with market-leading capabilities in intent-based networking, AIOps and 800Gb Ethernet. Open technologies such as Ethernet and our Apstra data center fabric automation software eliminate vendor lock-in, take advantage of the industry ecosystem to push down costs and drive innovation, and enable common network operations across AI training, inference, storage and management networks. In addition, rigorously pre-tested, validated designs are critical to ensure that customers can deploy secure data center infrastructure on their own.

Lenovo

Leveraging MLPerf Training v4.0, Lenovo Drives AI Innovation

At Lenovo, we’re dedicated to empowering our customers with cutting-edge AI solutions that transform industries and improve lives. To achieve this vision, we invest in rigorous research and testing using the latest MLPerf Training v4.0 benchmarking tools.

Benchmarking Excellence: Collaborative Efforts Yield Industry-Leading Results

Through our strategic partnership with MLCommons, we’re able to demonstrate our AI solutions’ performance and capabilities quarterly, showcasing our commitment to innovation and customer satisfaction. Our collaborations with industry leaders like NVIDIA and AMD on critical AI applications such as image classification, medical image segmentation, speech-to-text, and natural language processing have enabled us to achieve outstanding results.

ThinkSystem SR685A v3 with 8x NVIDIA H100 (80Gb) GPUs and the SR675 v3 with 8x NVIDIA L40s GPUs: Delivering AI-Powered Solutions

We’re proud to have participated in these challenges using our ThinkSystem SR685A v3 with 8x NVIDIA H100 (80Gb) GPUs and the SR675 v3 with 8x NVIDIA L40s GPUs. These powerful systems enable us to develop and deploy AI-powered solutions that drive business outcomes and improve customer experiences.

Partnership for Growth: MLCommons Collaboration Enhances Product Development

Our partnership with MLCommons provides valuable insights into how our AI solutions compare against the competition, sets customer expectations, and enables us to continuously enhance our products. Through this collaboration, we can work closely with industry experts to drive growth and ultimately deliver better products for our customers, who remain our top priority.

NVIDIA

The NVIDIA accelerated computing platform showed exceptional performance in MLPerf Training v4.0. The NVIDIA Eos AI SuperPOD more than tripled performance on the LLM pretraining benchmark, based on GPT-3 175B, compared to NVIDIA submissions from a year ago. Featuring 11,616 NVIDIA H100 Tensor Core GPUs connected with NVIDIA Quantum-2 InfiniBand networking, Eos achieved this through larger scale and extensive full-stack engineering. Additionally, NVIDIA’s 512 H100 GPU submissions are now 27% faster compared with just one year ago due to numerous optimizations to the NVIDIA software stack.

As enterprises seek to customize pretrained large language models, LLM fine-tuning is becoming a key industry workload. MLPerf added to this round the new LLM fine-tuning benchmark, which is based on the popular low-rank adaptation (LoRA) technique applied to Llama 2 70B. The NVIDIA platform excelled at this task, scaling from eight to 1,024 GPUs. And, in its MLPerf Training debut, the NVIDIA H200 Tensor Core GPU extended H100’s performance by 14%.

NVIDIA also accelerated Stable Diffusion v2 training performance by up to 80% at the same system scales submitted last round. These advances reflect numerous enhancements to the NVIDIA software stack. And, on the new graph neural network (GNN) test based on RGAT, the NVIDIA platform with H100 GPUs excelled at both small and large scales. H200 further accelerated single-node GNN training, delivering a 47% boost compared to H100.

Reflecting the breadth of the NVIDIA AI ecosystem, 10 NVIDIA partners submitted impressive results, including ASUSTek, Dell, Fujitsu, GigaComputing, HPE, Lenovo, Oracle, Quanta Cloud Technology, Supermicro, and Sustainable Metal Cloud.

MLCommons’ ongoing work to bring benchmarking best practices to AI computing is vital. Through enabling peer-reviewed, apples-to-apples comparisons of AI and HPC platforms, and keeping pace with the rapid change that characterizes AI computing, MLCommons provides companies everywhere with crucial data that can help guide important purchasing decisions.

Oracle

Oracle Cloud Infrastructure (OCI) offers AI Infrastructure, Generative AI, AI Services, ML Services, and AI in our Fusion Applications. Our AI infrastructure portfolio includes bare metal instances powered by NVIDIA H100, NVIDIA A100, and NVIDIA A10 GPUs. OCI also provides virtual machines powered by NVIDIA A10 GPUs. By mid-2024, we plan to add NVIDIA L40S GPU and NVIDIA GH200 Grace Hopper Superchip.

The MLPerf Training benchmark results for the high-end BM.GPU.H100.8 instance demonstrate that OCI provides high performance that at least matches that of other deployments for both on-premises and cloud infrastructure. These instances provide eight NVIDIA GPUs per node and the training performance is increased manifold due to RoCEv2 enabling efficient NCCL communications. The benchmarks were done on 1 Node, 8 Node and 16 Node clusters which correspond to 8, 64, 128 NVIDIA H100 GPUs and linear scaling was observed for the benchmarks as we scale from 1 node to 16 nodes. The GPUs are RAIL optimized. The GPU Nodes with H100 GPUs can be clustered using a high performance RDMA network for a cluster of tens of thousands of GPUs.

Quanta Cloud Technology

Quanta Cloud Technology (QCT), a global leader in data center solutions, excels in enabling HPC and AI workloads. In the latest MLPerf Training v4.0, QCT demonstrated its commitment to excellence by submitting two systems in the closed division. These submissions covered tasks in image classification, object detection, natural language processing, LLM, recommendation, image generation, and graph neural network. Both the QuantaGrid D54U-3U and QuantaGrid D74H-7U systems successfully met stringent quality targets.

The QuantaGrid D74H-7U is a dual Intel Xeon Scalable Processor server with eight-way GPUs, featuring the NVIDIA HGX H100 SXM5 module, supporting non-blocking GPUDirect RDMA and GPUDirect Storage. This makes it an ideal choice for compute-intensive AI training. Its innovative hardware design and software optimization ensure top-tier performance.

The QuantaGrid D54U-3U is a versatile 3U system that accommodates up to four dual-width or eight single-width accelerators, along with dual Intel Xeon Scalable Processors and 32 DIMM slots. This flexible architecture is tailored to optimize various AI/HPC applications. Configured with four NVIDIA H100-PCIe 80GB accelerators with NVLink bridge adapters, it achieved outstanding performance in this round.

QCT is committed to providing comprehensive hardware systems, solutions, and services to both academic and industrial users. We maintain transparency by openly sharing our MLPerf results with the public, covering both training and inference benchmarks.

Red Hat + Supermicro

Supermicro, builder of Large-Scale AI Data Center Infrastructure, and Red Hat Inc, the world’s leading provider of enterprise open source solutions, collaborated on this first ever MLPerf Training benchmark that included finetuning of LLM llama-2-70b using LoRA.

GPU A+ Server, the AS-4125GS-TNRT has flexible GPU support and configuration options: with active & passive GPUs, and dual-root or single-root configurations for up to 10 double-width, full-length GPUs. Furthermore, the dual-root configuration features directly attached eight GPUs without PLX switches to achieve the lowest latency possible and improve performance, which is hugely beneficial for demanding scenarios our customers face with AI and HPC workloads.

This submission demonstrates the delivery of performance, within the error bar of other submissions on similar hardware, while providing an exceptional Developer, User and DevOps experience.

Get access to a free 60 day trial of Red Hat OpenShift AI here.

Sustainable Metal Cloud (SMC)

Sustainable Metal Cloud, one of the newest members of ML Commons, is an AI GPU cloud developed by Singapore based Firmus Technologies using its proprietary single-phase immersion platform, named “Sustainable AI Factories”. Sustainable Metal Cloud’s operations are primarily based in Asia, with a globally expanding network of scaled GPU clusters and infrastructure – including NVIDIA H100 SXM accelerators.

Our first published MLPerf results demonstrate that when our customers train their models using our GPU cloud service, they access world-class performance with significantly reduced energy consumption. Our GPT-3 175B, 512 H100 GPU submission consumed only 468 kWh of total energy when connected with NVIDIA Quantum-2 Infiniband networking, demonstrating significant energy savings over conventional air-cooled infrastructure.

We are dedicated to advancing the agenda of energy efficiency in running and training AI. Our results, verified by MLCommons, highlight our commitment to this goal. We are very proud of our GPT3-175B total power result proving our solution scales and significantly reduces overall power use. The significant reduction in energy consumption is primarily due to the unique design of our Sustainable AI Factories.

With AI’s rapid growth, it’s crucial to address resource consumption by focusing opportunities to reduce energy usage in every facet of the AI Factory. Estimates place the energy requirements of new AI capable data centers at between 5-8GWh annually; potentially exceeding the US’s projected new power generation capacity of 3-5GWh per year.

As part of ML Commons, we aim to showcase progressive technologies, set benchmarks for best practices, and advocate for long-term energy-saving initiatives.

tiny corp

In the latest round of MLPerf Training v4.0 (closed division) benchmarks, tiny corp submitted benchmarks for ResNet50. We are proud to be the first to submit to MLPerf Training on AMD accelerators.

Our results show competitive performance between both AMD accelerators and NVIDIA accelerators, widening the choice for users to select the best accelerator, lowering the barrier to entry for high performance machine learning.

This was all achieved with tinygrad, a from scratch, backend agnostic, neural network library that simplifies neural networks down to a few basic operations, that can then be highly optimized for various hardware accelerators.

tiny corp will continue to push the envelope on machine learning performance, with a focus on democratizing access to high performance compute.