Cerebras Demonstrates Trillion Parameter Model Training on a Single CS-3 System

SUNNYVALE, Calif. and VANCOUVER, British Columbia, Dec. 11, 2024 -- At NeurIPS 2024, Cerebras Systems, in collaboration with Sandia National Laboratories, unveiled a significant achievement: the successful training of a 1-trillion-parameter AI model using the Cerebras CS-3 system.

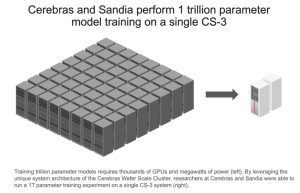

Training trillion parameter models requires thousands of GPUs and megawatts of power (left). By leveraging the unique system architecture of the Cerebras Wafer Scale Cluster, researchers at Cerebras and Sandia were able to run a 1T parameter training experiment on a single CS-3 system (right).

Trillion parameter models represent the state of the art in today’s LLMs, and training them typically requires thousands of GPUs and dozens of hardware experts. By leveraging Cerebras’ Wafer Scale Cluster technology, researchers at Sandia were able to initiate training on a single AI accelerator, a one-of-a-kind achievement for frontier model development.

“Traditionally, training a model of this scale would require thousands of GPUs, significant infrastructure complexity, and a team of AI infrastructure experts,” said Sandia researcher Siva Rajamanickam. “With the Cerebras CS-3, the team was able to achieve this feat on a single system with no changes to model or infrastructure code. The model was then scaled up seamlessly to 16 CS-3 systems, demonstrating a step-change in the linear scalability and performance of large AI models, thanks to the Cerebras Wafer-Scale Cluster.”

Trillion parameter models require terabytes of memory, orders of magnitude more than is available on a single GPU. Thousands of GPUs must be procured and connected before a single training step or model experiment can run. The Cerebras Wafer Scale Cluster uses a unique, terabyte-scale external memory device called MemoryX to store model weights, making trillion parameter models as easy to train as a small model on a GPU.
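As a rough illustration of why memory capacity is the bottleneck, the back-of-the-envelope sketch below estimates the training state for a 1-trillion-parameter model. The figures it uses are assumptions, not numbers from Cerebras or Sandia: 16 bytes per parameter is a common rule of thumb for mixed-precision Adam training (weights, gradients, and optimizer state), 80 GB is an assumed capacity for a single GPU, and terabytes are counted in decimal units. Activations and parallelism overhead are ignored. The 55 TB value is the MemoryX configuration reported for this run.

```python
# Back-of-the-envelope memory estimate. All constants marked ASSUMED are
# illustrative rules of thumb, not figures from the announcement.

ASSUMED_BYTES_PER_PARAM = 16       # mixed-precision Adam: weights + grads + optimizer state
ASSUMED_GPU_BYTES = 80 * 10**9     # hypothetical single-GPU memory capacity (80 GB)
MEMORYX_BYTES = 55 * 10**12        # 55 TB MemoryX configuration reported for the run
NUM_PARAMS = 10**12                # 1 trillion parameters


def training_state_bytes(num_params: int,
                         bytes_per_param: int = ASSUMED_BYTES_PER_PARAM) -> int:
    """Bytes needed for weights, gradients, and optimizer state."""
    return num_params * bytes_per_param


def devices_to_hold(state_bytes: int, device_bytes: int) -> int:
    """Smallest number of devices whose combined memory holds the state."""
    return -(-state_bytes // device_bytes)  # integer ceiling division


state = training_state_bytes(NUM_PARAMS)
print(f"Training state: {state / 10**12:.0f} TB")
print(f"80 GB devices needed for state alone: {devices_to_hold(state, ASSUMED_GPU_BYTES)}")
print(f"Fits in 55 TB MemoryX: {state <= MEMORYX_BYTES}")
```

Under these assumptions the model state alone spans hundreds of GPU-sized memories before any activations are stored, while it fits within a single terabyte-scale external memory device.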

For Sandia’s trillion parameter training run, Cerebras configured a 55-terabyte MemoryX device. Because the device uses commodity DDR5 memory in a 1U server format, the hardware was procured and configured in mere days. AI researchers ran initial training steps and observed improving loss and stable training dynamics. After completing the single-system run, researchers scaled training to two and then sixteen CS-3 systems with no code changes. The cluster exhibited near-linear scaling, with a 15.3x speedup on sixteen systems. Achieving the same result on GPUs typically requires thousands of devices, megawatts of power, and many weeks of hardware and software configuration.
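To put the scaling figure in context, the short sketch below converts the reported 15.3x speedup on sixteen systems into a scaling efficiency, defined here as measured speedup divided by ideal linear speedup. The function name is hypothetical and chosen for illustration; only the 15.3x and sixteen-system figures come from the announcement.

```python
def scaling_efficiency(speedup: float, num_systems: int) -> float:
    """Fraction of ideal linear speedup achieved (1.0 means perfectly linear)."""
    if num_systems <= 0:
        raise ValueError("num_systems must be positive")
    return speedup / num_systems


# Figures reported for the sixteen-system run.
efficiency = scaling_efficiency(speedup=15.3, num_systems=16)
print(f"Scaling efficiency: {efficiency:.1%}")  # prints "Scaling efficiency: 95.6%"
```

An efficiency near 1.0 means that adding systems shortens training time almost in proportion to the hardware added.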

This result highlights the one-of-a-kind power and flexibility of Cerebras hardware. In addition to delivering the industry’s fastest inference performance, the Cerebras Wafer-Scale Engine dramatically simplifies AI training and frontier model development, making it a full end-to-end solution for training, fine-tuning, and running inference on the latest AI models.

About Cerebras Systems

Cerebras Systems is a team of pioneering computer architects, computer scientists, deep learning researchers, and engineers of all types. We have come together to accelerate generative AI by building from the ground up a new class of AI supercomputer. Our flagship product, the CS-3 system, is powered by the world's largest and fastest commercially available AI processor, our Wafer-Scale Engine-3. CS-3s are quickly and easily clustered together to make the largest AI supercomputers in the world, and they make placing models on those supercomputers dead simple by avoiding the complexity of distributed computing. Cerebras Inference delivers breakthrough inference speeds, empowering customers to create cutting-edge AI applications. Leading corporations, research institutions, and governments use Cerebras solutions to develop pathbreaking proprietary models and to train open-source models with millions of downloads. Cerebras solutions are available through the Cerebras Cloud and on premises.

Source: Cerebras Systems