Intersect360 Research 2024 HPC-AI Software Survey; HPC Is All In

It’s nearly impossible to have a conversation in the high-performance computing (HPC) space (or any space for that matter) without artificial intelligence (AI) creeping into the discussion. The technology’s versatility has sent its deployment skyrocketing, and as a result, AI can seem like a miracle cure that’s come to solve all computing problems.

Of course, this is incorrect. While AI is a valuable technology, cutting through the hype is necessary if the HPC community wants to use AI to its full potential.

With this in mind, Intersect360 Research conducted the 2024 HPC-AI Software Survey. This comprehensive survey of operating environments, middleware, and end-user applications stretches across 11 domains, including the adoption of machine learning, AI, and large language models (LLMs). The survey respondents came from various sectors, with 68% coming from Commercial clients, 26% from Academic institutions, and 6% from Government.

This investigation into the real-world use of AI – along with the other topics covered in the survey – sheds light on just how valuable this technology is and where it might be headed.

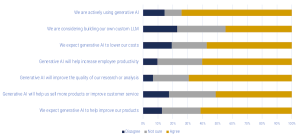

Intersect360 Research 2024 HPC-AI user survey questions and answers. (Source: Intersect360 Research)

AI Engagement and Usage

One of the least surprising pieces of information to come out of the 2024 HPC-AI Software Survey was the large percentage of respondents who are using AI in some form. Answering the question, “Is your organization engaging with AI right now, in any of its forms?” 92% of respondents replied, “Yes.” As one would imagine, AI is everywhere right now.

That said, AI is such a versatile tool that simply asking if an organization is using it isn’t enough. Digging deeper, the survey also asked respondents how they were currently using AI and what they planned to use it for in the future.

Respondents were asked to reply to the following statement: “We have integrated AI or Machine Learning into our HPC applications, such as for computational steering or simulation optimization.” Out of those surveyed, nearly 75% either agreed or strongly agreed with that statement.

Conversely, respondents were also asked to reply to the following statement: “AI/ML are separate from our HPC initiatives and applications.” A little more than 30% responded either “agree” or “strongly agree” to this statement. This comparison shows just how deeply integrated AI/ML tools are in HPC applications. Organizations are finding a lot of use for this technology in their HPC operations.

Looking to the future, it would seem that even those not currently adopting the technology plan to do so soon. Respondents were asked to reply to the following statement: “We have plans to integrate AI/ML into our HPC more closely, but we’re not there yet.” Nearly 65% of respondents either agreed or strongly agreed with that statement, showing that even those who are not currently integrating AI into their HPC applications at least have a desire to do so.

Generative AI and LLMs

While AI has a wide variety of uses, generative AI (GenAI) and LLMs are some of the most discussed applications of this technology. As such, Intersect360 Research devoted a whole section of the 2024 HPC-AI Software Survey to these applications.

The survey found that nearly 75% of respondents agreed with the statement, “We are actively using generative AI.” Nearly 55% of respondents also agreed with the statement, “We are considering building our own custom LLM.” Additionally, nearly 65% of respondents agreed they “expect generative AI to lower our costs.”

While this is only a small snapshot of the overall study, it paints a picture of what organizations are looking for from their GenAI/LLM deployments. It appears that most respondents expect this technology to save them money. Moreover, although building a custom LLM is more difficult than using an off-the-shelf product, more than half of respondents are considering it. That suggests customers want control over their LLM deployments, or at the very least think they do.

This sentiment is reinforced by the finding that 42% of respondents will be keeping their LLM deployment at their own data center colocation for development and design, 34% for testing, 35% for model training, and 31% during production.

For a full presentation of the survey results (with slides), see the full Intersect360 Research Webinar.

This article first appeared on sister site HPCwire.