What Are Reasoning Models and Why You Should Care

The meteoric rise of DeepSeek-R1 has put the spotlight on an emerging type of AI model called a reasoning model. As generative AI applications move beyond conversational interfaces, reasoning models are likely to grow in capability and use, which is why they should be on your AI radar.

A reasoning model is a type of large language model (LLM) that can perform complex reasoning tasks. Instead of quickly generating output based solely on a statistical guess of what the next word should be in an answer, as an LLM typically does, a reasoning model will take time to break a question down into individual steps and work through a “chain of thought” process to come up with a more accurate answer. In that manner, a reasoning model is much more human-like in its approach.

OpenAI debuted its first reasoning models, dubbed o1, in September 2024. In a blog post, the company explained that it used reinforcement learning (RL) techniques to train the reasoning model to handle complex tasks in mathematics, science, and coding. According to OpenAI, the model performed at the level of PhD students in physics, chemistry, and biology, and exceeded them in math and coding.

According to OpenAI, reasoning models work through problems more like a human would than earlier language models did.

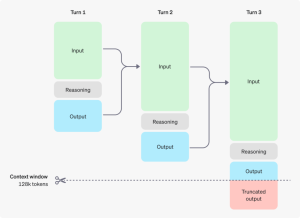

Reasoning models use a chain-of-thought process that generates additional tokens (Image source: OpenAI)

“Similar to how a human may think for a long time before responding to a difficult question, o1 uses a chain of thought when attempting to solve a problem,” OpenAI said in a technical blog post. “Through reinforcement learning, o1 learns to hone its chain of thought and refine the strategies it uses. It learns to recognize and correct its mistakes. It learns to break down tricky steps into simpler ones. It learns to try a different approach when the current one isn’t working. This process dramatically improves the model’s ability to reason.”

Kush Varshney, an IBM Fellow, says reasoning models can check themselves for correctness, which he says represents a type of “meta cognition” that didn’t previously exist in AI. “We are now starting to put wisdom into these models, and that’s a huge step,” Varshney told an IBM tech reporter in a January 27 blog post.

That level of cognitive power comes at a cost, particularly at runtime. OpenAI, for instance, charges 20x more per token for o1-mini than for GPT-4o mini. And while its o3-mini is 63% cheaper per token than o1-mini, it’s still significantly more expensive than GPT-4o mini. The per-token price is only part of the bill, too: reasoning models also generate extra tokens, dubbed reasoning tokens, during the “chain of thought” process, and those are billed as output tokens even though the user never sees them.
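To see how these factors compound, the price ratios quoted above can be turned into a quick back-of-the-envelope calculation. The sketch below is a minimal illustration, not an official price list: it assumes per-token prices expressed relative to GPT-4o mini (set to 1.0) and derived only from the “20x” and “63% cheaper” figures, applies the same ratio to every billed token as a simplification, and uses a hypothetical workload of a 500-token answer plus 2,000 reasoning tokens.

```python
import math

# Relative per-token prices, normalized so GPT-4o mini = 1.0.
# Derived only from the ratios quoted in the text (an illustrative assumption,
# not an official price list).
GPT4O_MINI = 1.0
O1_MINI = 20 * GPT4O_MINI          # "20x more for o1-mini than GPT-4o mini"
O3_MINI = O1_MINI * (1 - 0.63)     # "63% cheaper than o1-mini per token"


def response_cost(price_per_token: float, visible_tokens: int,
                  reasoning_tokens: int = 0) -> float:
    """Cost of one response in GPT-4o-mini price units.

    Assumes hidden reasoning tokens are billed at the same per-token rate
    as the visible answer tokens.
    """
    return price_per_token * (visible_tokens + reasoning_tokens)


if __name__ == "__main__":
    # Hypothetical workload: a 500-token answer. The reasoning model is
    # assumed to spend 2,000 extra reasoning tokens getting there.
    baseline = response_cost(GPT4O_MINI, visible_tokens=500)
    reasoning = response_cost(O3_MINI, visible_tokens=500, reasoning_tokens=2000)
    print(f"o3-mini per-token price vs GPT-4o mini: {O3_MINI / GPT4O_MINI:.1f}x")
    print(f"o3-mini cost for this response vs GPT-4o mini: {reasoning / baseline:.1f}x")
```

Under these assumptions, o3-mini’s per-token price works out to roughly 7.4 times GPT-4o mini’s, and the hypothetical 2,000 reasoning tokens push the cost of that single answer to about 37 times as much. The real multiplier depends on how many reasoning tokens a given prompt triggers.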

That’s one of the reasons why the introduction of DeepSeek-R1 was such a breakthrough: its computational requirements are dramatically lower. The company behind DeepSeek claims that it trained its V3 model, the base model on which R1 is built, on a small cluster of older GPUs for only $5.5 million, much less than the hundreds of millions it reportedly cost to train OpenAI’s latest GPT-4 model. And at $0.55 per million input tokens, DeepSeek-R1 costs about half as much as OpenAI’s o3-mini.

The surprising rise of DeepSeek-R1, which scored comparably to OpenAI’s o1 reasoning model on math, coding, and science tasks, is forcing AI researchers to rethink their approach to developing and scaling AI. Instead of racing to build ever-bigger LLMs that sport trillions of parameters and are trained on huge amounts of data culled from a variety of sources, the success we’re witnessing with reasoning models like DeepSeek-R1 suggests that spreading the work across a larger number of smaller expert networks, as a mixture of experts (MoE) architecture does, may be a better approach.
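The efficiency argument behind MoE is that a router activates only a few expert subnetworks for each input, so compute scales with the number of active experts rather than the total parameter count. DeepSeek reports, for example, that R1 has 671 billion total parameters but activates only about 37 billion per token. The following is a minimal, generic top-k gating sketch in plain Python; the toy experts, gate weights, and `k=2` are hypothetical choices for illustration, and this is not DeepSeek’s actual routing scheme.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, experts: Sequence[Expert],
                gate_weights: Sequence[Vector], k: int = 2) -> Tuple[Vector, List[int]]:
    """Route input x to the top-k experts and mix their outputs.

    gate_weights[i] is a hypothetical linear gating vector for expert i;
    only the k highest-scoring experts are actually evaluated.
    """
    # Router: one linear score per expert.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the k best-scoring experts.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalize the gate over the selected experts only.
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)  # unselected experts are never run
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top


def make_scaler(c: float) -> Expert:
    """Toy expert (hypothetical) that multiplies its input by a constant."""
    return lambda v: [c * vi for vi in v]


if __name__ == "__main__":
    experts = [make_scaler(c) for c in (1.0, 2.0, 3.0, 4.0)]
    gate_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
    output, chosen = moe_forward([1.0, 2.0], experts, gate_weights, k=2)
    print("experts used:", chosen)
    print("output:", output)
```

With the input `[1.0, 2.0]`, the router scores the four toy experts as 1, 2, 3, and -1, so only experts 2 and 1 run and the other two are skipped entirely. That skipping is what lets a large MoE model keep its per-token compute closer to that of a much smaller dense model.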

One of the AI leaders who is responding to the rapid changes is Ali Ghodsi. In a recent interview posted to YouTube, the Databricks CEO discussed the significance of the rise of reasoning models and DeepSeek.

“The game has clearly changed. Even in the big labs, they’re focusing all their efforts on these reasoning models,” Ghodsi says in the interview. “So no longer [focusing on] scaling laws, no longer training gigantic models. They’re actually putting their money on a lot of reasoning.”

The rise of DeepSeek and reasoning models will also have an impact on processor demand. As Ghodsi notes, if the market shifts away from training ever-bigger generalist LLMs that are jacks of all trades and toward smaller reasoning models that are distilled from those massive LLMs and then enhanced with RL techniques to become experts in specialized fields, that will inevitably change the type of hardware that’s needed.

“Reasoning just requires different kinds of chips,” Ghodsi says in the YouTube video. “It doesn’t require these networks where you have these GPUs interconnected. You can have a data center here, a data center there. You can have some GPUs over there. The game has shifted.”

GPU-maker Nvidia recognizes the effect this shift could have on its business. In a blog post, the company touts the inference performance of its GeForce RTX 50 Series GPUs for PCs, which are based on the Blackwell architecture, when running some of the smaller student models distilled from the 671-billion-parameter DeepSeek-R1 model.

“High-performance RTX GPUs make AI capabilities always available–even without an internet connection–and offer low latency and increased privacy because users don’t have to upload sensitive materials or expose their queries to an online service,” Nvidia’s Annamalai Chockalingam writes in a blog last week.

Reasoning models aren’t the only game in town, of course. There is still considerable investment in building retrieval-augmented generation (RAG) pipelines that supply LLMs with data reflecting the right context. Many organizations are working to incorporate graph databases as a source of knowledge that can be injected into LLMs, an approach known as GraphRAG. Others are moving forward with plans to fine-tune and train open source models using their own data.

But the sudden appearance of reasoning models on the AI scene definitely shakes things up. As the pace of AI evolution continues to accelerate, these sorts of surprises and shocks seem likely to become more frequent. That may make for a bumpy ride, but it will ultimately produce AI that’s more capable and useful, and that’s a good thing for us all.

This article first appeared on sister site BigDATAwire.

Alex Woodie has written about IT as a technology journalist for more than a decade. He brings extensive experience from the IBM midrange marketplace, including topics such as servers, ERP applications, programming, databases, security, high availability, storage, business intelligence, cloud, and mobile enablement. He resides in the San Diego area.