AI inference

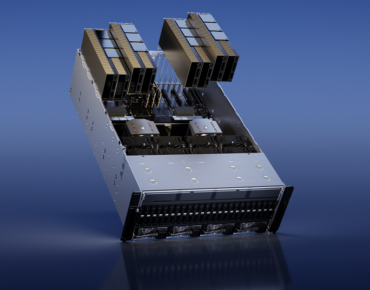

DeltaAI Unveiled: How NCSA Is Meeting the Demand for Next-Gen AI Research

The National Center for Supercomputing Applications (NCSA) at the University of Illinois Urbana-Champaign has just launched its highly anticipated DeltaAI system. DeltaAI is an advanced AI computing and data ...

The Great 8-bit Debate of Artificial Intelligence

A grand competition of numerical representation is shaping up as some companies promote floating-point data types in deep learning, while others champion integer data types. Artificial Intelligence Is ...

Nvidia Tees Up New Platforms for Generative Inference Workloads like ChatGPT

Today at its GPU Technology Conference, Nvidia discussed four new platforms designed to accelerate AI applications. Three are targeted at inference workloads for generative AI applications, including generating text, ...

What Enterprises Need to Know to Get AI Inference Right

AI is rapidly shifting from a technology used by the world’s largest service providers – like Amazon, Google, Microsoft, Netflix and Spotify – to becoming a tool that midsize ...

Better, More Efficient Neural Inferencing Means Embracing Change

Artificial intelligence is running into some age-old engineering tradeoffs. For example, increasing the accuracy of neural networks typically means increasing the size of the networks. That requires more compute ...

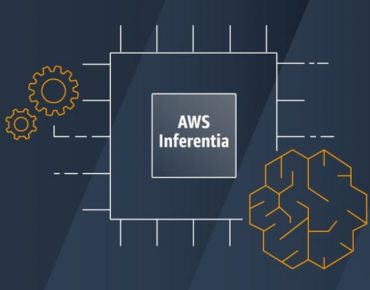

AWS-Designed Inferentia Chips Boost Alexa Performance

Almost two years after unveiling its Inferentia high-performance machine learning inference chips, Amazon has nearly completed a migration of the bulk of its Alexa text-to-speech ML inference workloads to ...

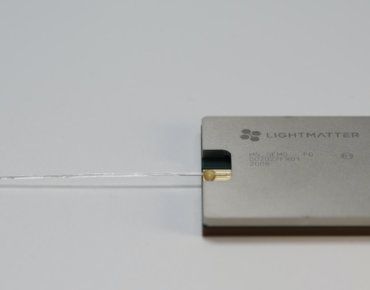

Photonics Processor Aimed at AI Inference

Silicon photonics is exhibiting greater innovation as requirements grow to enable faster, lower-power chip interconnects for traditionally power-hungry applications like AI inferencing. With that in mind, scientists at Massachusetts ...

Xilinx Keeps Pace in AI Accelerator Race

FPGAs are increasingly used to accelerate AI workloads in datacenters for tasks like machine learning inference. A growing list of FPGA accelerators is challenging datacenter GPU deployments, promising to ...

AI Inference Benchmark Bake-off Puts Nvidia on Top

MLPerf.org, the young AI-benchmarking consortium, has issued the first round of results for its inference test suite. Among organizations with submissions were Nvidia, Intel, Alibaba, Supermicro, Google, Huawei, Dell ...

At Hot Chips, Intel Shows New Nervana AI Training, Inference Chips

At the Hot Chips conference last week, Intel showcased its latest neural network processor accelerators for both AI training and inference, along with details of its hybrid chip packaging ...